Hi,

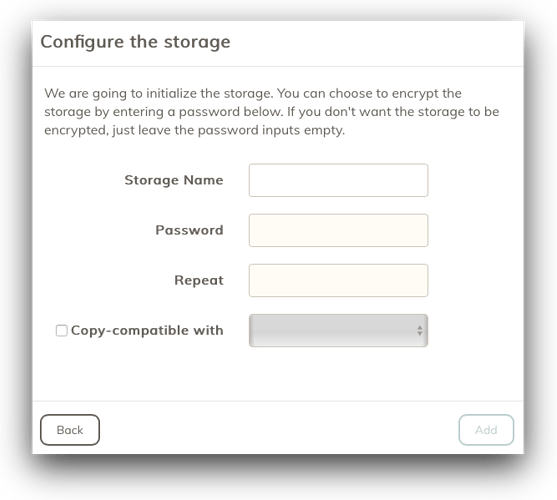

I am trying to associate a second storage backend with an existing backup in the Web GUI (1.2.1). I have one backup configured that goes to one destination (SFTP), and I have configured a second storage backend (a locally mounted drive). How do I associate this second backend with the existing backup? I went to the Backups section of the UI but couldn't see any way to associate the second backend with the existing backup.

I then created a new backup, selected the first storage (SFTP) and the existing Backup ID, and changed the storage to the second storage (the locally mounted drive). However, it seems that I still have to specify which directory to back up. Does that mean I will actually have two separate backups, each with its own includes/excludes? I want to reuse the configuration of the existing backup; I don't want to maintain two lists of includes/excludes.

Am I doing this correctly? Am I misunderstanding something? I tried searching the forums but couldn’t find anything that explained how to do this in the Web GUI.

Thank you very much!

so that’s pretty good