I tried to restore a little over 1 GB of data this morning and hit a “corrupted chunk” error. The detailed log shows the following:

2021-09-07 09:47:50.291 INFO REPOSITORY_SET Repository set to C:/Users/Primary/Downloads/Dragon

2021-09-07 09:47:50.292 INFO STORAGE_SET Storage set to odb://Duplicacy/Backups

2021-09-07 09:47:51.550 INFO SNAPSHOT_FILTER Loaded 1 include/exclude pattern(s)

2021-09-07 09:47:52.691 INFO RESTORE_INPLACE Forcing in-place mode with a non-default preference path

2021-09-07 09:47:54.716 INFO SNAPSHOT_FILTER Parsing filter file \\?\C:\ProgramData\.duplicacy-web\repositories\localhost\restore\.duplicacy\filters

2021-09-07 09:47:54.717 INFO SNAPSHOT_FILTER Loaded 0 include/exclude pattern(s)

2021-09-07 09:47:54.731 INFO RESTORE_START Restoring C:/Users/Primary/Downloads/Dragon to revision 150

2021-09-07 09:47:56.917 INFO DOWNLOAD_PROGRESS Downloaded chunk 1 size 4004593, 1.91MB/s 00:02:04 1.5%

2021-09-07 09:47:59.550 WARN DOWNLOAD_RETRY The chunk 60097f696a804bfa03a7f3fd7ac11a1ed71c5c0a525c8b51aba81ed707d39cd3 has a hash id of 5329f32af5e26113412d48b71ff4f59f294b974d6e348e6e2e06d84cc4781895; retrying

2021-09-07 09:48:01.797 WARN DOWNLOAD_RETRY The chunk 60097f696a804bfa03a7f3fd7ac11a1ed71c5c0a525c8b51aba81ed707d39cd3 has a hash id of 5329f32af5e26113412d48b71ff4f59f294b974d6e348e6e2e06d84cc4781895; retrying

2021-09-07 09:48:04.018 WARN DOWNLOAD_RETRY The chunk 60097f696a804bfa03a7f3fd7ac11a1ed71c5c0a525c8b51aba81ed707d39cd3 has a hash id of 5329f32af5e26113412d48b71ff4f59f294b974d6e348e6e2e06d84cc4781895; retrying

2021-09-07 09:48:06.212 ERROR DOWNLOAD_CORRUPTED The chunk 60097f696a804bfa03a7f3fd7ac11a1ed71c5c0a525c8b51aba81ed707d39cd3 has a hash id of 5329f32af5e26113412d48b71ff4f59f294b974d6e348e6e2e06d84cc4781895

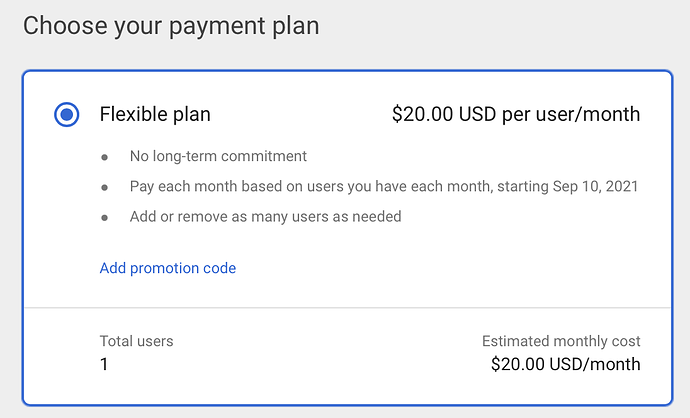
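For anyone reading along, here is how I understand the log: the client downloads the chunk, hashes the content, compares the result with the expected chunk ID, retries three times, and then gives up with `DOWNLOAD_CORRUPTED`. Below is a rough sketch of that behavior. It is not Duplicacy's actual code. I am assuming a plain SHA-256 over the chunk bytes, and `fetch`, `download_verified`, and `CorruptedChunkError` are hypothetical names I made up for illustration.

```python
import hashlib
from typing import Callable


class CorruptedChunkError(Exception):
    """Raised when a chunk still fails verification after all retries."""


def download_verified(
    chunk_id: str,
    fetch: Callable[[str], bytes],  # hypothetical downloader: chunk ID -> raw bytes
    max_retries: int = 3,           # the log above shows three retries before the error
) -> bytes:
    actual = ""
    # One initial attempt plus up to max_retries further attempts.
    for attempt in range(max_retries + 1):
        data = fetch(chunk_id)
        # Assumption: the chunk ID is the plain SHA-256 hex digest of the content.
        actual = hashlib.sha256(data).hexdigest()
        if actual == chunk_id:
            return data
        if attempt < max_retries:
            print(f"WARN The chunk {chunk_id} has a hash id of {actual}; retrying")
    raise CorruptedChunkError(f"The chunk {chunk_id} has a hash id of {actual}")
```

If this reading is right, getting the same wrong hash on every retry (as in my log) suggests the stored chunk itself is bad rather than a one-off transfer glitch.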

Note that I have a check scheduled to run after every backup. I was just about to buy a license, but this doesn’t inspire confidence that my backups are free of corruption.