I think it would definitely help, because the backup would continue rather than start over.

Right now I restart the backup manually every day, and the longer the pause between runs, the more chunks get re-uploaded instead of skipped.

For example, last night the backup reached 48% before it stopped at 3am (previously I had gotten it as high as 60%). When I restarted it at 8am, many of the early chunks were already reported as uploaded rather than skipped:

```
[2019-08-13 08:22:40 PDT] Listing all chunks
[2019-08-13 08:29:52 PDT] Skipped 582731 files from previous incomplete backup
[2019-08-13 08:29:52 PDT] Use 4 uploading threads
[2019-08-13 08:29:56 PDT] Skipped chunk 2 size 60780518, 14.49MB/s 17:37:13 0.0%
[2019-08-13 08:29:59 PDT] Skipped chunk 3 size 166162161, 30.92MB/s 08:15:26 0.0%
[2019-08-13 08:30:00 PDT] Skipped chunk 4 size 33179333, 31.01MB/s 08:13:58 0.0%
[2019-08-13 08:30:00 PDT] Skipped chunk 5 size 37355359, 35.46MB/s 07:11:55 0.0%
[2019-08-13 08:30:02 PDT] Skipped chunk 7 size 17242555, 30.01MB/s 08:30:19 0.0%
[2019-08-13 08:30:03 PDT] Uploaded chunk 1 size 103348254, 36.25MB/s 07:02:32 0.0%
[2019-08-13 08:30:05 PDT] Uploaded chunk 6 size 33483828, 33.13MB/s 07:42:19 0.0%
[2019-08-13 08:30:06 PDT] Uploaded chunk 8 size 27896830, 32.66MB/s 07:48:53 0.0%
[2019-08-13 08:30:08 PDT] Skipped chunk 9 size 268435456, 44.58MB/s 05:43:26 0.0%
[2019-08-13 08:30:12 PDT] Skipped chunk 10 size 165201060, 43.54MB/s 05:51:34 0.0%
[2019-08-13 08:30:13 PDT] Skipped chunk 11 size 33117451, 42.97MB/s 05:56:13 0.0%
[2019-08-13 08:30:14 PDT] Skipped chunk 12 size 19390558, 41.86MB/s 06:05:41 0.1%
[2019-08-13 08:30:15 PDT] Skipped chunk 13 size 58737214, 42.47MB/s 06:00:21 0.1%
[2019-08-13 08:30:17 PDT] Skipped chunk 14 size 80045607, 42.13MB/s 06:03:16 0.1%
[2019-08-13 08:30:22 PDT] Skipped chunk 15 size 177101917, 40.74MB/s 06:15:36 0.1%
[2019-08-13 08:30:32 PDT] Uploaded chunk 17 size 63400839, 32.06MB/s 07:57:10 0.1%
[2019-08-13 08:30:32 PDT] Uploaded chunk 19 size 46820720, 33.18MB/s 07:41:06 0.1%
[2019-08-13 08:30:33 PDT] Uploaded chunk 16 size 99820662, 34.69MB/s 07:20:57 0.1%
[2019-08-13 08:30:33 PDT] Uploaded chunk 18 size 71985168, 35.50MB/s 07:10:52 0.1%
[2019-08-13 08:30:37 PDT] Uploaded chunk 20 size 45592751, 34.10MB/s 07:28:33 0.1%
[2019-08-13 08:30:40 PDT] Uploaded chunk 22 size 79612882, 33.55MB/s 07:35:51 0.1%
[2019-08-13 08:30:44 PDT] Uploaded chunk 24 size 45138352, 31.80MB/s 08:00:58 0.1%
[2019-08-13 08:30:44 PDT] Uploaded chunk 21 size 173008834, 34.97MB/s 07:17:15 0.1%
[2019-08-13 08:30:48 PDT] Uploaded chunk 26 size 27600928, 32.94MB/s 07:44:09 0.2%
[2019-08-13 08:30:49 PDT] Uploaded chunk 25 size 62839868, 33.42MB/s 07:37:33 0.2%
[2019-08-13 08:30:50 PDT] Uploaded chunk 27 size 76625982, 34.10MB/s 07:28:20 0.2%
[2019-08-13 08:30:54 PDT] Uploaded chunk 23 size 172804205, 34.56MB/s 07:22:19 0.2%
[2019-08-13 08:30:58 PDT] Uploaded chunk 31 size 36910783, 33.00MB/s 07:43:13 0.2%
[2019-08-13 08:31:06 PDT] Uploaded chunk 30 size 88912128, 30.58MB/s 08:19:51 0.2%
[2019-08-13 08:31:07 PDT] Uploaded chunk 29 size 244635407, 33.28MB/s 07:39:08 0.2%
[2019-08-13 08:31:09 PDT] Uploaded chunk 32 size 102043296, 33.68MB/s 07:33:39 0.2%
[2019-08-13 08:31:10 PDT] Uploaded chunk 33 size 36717785, 33.70MB/s 07:33:24 0.2%
[2019-08-13 08:31:12 PDT] Uploaded chunk 34 size 78548417, 33.79MB/s 07:32:06 0.2%
[2019-08-13 08:31:17 PDT] Uploaded chunk 36 size 53356755, 32.40MB/s 07:51:27 0.2%
[2019-08-13 08:31:23 PDT] Uploaded chunk 28 size 80264516, 31.11MB/s 08:11:03 0.3%
[2019-08-13 08:31:23 PDT] Uploaded chunk 35 size 223258293, 33.45MB/s 07:36:35 0.3%
[2019-08-13 08:31:28 PDT] Uploaded chunk 39 size 57763404, 32.28MB/s 07:53:05 0.3%
[2019-08-13 08:31:28 PDT] Uploaded chunk 38 size 85548438, 33.13MB/s 07:40:54 0.3%
[2019-08-13 08:31:29 PDT] Uploaded chunk 40 size 44283456, 33.22MB/s 07:39:35 0.3%
[2019-08-13 08:31:32 PDT] Skipped chunk 45 size 17961339, 32.40MB/s 07:51:17 0.3%
[2019-08-13 08:31:32 PDT] Skipped chunk 46 size 21815217, 32.60MB/s 07:48:16 0.3%
[2019-08-13 08:31:34 PDT] Uploaded chunk 43 size 29388633, 32.24MB/s 07:53:33 0.3%
[2019-08-13 08:31:35 PDT] Uploaded chunk 37 size 265250236, 34.38MB/s 07:23:54 0.3%
[2019-08-13 08:31:37 PDT] Uploaded chunk 44 size 22253349, 33.93MB/s 07:29:49 0.3%
[2019-08-13 08:31:40 PDT] Uploaded chunk 47 size 68783370, 33.59MB/s 07:34:16 0.3%
[2019-08-13 08:31:42 PDT] Uploaded chunk 42 size 204630656, 34.76MB/s 07:18:59 0.4%
[2019-08-13 08:31:49 PDT] Uploaded chunk 48 size 169936753, 34.06MB/s 07:27:51 0.4%
[2019-08-13 08:31:55 PDT] Uploaded chunk 50 size 101910932, 33.19MB/s 07:39:33 0.4%
[2019-08-13 08:31:55 PDT] Uploaded chunk 41 size 88773003, 33.88MB/s 07:30:11 0.4%
[2019-08-13 08:31:56 PDT] Uploaded chunk 51 size 103656669, 34.40MB/s 07:23:16 0.4%
[2019-08-13 08:32:01 PDT] Uploaded chunk 53 size 59969268, 33.51MB/s 07:35:01 0.4%
[2019-08-13 08:32:02 PDT] Uploaded chunk 49 size 268435456, 35.23MB/s 07:12:47 0.4%
[2019-08-13 08:32:04 PDT] Uploaded chunk 52 size 131609653, 35.64MB/s 07:07:40 0.5%
[2019-08-13 08:32:10 PDT] Uploaded chunk 55 size 88771294, 34.71MB/s 07:19:10 0.5%
[2019-08-13 08:32:12 PDT] Uploaded chunk 54 size 199911899, 35.57MB/s 07:08:23 0.5%
[2019-08-13 08:32:14 PDT] Uploaded chunk 58 size 40190493, 35.34MB/s 07:11:10 0.5%
[2019-08-13 08:32:15 PDT] Uploaded chunk 56 size 148288730, 36.08MB/s 07:02:14 0.5%
[2019-08-13 08:32:17 PDT] Uploaded chunk 59 size 74478379, 36.08MB/s 07:02:18 0.5%
[2019-08-13 08:32:20 PDT] Uploaded chunk 62 size 25833619, 35.51MB/s 07:09:00 0.5%
[2019-08-13 08:33:16 PDT] Uploaded chunk 57 size 58344064, 26.04MB/s 09:45:05 0.5%
[2019-08-13 08:33:24 PDT] Uploaded chunk 64 size 40695424, 25.24MB/s 10:03:36 0.5%
[2019-08-13 08:34:19 PDT] Uploaded chunk 63 size 268435456, 21.00MB/s 12:05:16 0.6%
[2019-08-13 08:34:23 PDT] Uploaded chunk 66 size 41078410, 20.83MB/s 12:11:00 0.6%
[2019-08-13 08:43:24 PDT] [1] Maximum number of retries reached (backoff: 64, attempts: 15)
```

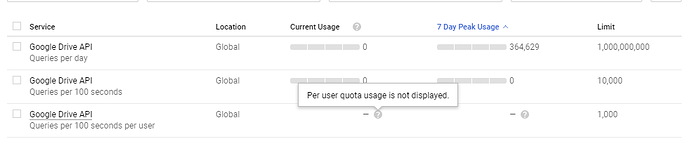

```
[2019-08-13 08:43:24 PDT] Failed to upload the chunk 1580f575fc54c646969e1b3c215011a0ddf7ac57ce9c985e04f05e4cc0c96b2c: googleapi: Error 403: User rate limit exceeded., userRateLimitExceeded
[2019-08-13 08:43:38 PDT] Incomplete snapshot saved to preferences/incomplete
```

If the backup had instead kept backing off, it would have continued rather than stopping and re-uploading the same chunks over and over. It would be much better for the backoff to handle retrying automatically than for me to restart it manually and lose progress.

should do is allow us to set the number of retries (instead of the default 15), but