I fully agree with your comment: as long as the storage is accessible from the client machine, only some sort of write-only mechanism enforced on the storage machine itself can help against typical malware, client-breach, or password-reuse scenarios. This is THE major problem with push backups to storage backends and a real showstopper for many backup strategies.

I’m currently using Duplicacy to back up to storage on a FreeNAS box, which lets me “freeze” the storage by taking ZFS snapshots of it. If the client machine were breached, the attacker could delete the whole storage and I would still be able to roll back to a ZFS snapshot from a point in time when the storage was intact.

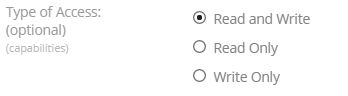
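For anyone who wants to script the snapshot step rather than click through the UI, here is a rough sketch in Python. It just shells out to the standard `zfs snapshot` command and has to run on the storage box itself, not on the client. The dataset name `tank/backups` and the `backup-` snapshot prefix are placeholders I made up, so substitute your own.

```python
import subprocess
from datetime import datetime, timezone


def snapshot_command(dataset, now=None):
    """Build the `zfs snapshot` command for a timestamped snapshot of `dataset`."""
    now = now or datetime.now(timezone.utc)
    # Snapshot names have the form <dataset>@<name>; the "backup-" prefix is arbitrary.
    name = f"{dataset}@backup-{now:%Y%m%d-%H%M%S}"
    return ["zfs", "snapshot", name]


def take_snapshot(dataset):
    """Run the snapshot command; raises CalledProcessError if zfs reports an error."""
    subprocess.run(snapshot_command(dataset), check=True)


# Example (hypothetical dataset name), e.g. called from a daily cron job:
# take_snapshot("tank/backups")
```

Recovering would then be a matter of `zfs rollback` to the chosen snapshot. Keep in mind that rolling back past newer snapshots requires `-r`, which destroys those newer snapshots.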

Another possibility might be a daily task on the storage system that sets the storage files to read-only for the backup user and chowns them to a different user, so that the backup user cannot change the permissions back. As far as I understand, this would only break “prune” commands, because “backup” commands only add files to the storage.
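A minimal sketch of what such a daily task could look like, again in Python and untested against a real Duplicacy storage. The function name `lock_down` and the idea of handing files to a separate owner (for example root) are my own assumptions, not something Duplicacy provides. The chown part needs root privileges on the storage machine.

```python
import os


def lock_down(storage_root, owner_uid=None, owner_gid=None):
    """Make every regular file under storage_root read-only.

    If owner_uid and owner_gid are given, ownership is also handed to that
    user so the backup user can no longer chmod the files back.
    Returns the number of files processed.
    """
    locked = 0
    for dirpath, _dirnames, filenames in os.walk(storage_root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            if os.path.islink(path):
                continue  # do not follow symlinks out of the storage tree
            os.chmod(path, 0o444)  # read-only for owner, group, and others
            if owner_uid is not None and owner_gid is not None:
                os.chown(path, owner_uid, owner_gid)  # requires root
            locked += 1
    return locked


# Example (hypothetical path), run daily as root on the storage machine:
# lock_down("/mnt/tank/backups", owner_uid=0, owner_gid=0)
```

One caveat: on POSIX filesystems, deleting or renaming a file is controlled by write permission on the containing directory, not on the file itself. Since the backup user still needs to write to the directories in order to add new chunks, read-only files alone only protect against overwriting. Something extra, such as the sticky bit on the directories combined with the ownership change, would be needed to block deletion as well.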