This is not as easy as it sounds.

In fact, literally just this second, I checked the Duplicacy web edition icon in the tray next to the clock. I hovered over the icon and it disappeared. Duplicacy was, in fact, not running.

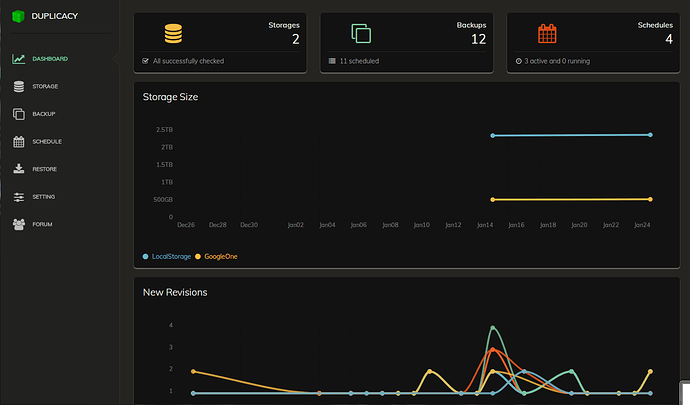

This is an issue I’ve been seeing for the past several weeks, but I haven’t been able to put my finger on the cause. My PC is on 24/7 and I leave it logged in. Once every week or two I’ll notice Duplicacy isn’t running. Why? I have no idea! The Windows event logs don’t show any crashes. My last successful backup today was at 12:15, but according to the dashboard the 14:15, 16:15 and 18:15 backups didn’t run. Sometimes a day or two will pass before I notice it isn’t running. Luckily, today I only missed a few hours.

Anyway, the point I’m trying to make… Duplicacy can’t tell you that something like this has gone wrong if it isn’t even running.

That’s the purpose of an external service like healthchecks.io. I recently set up an alert for a customer’s system running Vertical Backup (Duplicacy’s brother for ESXi hosts), using a pre-script that pings a given URL. If the ping doesn’t arrive when expected, I get an email notification. I may have to do the same on my personal machine.
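For anyone wanting to try the same thing, here is a rough sketch of what such a pre-script could look like in Python. This is not the script I used for the customer; it is just a minimal illustration. The `HC_PING_URL` environment variable and the `ping` helper are names I made up for the example, and the URL would be whatever ping URL healthchecks.io shows for your check. The sketch deliberately swallows network errors, on the assumption that a failed ping shouldn't stop the backup itself from running.

```python
import os
import urllib.request


def ping(url, timeout=10, retries=3, opener=urllib.request.urlopen):
    """Send a GET request to the ping URL; return True if it succeeded.

    `opener` is injectable only so the function can be tested offline.
    """
    for _ in range(retries):
        try:
            with opener(url, timeout=timeout) as response:
                if response.status == 200:
                    return True
        except OSError:
            # URLError, HTTPError and socket timeouts are all OSError
            # subclasses; ignore them and try again.
            pass
    return False


if __name__ == "__main__":
    # HC_PING_URL is a hypothetical variable name holding the check's
    # ping URL. If it is not set, the script does nothing.
    url = os.environ.get("HC_PING_URL")
    if url:
        ping(url)
```

The idea is that the monitoring service expects a ping on the backup schedule. If Duplicacy silently stops running, the pre-script never fires, the pings stop arriving, and the service sends the alert instead of relying on Duplicacy to report its own absence.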