Almost, except for the last statement in every line.

I feel you are missing a crucial detail about how prune works. Did you read the prune wiki page?

-keep X:Y

Each keep parameter takes two numbers. Y determines which revisions the rule applies to: those older than Y days. X determines the spacing: one revision is kept every X days, and all others are deleted.

So, -keep 7:28 means:

- Apply this rule to revisions older than 28 days.

- Delete all of those revisions except one every 7 days.
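To make the X:Y semantics concrete, here is a minimal Python sketch of a single rule applied to a history of one revision per day. `apply_keep` is a hypothetical helper, not part of Duplicacy. It assumes that "older than Y days" means at least Y days old and that kept revisions are spaced X days apart starting from the oldest one; Duplicacy's actual bookkeeping may differ in the details.

```python
def apply_keep(ages, x, y):
    """Simplified model of a single `-keep X:Y` rule (hypothetical helper).

    `ages` are revision ages in whole days. Revisions younger than Y days are
    untouched; among the older ones, one revision is kept every X days
    (X == 0 keeps none), starting from the oldest.
    """
    kept = []
    last_kept = None  # age of the most recent revision kept by this rule
    for age in sorted(ages, reverse=True):  # oldest first
        if age < y:
            kept.append(age)  # the rule does not apply to young revisions
        elif x > 0 and (last_kept is None or last_kept - age >= x):
            kept.append(age)  # at least X days after the last kept one
            last_kept = age
        # anything else would be deleted by this rule
    return sorted(kept)


# One revision per day for the last 60 days, pruned with -keep 7:28.
kept = apply_keep(range(60), x=7, y=28)
print([a for a in kept if a >= 28])
```

Under these assumptions it prints `[31, 38, 45, 52, 59]`: everything younger than 28 days survives, and of the older revisions only one per week remains.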

Because Duplicacy tries each option in order, options with a larger Y must appear first to have any effect.
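Since the order matters, one quick way to sanity-check a prune string is to extract the Y values and confirm that they strictly decrease. This is a hypothetical Python helper: `parse_keep` and `is_ordered` are illustrative names, not Duplicacy functions, and the regular expression only recognizes the `-keep X:Y` form used in this thread.

```python
import re


def parse_keep(options):
    """Extract (X, Y) pairs from a string of `-keep X:Y` options."""
    return [(int(x), int(y)) for x, y in re.findall(r"-keep\s+(\d+):(\d+)", options)]


def is_ordered(pairs):
    """True if the Y values strictly decrease, so no rule shadows a later one."""
    ys = [y for _, y in pairs]
    return all(earlier > later for earlier, later in zip(ys, ys[1:]))


print(is_ordered(parse_keep("-keep 7:7 -keep 0:365")))  # False
print(is_ordered(parse_keep("-keep 0:1095 -keep 365:365 -keep 30:30 -keep 7:7")))  # True
```

Requiring a strict decrease also flags two rules with the same Y, since the second one could never match anything.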

If you write

-keep 7:7 -keep 0:365

Duplicacy will keep one revision every week forever. The second option never gets a chance to work: revisions older than 365 days are also older than 7 days, so the first rule matches them first.
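The shadowing effect can be reproduced with a small simulation. This is a simplified Python model, not Duplicacy's code. `prune` is a hypothetical helper that assumes each revision is handled by the first rule, in the order given, whose Y it meets (an age of at least Y days), that revisions matching no rule are kept, and that each rule keeps one revision every X days starting from the oldest.

```python
def prune(ages, rules):
    """Simplified first-match model of a list of `-keep X:Y` rules.

    `ages` are revision ages in whole days; `rules` is a list of (X, Y)
    pairs in the order they appear on the command line.
    """
    kept = []
    last_kept = {}  # rule index -> age of the last revision that rule kept
    for age in sorted(ages, reverse=True):  # oldest first
        # First rule (in order) whose Y threshold this revision meets.
        idx = next((i for i, (_, y) in enumerate(rules) if age >= y), None)
        if idx is None:
            kept.append(age)  # no rule applies: keep it
            continue
        x = rules[idx][0]
        prev = last_kept.get(idx)
        if x > 0 and (prev is None or prev - age >= x):
            kept.append(age)
            last_kept[idx] = age
    return sorted(kept)


daily = range(800)  # one revision per day for roughly two years
wrong = prune(daily, [(7, 7), (0, 365)])
right = prune(daily, [(0, 365), (7, 7)])
print(max(wrong), max(right))
```

Under these assumptions it prints `799 364`: with `-keep 7:7` first, weekly revisions survive all the way back, while putting `-keep 0:365` first removes everything 365 days or older.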

So let’s read the text of the last row and build the prune string.

We go from the end.

Delete all revisions older than 0 days, but keep one every week. This is a weird policy, but that’s what you requested:

-keep 7:0

I would instead start doing this only after a revision is at least 1 week old:

-keep 7:7

This should obviously apply to revisions older than 30 days, so that the younger ones are handled by the rule above:

-keep 30:30 -keep 7:7

This should apply to revisions older than 1 year (so that younger ones are handled by the rest of the rules):

-keep 365:365 -keep 30:30 -keep 7:7

This is straightforward:

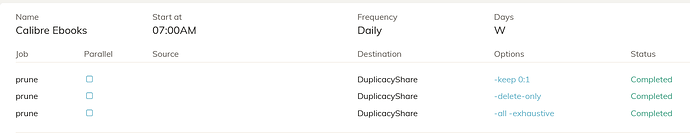

-keep 0:1095 -keep 365:365 -keep 30:30 -keep 7:7
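To double-check the final string, a simplified first-match model can be run against a hypothetical history of one revision per day for four years. `prune` is an illustrative helper, not Duplicacy's implementation. It assumes that rules are tried in the listed order, that a rule applies once a revision is at least Y days old, and that kept revisions are spaced X days apart starting from the oldest.

```python
def prune(ages, rules):
    """Simplified first-match model of a list of `-keep X:Y` rules.

    `ages` are revision ages in whole days; `rules` is a list of (X, Y)
    pairs in the order they appear on the command line.
    """
    kept = []
    last_kept = {}  # rule index -> age of the last revision that rule kept
    for age in sorted(ages, reverse=True):  # oldest first
        # First rule (in order) whose Y threshold this revision meets.
        idx = next((i for i, (_, y) in enumerate(rules) if age >= y), None)
        if idx is None:
            kept.append(age)  # younger than every Y: keep it
            continue
        x = rules[idx][0]
        prev = last_kept.get(idx)
        if x > 0 and (prev is None or prev - age >= x):
            kept.append(age)
            last_kept[idx] = age
    return sorted(kept)


# -keep 0:1095 -keep 365:365 -keep 30:30 -keep 7:7
policy = [(0, 1095), (365, 365), (30, 30), (7, 7)]
kept = prune(range(4 * 365), policy)
print(len(kept), max(kept))
```

Under these assumptions it prints `25 1094`: nothing 1,095 days or older survives, two yearly revisions remain, then monthly and weekly ones, and the last week is untouched.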

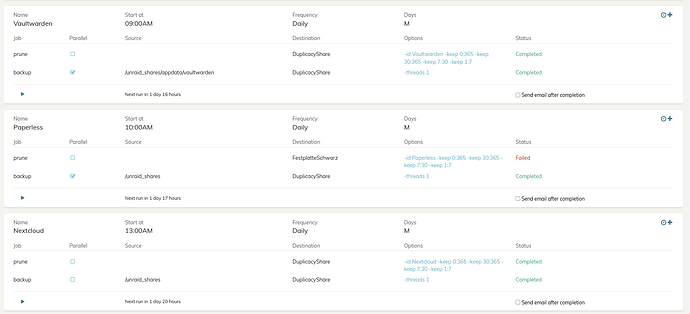

That said, I’m not sure why you are complicating the rules so much. You won’t save much space, if any, with prune, because Duplicacy deduplicates everything. Pick one rule and apply it everywhere.

A good starting point is

-keep 7:30 -keep 1:1

This will keep all backups within the same day, then daily backups for the past month, and weekly backups thereafter forever. This is a pretty universally useful approach and works for most people. It also happens to be the default with several backup tools, including macOS Time Machine.
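With ages measured in hours, a small sketch shows the shape of `-keep 7:30 -keep 1:1` on a hypothetical history of hourly backups over 90 days. `prune_hours` is an illustrative helper, not part of Duplicacy. It assumes that rules are tried in the listed order, that a rule applies once a revision is at least Y days old, and that kept revisions are spaced X days apart starting from the oldest.

```python
HOURS_PER_DAY = 24


def prune_hours(ages_h, rules):
    """Simplified first-match model of `-keep X:Y` rules, with ages in hours."""
    kept = []
    last_kept = {}  # rule index -> age (hours) of the last revision it kept
    for age in sorted(ages_h, reverse=True):  # oldest first
        idx = next(
            (i for i, (_, y) in enumerate(rules) if age >= y * HOURS_PER_DAY), None
        )
        if idx is None:
            kept.append(age)  # younger than every Y: keep everything
            continue
        interval = rules[idx][0] * HOURS_PER_DAY
        prev = last_kept.get(idx)
        if interval > 0 and (prev is None or prev - age >= interval):
            kept.append(age)
            last_kept[idx] = age
    return sorted(kept)


# -keep 7:30 -keep 1:1 applied to hourly backups over 90 days
kept = prune_hours(range(90 * HOURS_PER_DAY), [(7, 30), (1, 1)])
last_day = [a for a in kept if a < 24]
past_month = [a for a in kept if 24 <= a < 30 * 24]
older = [a for a in kept if a >= 30 * 24]
print(len(last_day), len(past_month), len(older))
```

Under these assumptions it prints `24 29 9`: every hourly backup from the last day, one per day for the rest of the month, and one per week beyond that.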