I just started using @saspus's Docker container on Unraid.

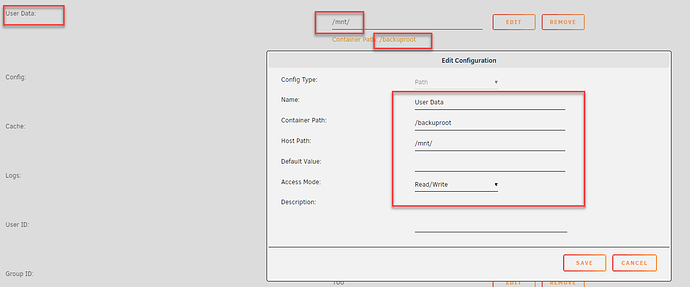

The configuration seemed self-explanatory: I mapped the backuproot to /mnt/user and left the rest of the paths at their defaults. Then I:

- started the container and accessed the WebGUI

- set up the source: a 3-drive Unraid array (2 data + 1 parity)

- set up the destination: a separate ZFS pool, a 2-drive mirror (supported natively as of Unraid v6.12)

- configured the job

- started the first backup

After the indexing was done, I immediately noticed slow speeds: 20 MB/s, with 18 hours left for 1.25 TB of data. Yes, technically it's an HDD-to-HDD copy, so I'm not expecting hundreds of MB/s, but not 20 MB/s either.
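As a quick sanity check, the remaining-time estimate is consistent with the reported speed. The sketch below assumes decimal units (1 TB = 1,000,000 MB); the 100 MB/s figure is only a hypothetical comparison point, not something I measured.

```python
def eta_hours(size_tb: float, speed_mb_s: float) -> float:
    """Estimated transfer time in hours, assuming decimal units (1 TB = 1,000,000 MB)."""
    size_mb = size_tb * 1_000_000
    return size_mb / speed_mb_s / 3600


# 1.25 TB at the observed 20 MB/s
print(f"{eta_hours(1.25, 20):.1f} h")   # 17.4 h, in line with the ~18 hrs shown

# Same data at a hypothetical 100 MB/s, for comparison only
print(f"{eta_hours(1.25, 100):.1f} h")  # 3.5 h
```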

I go to the Unraid dashboard to look at the reads/writes and see this:

Reads at 20 MB/s and writes at 20 MB/s, both occurring on the same source array! The ZFS pool is not registering any writes!

So I open the console and browse to my backup destination subfolder on the ZFS pool: /mnt/recpool/duplicacy-backup

It is empty!

I start browsing around to locate the actual destination, where the backup is being written. I find it on disk 1 of the Unraid array, in a folder with the same name as the one I created on recpool: duplicacy-backup.
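The manual check above could be scripted roughly as follows. This is only a sketch: `describe_dir` is a hypothetical helper, and `/mnt/disk1/duplicacy-backup` is my assumption about where disk 1 is mounted, so adjust the paths to your system. A differing `device` value indicates the two folders sit on different filesystems.

```python
import os


def describe_dir(path: str) -> dict:
    """Report whether a directory exists, how many entries it holds, and its device ID."""
    if not os.path.isdir(path):
        return {"path": path, "exists": False, "entries": 0, "device": None}
    return {
        "path": path,
        "exists": True,
        "entries": len(os.listdir(path)),   # 0 means the folder is empty
        "device": os.stat(path).st_dev,      # differs between separate filesystems
    }


if __name__ == "__main__":
    candidates = [
        "/mnt/recpool/duplicacy-backup",  # intended destination on the ZFS pool
        "/mnt/disk1/duplicacy-backup",    # assumed mount point of array disk 1
    ]
    for candidate in candidates:
        print(describe_dir(candidate))
```

Run from the Unraid console while the backup is active, an empty intended destination next to a growing folder on the array disk would match what I saw.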

In my next comment I will describe how I am able to reproduce the problem.