Planning a re-work of my backup systems and have been reading about and playing with Duplicacy, though a lot of my criteria could be covered by Rclone as well.

Data:

- 1.5-2 TB of mostly raw photos and videos (various codecs, but usually H.264 or H.265)

- 0.5-1 TB of non-photo/non-video content such as Lightroom catalogs, code, documents, config files, and home-lab backups, all of which compress fairly well.

Hardware:

Criteria:

- Integrity: Must not propagate corruption if at all possible.

- In the past I’ve had files get corrupted and sometimes propagate their way through my backup system. Granted, that’s largely due to a lack of proper checks and simple copy/clone tools faithfully passing the corrupted files along. So I’d like something built in that can prevent or at least mitigate this.
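One generic way to keep a corrupted file from silently flowing down the chain is to keep a hash manifest of the source and compare it before each copy. Below is a minimal Python sketch of that idea. It is not how Duplicacy or Rclone work internally, and the function names and the "content changed but size and mtime didn't" heuristic are assumptions for illustration.

```python
from __future__ import annotations

import hashlib
from pathlib import Path


def sha256_of(path: Path, bufsize: int = 1 << 20) -> str:
    """Hash a file in 1 MiB blocks so large videos never need to fit in RAM."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        while block := f.read(bufsize):
            h.update(block)
    return h.hexdigest()


def build_manifest(root: Path) -> dict[str, tuple[int, int, str]]:
    """Map each file's relative path to (size, mtime_ns, sha256)."""
    manifest = {}
    for p in sorted(root.rglob("*")):
        if p.is_file():
            st = p.stat()
            rel = p.relative_to(root).as_posix()
            manifest[rel] = (st.st_size, st.st_mtime_ns, sha256_of(p))
    return manifest


def suspect_corruption(old: dict, new: dict) -> list[str]:
    """Return files whose content changed while size and mtime did not.

    A normal edit usually bumps the mtime; silent corruption (bit rot, a failing
    disk) usually doesn't, so these files should be checked before being copied on.
    """
    suspects = []
    for rel, (size, mtime, digest) in new.items():
        prev = old.get(rel)
        if prev is not None and prev[:2] == (size, mtime) and prev[2] != digest:
            suspects.append(rel)
    return suspects
```

In use, `build_manifest` would run on the source before each backup, the result would be saved (e.g. as JSON), and anything `suspect_corruption` flags would be held back instead of copied. This only catches *silent* changes: a file mangled by an application that also updated its mtime looks like a normal edit.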

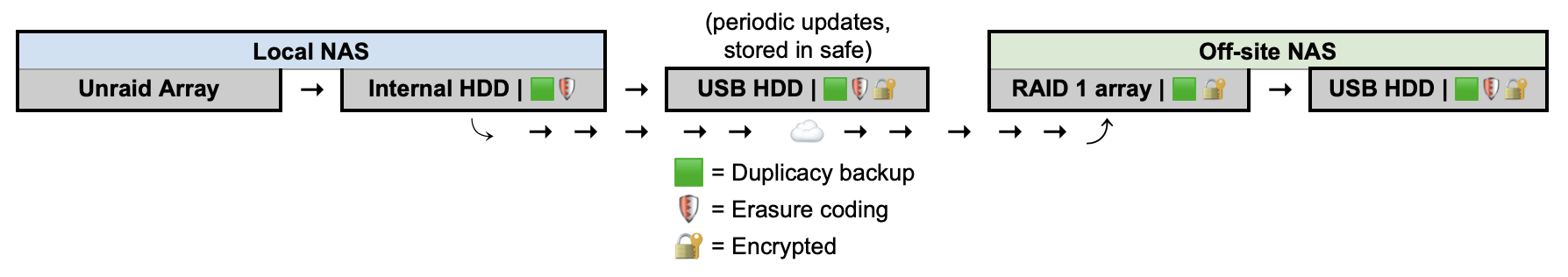

- I like the idea of using Duplicacy’s erasure coding for the stand-alone disks in the chain, since it can help with integrity on non-redundant storage. I realize some consider it a band-aid, but I think it’s reasonable for these particular devices.

- Tooling: Ideally a single backup tool would manage the entire flow. I’d rather not use, say, Duplicacy for one set of data and rclone + custom scripts for another.

- Encryption: Required for devices outside the local NAS.

- Deduplication: Not a must, since a large portion of the data isn’t easily deduplicable, but in my tests it reduced the overall backup size by anywhere from 150 to 300 GB, so it’s not nothing.

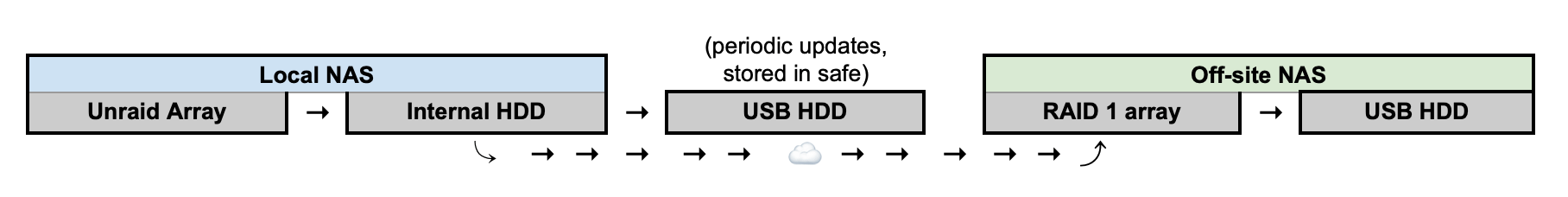
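Those savings come from chunk-level deduplication: files are split into chunks, each chunk is stored under its content hash, and a chunk that already exists in storage is not written again. Duplicacy splits files into variable-size chunks using a rolling hash, so an edit only changes the chunks around it. The sketch below is a simplified, hypothetical version of that idea (a gear-style rolling hash with made-up size parameters), not Duplicacy’s actual chunker or its defaults.

```python
from __future__ import annotations

import hashlib
import random

# Hypothetical gear table: 256 fixed pseudo-random 32-bit values.
_rng = random.Random(42)
GEAR = [_rng.getrandbits(32) for _ in range(256)]


def content_defined_chunks(data: bytes, mask_bits: int = 12,
                           min_size: int = 1024, max_size: int = 65536):
    """Yield chunks whose cut points depend on the bytes, not on fixed offsets.

    A cut is made when the low mask_bits of the rolling hash are all zero,
    so chunks average roughly min_size + 2**mask_bits bytes.
    """
    mask = (1 << mask_bits) - 1
    start = 0
    h = 0
    for i, b in enumerate(data):
        h = ((h << 1) + GEAR[b]) & 0xFFFFFFFF  # older bytes shift out of the hash
        size = i - start + 1
        if size >= max_size or (size >= min_size and (h & mask) == 0):
            yield data[start:i + 1]
            start = i + 1
    if start < len(data):
        yield data[start:]


def backup(data: bytes, chunk_store: dict[str, bytes]) -> int:
    """Store only chunks not already present; return the number of new bytes written."""
    written = 0
    for chunk in content_defined_chunks(data):
        key = hashlib.sha256(chunk).hexdigest()
        if key not in chunk_store:
            chunk_store[key] = chunk
            written += len(chunk)
    return written
```

Backing up a second version with a few bytes inserted in the middle writes only the chunk(s) around the insertion, because cut points after it line up with the same content again. With fixed-size blocks, every block after the insertion point would shift and look new. Already-compressed H.264/H.265 footage rarely shares chunks with anything else, which fits dedup helping less for that part of the data.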

I like Duplicacy’s technical implementation and feature set, so I’m considering something like this.
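On the erasure coding for stand-alone disks: each chunk is split into data shards plus parity shards, and it can be rebuilt as long as enough shards survive. Duplicacy’s implementation is, to my understanding, Reed-Solomon based with a configurable data:parity ratio. The Python sketch below swaps in the simplest possible scheme, a single XOR parity shard that can rebuild exactly one lost shard. A corrupted shard first has to be detected (e.g. via a per-shard checksum) before it can be treated as missing.

```python
from __future__ import annotations

from functools import reduce


def xor_bytes(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


def encode(chunk: bytes, k: int) -> list[bytes]:
    """Split chunk into k equal data shards (zero-padded) plus one XOR parity shard."""
    shard_len = -(-len(chunk) // k)  # ceiling division
    padded = chunk.ljust(shard_len * k, b"\0")
    shards = [padded[i * shard_len:(i + 1) * shard_len] for i in range(k)]
    parity = reduce(xor_bytes, shards)
    return shards + [parity]


def decode(shards: list[bytes | None], original_len: int) -> bytes:
    """Rebuild the chunk when at most one shard (data or parity) is missing.

    XOR of all k+1 shards is zero, so a missing shard is the XOR of the others.
    """
    missing = [i for i, s in enumerate(shards) if s is None]
    if len(missing) > 1:
        raise ValueError("single parity can only recover one lost shard")
    if missing:
        present = [s for s in shards if s is not None]
        shards = list(shards)
        shards[missing[0]] = reduce(xor_bytes, present)
    data = b"".join(shards[:-1])  # drop the parity shard
    return data[:original_len]    # strip the zero padding
```

With k data shards and m parity shards, Reed-Solomon can recover up to m lost shards per chunk at a storage overhead of m/k; the XOR sketch is the m = 1 case. For example, a 5:2 ratio costs about 40% extra space.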

Much of this could also be done with Rclone (minus deduplication), but it seems it would require more custom configuration for some parts? I haven’t gone as far down the Rclone rabbit hole as with Duplicacy, but I believe there are some differences in how checksumming and integrity checks are handled?