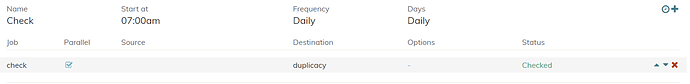

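It ran this morning with `-d`. It looks like almost all of the time is spent listing chunks. The "Listing all chunks" step starts at 07:00:04, and the next step does not begin until 10:56:58, nearly four hours later. Once that step is complete, the rest of the check finishes in a couple of minutes (it ends at 10:59:34).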

Running check command from /cache/localhost/all

Options: [-log -d check -storage duplicacy -a -tabular]

2020-05-29 **07:00**:01.262 INFO STORAGE_SET Storage set to gcd://backup

2020-05-29 07:00:01.349 DEBUG PASSWORD_ENV_VAR Reading the environment variable DUPLICACY_DUPLICACY_GCD_TOKEN

2020-05-29 07:00:03.571 DEBUG PASSWORD_ENV_VAR Reading the environment variable DUPLICACY_DUPLICACY_GCD_TOKEN

2020-05-29 07:00:03.571 DEBUG PASSWORD_ENV_VAR Reading the environment variable DUPLICACY_DUPLICACY_PASSWORD

2020-05-29 07:00:04.415 TRACE CONFIG_ITERATIONS Using 16384 iterations for key derivation

2020-05-29 07:00:04.610 DEBUG STORAGE_NESTING Chunk read levels: [1], write level: 1

2020-05-29 07:00:04.620 INFO CONFIG_INFO Compression level: 100

2020-05-29 07:00:04.620 INFO CONFIG_INFO Average chunk size: 4194304

2020-05-29 07:00:04.620 INFO CONFIG_INFO Maximum chunk size: 16777216

2020-05-29 07:00:04.620 INFO CONFIG_INFO Minimum chunk size: 1048576

2020-05-29 07:00:04.620 INFO CONFIG_INFO Chunk seed: <DELETED>

2020-05-29 07:00:04.620 TRACE CONFIG_INFO Hash key: <DELETED>

2020-05-29 07:00:04.620 TRACE CONFIG_INFO ID key: <DELETED>

2020-05-29 07:00:04.620 TRACE CONFIG_INFO File chunks are encrypted

2020-05-29 07:00:04.620 TRACE CONFIG_INFO Metadata chunks are encrypted

2020-05-29 07:00:04.620 DEBUG PASSWORD_ENV_VAR Reading the environment variable DUPLICACY_DUPLICACY_PASSWORD

2020-05-29 07:00:04.631 DEBUG LIST_PARAMETERS id: , revisions: [], tag: , showStatistics: false, showTabular: true, checkFiles: false, searchFossils: false, resurrect: false

**2020-05-29 07:00:04.631 INFO SNAPSHOT_CHECK Listing all chunks**

**2020-05-29 07:00:04.631 TRACE LIST_FILES Listing chunks/**

**2020-05-29 10:56:58.042 TRACE SNAPSHOT_LIST_IDS Listing all snapshot ids**

2020-05-29 10:56:58.281 TRACE SNAPSHOT_LIST_REVISIONS Listing revisions for snapshot tv

2020-05-29 10:56:58.467 TRACE SNAPSHOT_LIST_REVISIONS Listing revisions for snapshot movies

2020-05-29 10:56:58.916 DEBUG DOWNLOAD_FILE_CACHE Loaded file snapshots/movies/1 from the snapshot cache

2020-05-29 10:56:59.097 DEBUG DOWNLOAD_FILE_CACHE Loaded file snapshots/movies/2 from the snapshot cache

2020-05-29 10:56:59.342 DEBUG DOWNLOAD_FILE_CACHE Loaded file snapshots/movies/3 from the snapshot cache

2020-05-29 10:56:59.544 DEBUG DOWNLOAD_FILE_CACHE Loaded file snapshots/movies/4 from the snapshot cache

2020-05-29 10:56:59.723 DEBUG DOWNLOAD_FILE_CACHE Loaded file snapshots/movies/5 from the snapshot cache

2020-05-29 10:56:59.938 DEBUG DOWNLOAD_FILE_CACHE Loaded file snapshots/movies/6 from the snapshot cache

2020-05-29 10:56:59.938 TRACE SNAPSHOT_LIST_REVISIONS Listing revisions for snapshot audiobooks

2020-05-29 10:57:00.653 DEBUG DOWNLOAD_FILE_CACHE Loaded file snapshots/audiobooks/1 from the snapshot cache

2020-05-29 10:57:00.829 DEBUG DOWNLOAD_FILE_CACHE Loaded file snapshots/audiobooks/2 from the snapshot cache

2020-05-29 10:57:01.022 DEBUG DOWNLOAD_FILE_CACHE Loaded file snapshots/audiobooks/3 from the snapshot cache

2020-05-29 10:57:01.196 DEBUG DOWNLOAD_FILE_CACHE Loaded file snapshots/audiobooks/4 from the snapshot cache

2020-05-29 10:57:01.498 DEBUG DOWNLOAD_FILE_CACHE Loaded file snapshots/audiobooks/5 from the snapshot cache

2020-05-29 10:57:01.815 DEBUG DOWNLOAD_FILE_CACHE Loaded file snapshots/audiobooks/6 from the snapshot cache

2020-05-29 10:57:02.135 DEBUG DOWNLOAD_FILE_CACHE Loaded file snapshots/audiobooks/7 from the snapshot cache

2020-05-29 10:57:02.329 DEBUG DOWNLOAD_FILE_CACHE Loaded file snapshots/audiobooks/8 from the snapshot cache

2020-05-29 10:57:02.595 DEBUG DOWNLOAD_FILE_CACHE Loaded file snapshots/audiobooks/9 from the snapshot cache

2020-05-29 10:57:02.889 DEBUG DOWNLOAD_FILE_CACHE Loaded file snapshots/audiobooks/10 from the snapshot cache

2020-05-29 10:57:03.141 DEBUG DOWNLOAD_FILE_CACHE Loaded file snapshots/audiobooks/11 from the snapshot cache

2020-05-29 10:57:03.141 TRACE SNAPSHOT_LIST_REVISIONS Listing revisions for snapshot bootcamp

2020-05-29 10:57:07.788 DEBUG DOWNLOAD_FILE_CACHE Loaded file snapshots/bootcamp/1 from the snapshot cache

2020-05-29 10:57:07.788 TRACE SNAPSHOT_LIST_REVISIONS Listing revisions for snapshot wedding

2020-05-29 10:57:08.089 DEBUG DOWNLOAD_FILE_CACHE Loaded file snapshots/wedding/1 from the snapshot cache

2020-05-29 10:57:08.090 TRACE SNAPSHOT_LIST_REVISIONS Listing revisions for snapshot docker

2020-05-29 10:57:08.478 DEBUG DOWNLOAD_FILE_CACHE Loaded file snapshots/docker/4 from the snapshot cache

2020-05-29 10:57:08.660 DEBUG DOWNLOAD_FILE_CACHE Loaded file snapshots/docker/5 from

... (lots more of this) ...

2020-05-29 **10:59:34**.226 INFO SNAPSHOT_CHECK
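To check where the time goes without reading timestamps by eye, here is a rough Python sketch. It parses a `-d` log and reports the longest gap between consecutive lines. The sketch assumes every relevant line starts with `YYYY-MM-DD HH:MM:SS.mmm`, as the lines above do once the forum bold markers are removed. `parse_line`, `largest_gap` and `SAMPLE` are hypothetical names, and `SAMPLE` is just a handful of lines copied from this log.

```python
from datetime import datetime, timedelta
from typing import List, Optional, Tuple

# Assumed layout, based on the log above:
# "YYYY-MM-DD HH:MM:SS.mmm LEVEL LOG_ID message"
TS_FORMAT = "%Y-%m-%d %H:%M:%S.%f"


def parse_line(line: str) -> Optional[Tuple[datetime, str]]:
    """Return (timestamp, rest of line), or None if the line has no timestamp."""
    parts = line.split(" ", 2)
    if len(parts) < 3:
        return None
    try:
        ts = datetime.strptime(parts[0] + " " + parts[1], TS_FORMAT)
    except ValueError:
        return None
    return ts, parts[2]


def largest_gap(lines: List[str]) -> Optional[Tuple[timedelta, str, str]]:
    """Find the consecutive pair of timestamped lines with the longest gap."""
    parsed = [p for p in (parse_line(l) for l in lines) if p is not None]
    best = None
    for (t0, msg0), (t1, msg1) in zip(parsed, parsed[1:]):
        gap = t1 - t0
        if best is None or gap > best[0]:
            best = (gap, msg0, msg1)
    return best


# A few lines copied from the log in this post (bold markers removed).
SAMPLE = [
    "2020-05-29 07:00:01.262 INFO STORAGE_SET Storage set to gcd://backup",
    "2020-05-29 07:00:04.631 INFO SNAPSHOT_CHECK Listing all chunks",
    "2020-05-29 07:00:04.631 TRACE LIST_FILES Listing chunks/",
    "2020-05-29 10:56:58.042 TRACE SNAPSHOT_LIST_IDS Listing all snapshot ids",
    "2020-05-29 10:56:58.281 TRACE SNAPSHOT_LIST_REVISIONS Listing revisions for snapshot tv",
    "2020-05-29 10:59:34.226 INFO SNAPSHOT_CHECK",
]

if __name__ == "__main__":
    gap, before, after = largest_gap(SAMPLE)
    print(f"Longest gap: {gap}")  # Longest gap: 3:56:53.411000
    print("  after: ", before)
    print("  before:", after)
```

To run it against a full log, pass `open(path).read().splitlines()` to `largest_gap` in place of `SAMPLE`. On these lines the longest gap falls between `Listing chunks/` and `Listing all snapshot ids`, which agrees with the observation that the chunk listing is the slow part.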