I created an unscheduled schedule to run a massive one-off `check -a -files` against a local storage. After a few days of running, it simply stopped about halfway through, seemingly cleanly:

Log:

Running check command from /var/services/homes/duplicacy-web/.duplicacy-web/repositories/localhost/all

Options: [-log check -storage tuchka -a -files -a -tabular]

2020-05-12 18:56:59.958 INFO STORAGE_SET Storage set to /volume1/Backups/duplicacy

2020-05-12 18:57:00.020 INFO SNAPSHOT_CHECK Listing all chunks

2020-05-12 18:57:30.480 INFO SNAPSHOT_CHECK 5 snapshots and 283 revisions

2020-05-12 18:57:30.492 INFO SNAPSHOT_CHECK Total chunk size is 1425G in 302414 chunks

2020-05-12 22:03:48.242 INFO SNAPSHOT_VERIFY All files in snapshot users-imac at revision 86 have been successfully verified

2020-05-13 00:59:06.755 INFO SNAPSHOT_VERIFY All files in snapshot users-imac at revision 126 have been successfully verified

2020-05-13 03:28:45.316 INFO SNAPSHOT_VERIFY All files in snapshot users-imac at revision 135 have been successfully verified

2020-05-13 06:18:24.769 INFO SNAPSHOT_VERIFY All files in snapshot users-imac at revision 150 have been successfully verified

2020-05-13 09:18:54.001 INFO SNAPSHOT_VERIFY All files in snapshot users-imac at revision 172 have been successfully verified

2020-05-13 11:50:41.796 INFO SNAPSHOT_VERIFY All files in snapshot alex-iMacPro at revision 1 have been successfully verified

2020-05-13 14:10:00.223 INFO SNAPSHOT_VERIFY All files in snapshot alex-iMacPro at revision 6 have been successfully verified

2020-05-13 17:22:34.653 INFO SNAPSHOT_VERIFY All files in snapshot alex-iMacPro at revision 13 have been successfully verified

2020-05-13 20:44:23.588 INFO SNAPSHOT_VERIFY All files in snapshot alex-iMacPro at revision 14 have been successfully verified

2020-05-13 22:07:25.730 INFO SNAPSHOT_VERIFY All files in snapshot alex-imac at revision 146 have been successfully verified

2020-05-14 00:35:32.020 INFO SNAPSHOT_VERIFY All files in snapshot alex-imac at revision 860 have been successfully verified

2020-05-14 01:49:28.674 INFO SNAPSHOT_VERIFY All files in snapshot alex-imac at revision 1257 have been successfully verified

2020-05-14 03:13:38.341 INFO SNAPSHOT_VERIFY All files in snapshot alex-imac at revision 1803 have been successfully verified

2020-05-14 04:41:10.916 INFO SNAPSHOT_VERIFY All files in snapshot alex-imac at revision 2094 have been successfully verified

2020-05-14 05:40:15.196 INFO SNAPSHOT_VERIFY All files in snapshot alexmbp at revision 1 have been successfully verified

2020-05-14 06:33:59.579 INFO SNAPSHOT_VERIFY All files in snapshot alexmbp at revision 133 have been successfully verified

2020-05-14 07:16:45.795 INFO SNAPSHOT_VERIFY All files in snapshot alexmbp at revision 361 have been successfully verified

2020-05-14 07:49:09.360 INFO SNAPSHOT_VERIFY All files in snapshot alexmbp at revision 588 have been successfully verified

2020-05-14 08:28:13.395 INFO SNAPSHOT_VERIFY All files in snapshot alexmbp at revision 629 have been successfully verified

2020-05-14 08:58:33.920 INFO SNAPSHOT_VERIFY All files in snapshot alexmbp at revision 635 have been successfully verified

2020-05-14 09:08:16.269 INFO SNAPSHOT_VERIFY All files in snapshot alexmbp at revision 643 have been successfully verified

2020-05-14 09:22:51.984 INFO SNAPSHOT_VERIFY All files in snapshot alexmbp at revision 644 have been successfully verified

2020-05-14 09:44:00.820 INFO SNAPSHOT_VERIFY All files in snapshot alexmbp at revision 647 have been successfully verified

2020-05-14 09:59:06.391 INFO SNAPSHOT_VERIFY All files in snapshot alexmbp at revision 650 have been successfully verified

2020-05-14 10:15:53.959 INFO SNAPSHOT_VERIFY All files in snapshot alexmbp at revision 651 have been successfully verified

2020-05-14 10:28:25.346 INFO SNAPSHOT_VERIFY All files in snapshot alexmbp at revision 652 have been successfully verified

2020-05-14 10:43:41.907 INFO SNAPSHOT_VERIFY All files in snapshot alexmbp at revision 654 have been successfully verified

2020-05-14 13:48:48.741 INFO SNAPSHOT_VERIFY All files in snapshot alexmbp at revision 657 have been successfully verified

2020-05-14 16:54:26.656 INFO SNAPSHOT_VERIFY All files in snapshot alexmbp at revision 661 have been successfully verified

2020-05-14 20:04:01.389 INFO SNAPSHOT_VERIFY All files in snapshot alexmbp at revision 666 have been successfully verified

2020-05-15 00:12:36.282 INFO SNAPSHOT_VERIFY All files in snapshot alexmbp at revision 671 have been successfully verified

2020-05-15 04:36:33.522 INFO SNAPSHOT_VERIFY All files in snapshot alexmbp at revision 674 have been successfully verified

2020-05-15 08:18:54.915 INFO SNAPSHOT_VERIFY All files in snapshot alexmbp at revision 675 have been successfully verified

2020-05-15 12:04:22.253 INFO SNAPSHOT_VERIFY All files in snapshot alexmbp at revision 676 have been successfully verified

2020-05-15 15:42:58.416 INFO SNAPSHOT_VERIFY All files in snapshot alexmbp at revision 677 have been successfully verified

2020-05-15 19:12:00.253 INFO SNAPSHOT_VERIFY All files in snapshot alexmbp at revision 678 have been successfully verified

2020-05-15 22:52:37.198 INFO SNAPSHOT_VERIFY All files in snapshot alexmbp at revision 680 have been successfully verified

2020-05-16 02:36:28.690 INFO SNAPSHOT_VERIFY All files in snapshot alexmbp at revision 681 have been successfully verified

2020-05-16 06:17:57.867 INFO SNAPSHOT_VERIFY All files in snapshot alexmbp at revision 683 have been successfully verified

2020-05-16 09:49:55.438 INFO SNAPSHOT_VERIFY All files in snapshot alexmbp at revision 684 have been successfully verified

2020-05-16 13:24:17.587 INFO SNAPSHOT_VERIFY All files in snapshot alexmbp at revision 685 have been successfully verified

2020-05-16 16:56:36.396 INFO SNAPSHOT_VERIFY All files in snapshot alexmbp at revision 688 have been successfully verified

2020-05-16 20:21:25.181 INFO SNAPSHOT_VERIFY All files in snapshot alexmbp at revision 689 have been successfully verified

2020-05-17 00:05:52.365 INFO SNAPSHOT_VERIFY All files in snapshot alexmbp at revision 690 have been successfully verified

[snip]

2020-05-20 22:31:17.817 WARN DOWNLOAD_RESURRECT Fossil 0da2a486e748da7c5c365cc4d9adecc713ab9c120a3e2a4d8d25624ad8f65e3d has been resurrected

2020-05-20 22:31:21.331 WARN DOWNLOAD_RESURRECT Fossil 2a6cd986c323b4f83432acce29ecf4acb5f35b0b5ff4c936b04f7fc5947c7adb has been resurrected

2020-05-20 22:31:21.562 WARN DOWNLOAD_RESURRECT Fossil 9da13387900f19dcd2ebe79b81e53ea710fe3f3e53d0542d92d406b8ccd7c5ae has been resurrected

2020-05-20 22:31:23.571 WARN DOWNLOAD_RESURRECT Fossil 35f1a6f3f2d8ff6f9c9c795fdbf2e700192544aa486fa2060f4f71dab78bdaa1 has been resurrected

2020-05-20 22:31:28.743 WARN DOWNLOAD_RESURRECT Fossil f628676979f9eb62f24f75479e4e241ba7a88e637effb4b5c37c722847384f81 has been resurrected

2020-05-20 22:31:28.965 WARN DOWNLOAD_RESURRECT Fossil 15f7b824e5ad7a484e269998763b5b7b615c07667d812048c0000a4fce27139a has been resurrected

2020-05-20 22:31:29.765 WARN DOWNLOAD_RESURRECT Fossil 64725de546f7bd816f70682880ff892a05069ec89961a07996b25d25517a1981 has been resurrected

2020-05-20 22:31:30.592 WARN DOWNLOAD_RESURRECT Fossil 13d912ea83b45b0953a909f92faac4fd691fca98cfc420735cb1412a789c171c has been resurrected

2020-05-20 22:31:32.129 WARN DOWNLOAD_RESURRECT Fossil 430dc385fc82143e337eb2dc34a607eea44d7934cb8b0901aeded9610220a513 has been resurrected

2020-05-20 22:31:43.300 WARN DOWNLOAD_RESURRECT Fossil 64e6642fead04a2798bec6316aab68d1bd64b85a4937507f4f4e564e099bd7b3 has been resurrected

2020-05-20 22:31:43.736 WARN DOWNLOAD_RESURRECT Fossil fb5c6d3370d5dd3d78b49cdfb26c64a2b88f7c6f88109a0f8fe2c7e8677c34ce has been resurrected

2020-05-21 02:41:45.639 INFO SNAPSHOT_VERIFY All files in snapshot alexmbp at revision 716 have been successfully verified

2020-05-21 02:41:45.653 WARN DOWNLOAD_RESURRECT Fossil 59d2d7ba17552af9c2b25cf7a9de9f18e8a94edb319de23a3d495a8d56e6190f has been resurrected

2020-05-21 02:41:45.939 WARN DOWNLOAD_RESURRECT Fossil b96d2716afad7ed0455489ef994a16508e39ea4b133bb7ca31d23805b5bebf2e has been resurrected

2020-05-21 02:41:49.413 WARN DOWNLOAD_RESURRECT Fossil 1220539548406509b5ca3b71fae8b4f58e243251a4a87029ce98822cbb1bf8ed has been resurrected

2020-05-21 02:41:49.709 WARN DOWNLOAD_RESURRECT Fossil 5a2cb7b564d9c7f4120064d85ccf8db31568933fb8126f9ff4f364cc9649fd8a has been resurrected

2020-05-21 02:41:51.651 WARN DOWNLOAD_RESURRECT Fossil 34e5a8961bb293dafd1170f85e04fddadf4ae3eb7ddd3d5ed7e46dcdf95847a5 has been resurrected

2020-05-21 02:41:56.779 WARN DOWNLOAD_RESURRECT Fossil 7a932a86ba380fc1da063315369aa15448a1825a625aa4a475c75362a88b0be2 has been resurrected

2020-05-21 02:41:58.785 WARN DOWNLOAD_RESURRECT Fossil c9772d767cb5e53dab212220cda1d7f97943a0128f2585749b8610ea0b8d60d7 has been resurrected

2020-05-21 02:42:09.359 WARN DOWNLOAD_RESURRECT Fossil 88c0ee5e2ba7eee8b29e61f957c62cc78adf53cc06277af0444c47e4191ae91c has been resurrected

2020-05-21 02:42:09.732 WARN DOWNLOAD_RESURRECT Fossil 8e90385d307cdfdfa73e5cc66d45cc29679a9a70f731f3fb16d2e1d92e8d5679 has been resurrected

2020-05-21 06:30:56.797 WARN DOWNLOAD_RESURRECT Fossil 3835f43388f2a915407996992eed4965a92fa91b2308d37337830285eabdc183 has been resurrected

2020-05-21 06:30:56.902 INFO SNAPSHOT_VERIFY All files in snapshot alexmbp at revision 717 have been successfully verified

The alexmbp snapshot has 1128 revisions, by the way.
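The log also shows the time per alexmbp revision growing from roughly a quarter of an hour to several hours. Below is a minimal Python sketch for extracting those gaps from a log like the one above. It assumes the exact `SNAPSHOT_VERIFY` line format shown, and it treats the time between consecutive `SNAPSHOT_VERIFY` lines as a rough proxy for how long each revision took to verify. `verify_durations` is a hypothetical helper, not part of Duplicacy, and any gap that spans the `[snip]` is meaningless.

```python
import re
from datetime import datetime

TS_FORMAT = "%Y-%m-%d %H:%M:%S.%f"

# Assumes the SNAPSHOT_VERIFY line format seen in the log above.
VERIFY_RE = re.compile(
    r"^(\S+ \S+) INFO SNAPSHOT_VERIFY All files in snapshot (\S+) "
    r"at revision (\d+) have been successfully verified"
)


def verify_durations(log_text):
    """Return (snapshot, revision, hours) for every SNAPSHOT_VERIFY line after the first.

    `hours` is the time since the previous SNAPSHOT_VERIFY line, used as a
    rough proxy for how long that revision took to verify.
    """
    results = []
    previous = None
    for line in log_text.splitlines():
        match = VERIFY_RE.match(line.strip())
        if not match:
            continue  # skip blank lines, WARN DOWNLOAD_RESURRECT lines, etc.
        timestamp = datetime.strptime(match.group(1), TS_FORMAT)
        if previous is not None:
            hours = (timestamp - previous).total_seconds() / 3600
            results.append((match.group(2), int(match.group(3)), round(hours, 2)))
        previous = timestamp
    return results


if __name__ == "__main__":
    # Three consecutive lines copied from the log above.
    sample = "\n".join([
        "2020-05-21 02:41:45.639 INFO SNAPSHOT_VERIFY All files in snapshot alexmbp at revision 716 have been successfully verified",
        "2020-05-21 02:41:45.653 WARN DOWNLOAD_RESURRECT Fossil 59d2d7ba17552af9c2b25cf7a9de9f18e8a94edb319de23a3d495a8d56e6190f has been resurrected",
        "2020-05-21 06:30:56.902 INFO SNAPSHOT_VERIFY All files in snapshot alexmbp at revision 717 have been successfully verified",
    ])
    for snapshot, revision, hours in verify_durations(sample):
        print(f"{snapshot} r{revision}: {hours} h")
```

For the sample it prints `alexmbp r717: 3.82 h`, the 02:41 to 06:30 gap before revision 717 was reported.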

There are no duplicacy processes running other than the web UI:

```
alex@Tuchka:~$ sudo ps -ax | grep duplicacy | grep -v grep
18377 ? Ssl 0:07 /var/services/homes/duplicacy-web/duplicacy_web_linux_x64_1.3.0
alex@Tuchka:~$
```

The storage is about 1.5 TB with roughly 300k chunks, and alexmbp has about 200k files. The Synology device Duplicacy runs on has 32 GB of RAM, with 29 GB available.

I had previously set DUPLICACY_ATTRIBUTE_THRESHOLD but have since removed it. The last time I looked, the process had consumed about 12 GB. Could it simply have exceeded some memory threshold and died?
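If the check process was killed for exhausting memory, Duplicacy itself most likely could not log anything, because the Linux OOM killer terminates the process with SIGKILL. One rough way to rule that out, sketched here in Python under the assumption that the DSM kernel uses the usual OOM-killer wording, is to search the kernel log for OOM kills naming a duplicacy process. `find_oom_kills` is a hypothetical helper; the kernel truncates process names to 15 characters, so it matches on a `duplicacy` fragment rather than the full binary name.

```python
import re
import subprocess

# Assumed OOM-killer wording (it varies between kernel versions), e.g.
#   "Out of memory: Kill process 4321 (duplicacy_linux) score 901 or sacrifice child"
#   "Killed process 4321 (duplicacy_linux) total-vm:..., anon-rss:..."
OOM_RE = re.compile(r"Kill(?:ed)? process (\d+) \(([^)]*)\)")


def find_oom_kills(kernel_log, name_fragment="duplicacy"):
    """Return sorted (pid, name) pairs for OOM-killed processes whose name contains name_fragment."""
    kills = set()
    for line in kernel_log.splitlines():
        match = OOM_RE.search(line)
        if match and name_fragment in match.group(2):
            kills.add((int(match.group(1)), match.group(2)))
    return sorted(kills)


def main():
    try:
        # dmesg may require root, and its ring buffer only holds recent messages.
        result = subprocess.run(
            ["dmesg"],
            stdout=subprocess.PIPE,
            stderr=subprocess.PIPE,
            universal_newlines=True,
        )
    except OSError as err:
        print(f"could not run dmesg: {err}")
        return
    kills = find_oom_kills(result.stdout)
    if kills:
        for pid, name in kills:
            print(f"OOM killer terminated {name} (pid {pid})")
    else:
        print("no OOM kills of duplicacy processes found in dmesg output")


if __name__ == "__main__":
    main()
```

On the NAS this would be run as root. An empty result only means no such kill is still in the kernel's message buffer, which may have rotated during a multi-day run.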

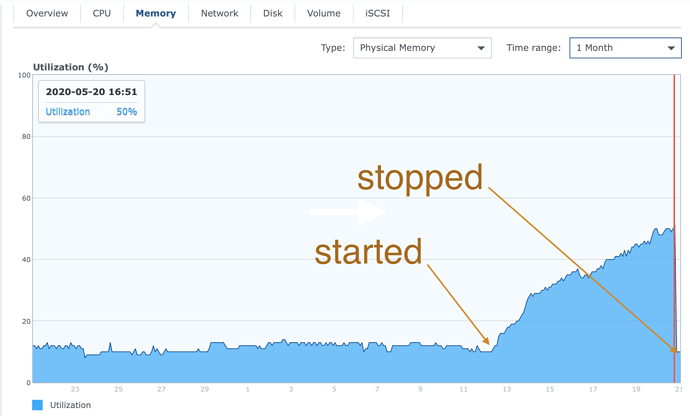

Looking at memory utilization history it never even approached the limit: