Good morning! I’ve searched through several posts but haven’t found an exact match for my situation.

ISSUE:

The quarterly disk scrub found checksum mismatches on several chunks in the backup storage. The same chunks showed up in the logs during a test run in November and again this month. The check command reports that all snapshots and revisions exist (~15TB), as shown below:

2024-12-18 07:00:02.164 INFO SNAPSHOT_CHECK Listing all chunks

2024-12-18 07:01:46.367 INFO SNAPSHOT_CHECK 5 snapshots and 79 revisions

2024-12-18 07:01:46.486 INFO SNAPSHOT_CHECK Total chunk size is 14255G in 3003109 chunks

2024-12-18 07:01:47.720 INFO SNAPSHOT_CHECK All chunks referenced by snapshot personal at revis…

I have not run the prune command to clean up any snapshots.

The check command finds all chunks, although I have not tried a full restore, and I expect several files would fail to restore because of the checksum mismatches.

Is there a way to determine which backup is affected by the corrupted files?

What is the proper method to remove the bad chunks and back up the missing data again?

I haven’t run the check command with -files or -chunks. Would that be my best option for finding the corrupted files? Before attempting anything and regretting a mistake, I thought I’d ask first.

SETUP:

Duplicacy (Web-UI) running on a Synology DS1819, backing up to a Synology DS1815 on the local network.

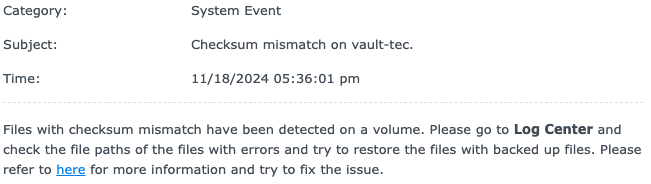

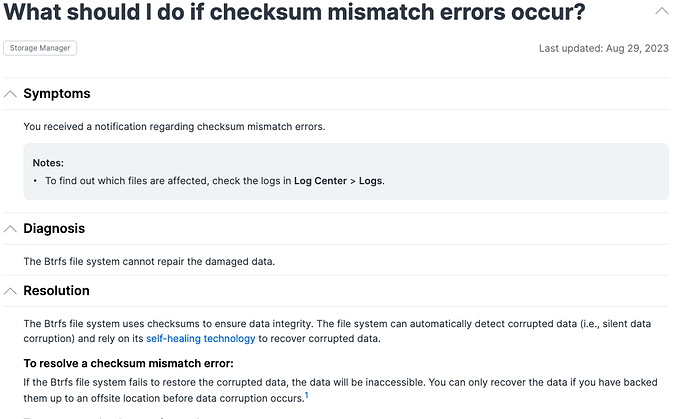

DSM LOG:

| Level | Log | Time | User | Event |
|---|---|---|---|---|
| Warning | System | 12/17/24 5:07 | SYSTEM | Checksum mismatch on file [/volume1/backup/duplicacy/chunks/fc/351ed75115572c57b9c215328c599c16a755d492eaffe450b102bdef72d29e]. |
| Warning | System | 12/17/24 4:59 | SYSTEM | Checksum mismatch on file [/volume1/backup/duplicacy/chunks/94/d27bee28af153f0f08336e86f64c2dcda0589acc44cddee9a5e4df86633c97]. |
| Warning | System | 12/17/24 4:05 | SYSTEM | Checksum mismatch on file [/volume1/backup/duplicacy/chunks/80/39f04b76a182af38fc6cfa7f3d1fb70c5f7846afd78d9ebf1268f58e967df8]. |
| Warning | System | 12/17/24 3:38 | SYSTEM | Checksum mismatch on file [/volume1/backup/duplicacy/chunks/47/d1e04e8973e22d6ca0b5d2020f11bfa6c66e7d6e6a1e769b458eb3336d70ce]. |
| Warning | System | 12/17/24 3:34 | SYSTEM | Checksum mismatch on file [/volume1/backup/duplicacy/chunks/1d/2da395baf7a0fc9d5641d5045c6c289301be5bcc5bb0ea826ff902211608e0]. |
| Warning | System | 12/17/24 3:33 | SYSTEM | Checksum mismatch on file [/volume1/backup/duplicacy/chunks/a9/a42822f836dd0cddf91692e7bf03aa583eb5c0cdecf5ffdf10f90f0061e039]. |
| Warning | System | 12/17/24 3:31 | SYSTEM | Checksum mismatch on file [/volume1/backup/duplicacy/chunks/5d/cd35d95dcd5aa6079987605f76ed7731aaad32e673236faf03963729b89079]. |
| Warning | System | 12/17/24 3:22 | SYSTEM | Checksum mismatch on file [/volume1/backup/duplicacy/chunks/81/7be8627450de2b66fa89e9a4309497c4cbbb3304b5de19249f18765c425dad]. |
| Warning | System | 12/17/24 3:15 | SYSTEM | Checksum mismatch on file [/volume1/backup/duplicacy/chunks/a1/7059369a58a8193255bfb2055f243c673453926008da9bedcdc7d6be3e3b03]. |
| Warning | System | 12/17/24 3:01 | SYSTEM | Checksum mismatch on file [/volume1/backup/duplicacy/chunks/12/a2e672bb2c1e166a7477595e77c9f578fdbdd76d86dd98189f3f8d7322f1f3]. |
| Warning | System | 12/17/24 2:42 | SYSTEM | Checksum mismatch on file [/volume1/backup/duplicacy/chunks/10/720dad9056dc2c3bc805a0798a44d4dfa5cb832cc2d582b6c2c4fd809b412c]. |
| Warning | System | 12/17/24 2:42 | SYSTEM | Checksum mismatch on file [/volume1/backup/duplicacy/chunks/90/c57ce46fb6b091da0707b365828035d7e3cf02409d7ec624a147b8c706087b]. |
| Warning | System | 12/17/24 2:36 | SYSTEM | Checksum mismatch on file [/volume1/backup/duplicacy/chunks/3a/4ce10b1a1a3507987b1b72f0d898d64f86b6c98559efccfb458bc9f73c3c9e]. |
| Warning | System | 12/17/24 2:32 | SYSTEM | Checksum mismatch on file [/volume1/backup/duplicacy/chunks/db/6bfdaa1321a670609ac8cf552bc908acd3bd123b6ec761bd1917d66df10cff]. |
| Warning | System | 12/17/24 2:04 | SYSTEM | Checksum mismatch on file [/volume1/backup/duplicacy/chunks/b6/088633d2da63120f586d446ee08124b757fe755c89e9a20a45e62ff36b258c]. |
| Warning | System | 12/17/24 1:24 | SYSTEM | Checksum mismatch on file [/volume1/backup/duplicacy/chunks/ab/e60a0b1c6a985083b45f2eb580edee619004d1c3a44237e4c455fb257862f2]. |
| Warning | System | 12/17/24 1:14 | SYSTEM | Checksum mismatch on file [/volume1/backup/duplicacy/chunks/b0/67aa775c0d98c7f4ad3b7f3d06246882386ceb1e8924a104d2c63ca6cc62bf]. |
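In case it helps with matching these paths to chunk IDs in Duplicacy's own logs, here is a minimal Python sketch that pulls the chunk IDs out of the DSM log lines above. It assumes the storage uses a single level of directory nesting, so that the two-character folder name plus the file name together form the chunk ID; I have not confirmed that for this storage. The function name `chunk_ids_from_dsm_log` is just a name made up for illustration.

```python
import re

# Matches the chunk path as it appears in the DSM "Checksum mismatch" events:
# .../chunks/<2 hex chars>/<rest of the hex file name>]
CHUNK_PATH = re.compile(r"/chunks/([0-9a-f]{2})/([0-9a-f]+)\]")


def chunk_ids_from_dsm_log(lines):
    """Return chunk IDs in order of first appearance, without duplicates.

    Assumption: chunk ID = folder name + file name (one nesting level).
    """
    seen = []
    for line in lines:
        match = CHUNK_PATH.search(line)
        if match:
            chunk_id = match.group(1) + match.group(2)
            if chunk_id not in seen:
                seen.append(chunk_id)
    return seen


if __name__ == "__main__":
    # One row copied from the DSM log above; paste the full export here instead.
    sample = [
        "| Warning | System | 12/17/24 5:07 | SYSTEM | Checksum mismatch on file "
        "[/volume1/backup/duplicacy/chunks/fc/"
        "351ed75115572c57b9c215328c599c16a755d492eaffe450b102bdef72d29e]. |",
    ]
    for chunk_id in chunk_ids_from_dsm_log(sample):
        print(chunk_id)
```

The resulting IDs could then be compared against whatever chunk IDs a later check run reports, which might narrow down which revisions reference the damaged chunks.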

Thank you for the help and I’m happy to add more detail as necessary.