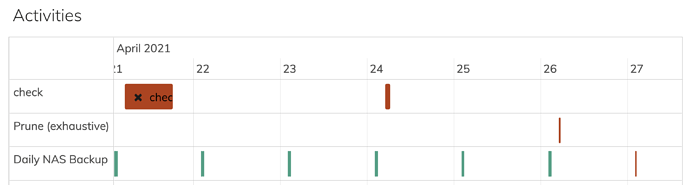

My scheduled prunes and checks have suddenly started failing, and now my backups seem to be affected as well:

The last prune failed like this:

2021-04-26 05:11:35.804 INFO PRUNE_NEWSNAPSHOT Snapshot NAS_christoph revision 162 was created after collection 3

2021-04-26 05:12:38.173 WARN DOWNLOAD_RETRY Failed to decrypt the chunk 678c1453b36e44663c330d20a2f5a93c4722180108c94fce0a5e1ef1827ae493: cipher: message authentication failed; retrying

2021-04-26 05:12:39.980 WARN DOWNLOAD_RETRY Failed to decrypt the chunk 678c1453b36e44663c330d20a2f5a93c4722180108c94fce0a5e1ef1827ae493: cipher: message authentication failed; retrying

2021-04-26 05:12:41.023 WARN DOWNLOAD_RETRY Failed to decrypt the chunk 678c1453b36e44663c330d20a2f5a93c4722180108c94fce0a5e1ef1827ae493: cipher: message authentication failed; retrying

2021-04-26 05:12:41.912 ERROR DOWNLOAD_DECRYPT Failed to decrypt the chunk 678c1453b36e44663c330d20a2f5a93c4722180108c94fce0a5e1ef1827ae493: cipher: message authentication failed

Failed to decrypt the chunk 678c1453b36e44663c330d20a2f5a93c4722180108c94fce0a5e1ef1827ae493: cipher: message authentication failed

The last check failed like this:

2021-04-24 06:13:46.340 INFO SNAPSHOT_CHECK All chunks referenced by snapshot NAS_christoph at revision 161 exist

2021-04-24 06:13:49.543 WARN DOWNLOAD_RETRY Failed to decrypt the chunk 678c1453b36e44663c330d20a2f5a93c4722180108c94fce0a5e1ef1827ae493: cipher: message authentication failed; retrying

2021-04-24 06:13:50.921 WARN DOWNLOAD_RETRY Failed to decrypt the chunk 678c1453b36e44663c330d20a2f5a93c4722180108c94fce0a5e1ef1827ae493: cipher: message authentication failed; retrying

2021-04-24 06:13:52.185 WARN DOWNLOAD_RETRY Failed to decrypt the chunk 678c1453b36e44663c330d20a2f5a93c4722180108c94fce0a5e1ef1827ae493: cipher: message authentication failed; retrying

2021-04-24 06:13:53.073 ERROR DOWNLOAD_DECRYPT Failed to decrypt the chunk 678c1453b36e44663c330d20a2f5a93c4722180108c94fce0a5e1ef1827ae493: cipher: message authentication failed

Failed to decrypt the chunk 678c1453b36e44663c330d20a2f5a93c4722180108c94fce0a5e1ef1827ae493: cipher: message authentication failed

What’s going on?

I checked the storage for the chunk that fails to decrypt and see nothing unusual: it is 887.7 kB in size and sits in the expected folder (67).
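A size and location check can't rule out corruption, though. `cipher: message authentication failed` is the error Go's `crypto/cipher` package returns when an AES-GCM authentication tag does not verify. That happens if even one byte of the encrypted data has changed, or if it is decrypted with a different key. The sketch below is only an illustration under assumptions: `chunkPath` assumes chunks are stored as `chunks/<first two hex digits>/<rest of ID>`, and `sealChunk`/`openChunk` are hypothetical helpers using plain AES-256-GCM with a prepended nonce, not the backup tool's actual chunk format.

```go
package main

import (
	"crypto/aes"
	"crypto/cipher"
	"crypto/rand"
	"errors"
	"fmt"
)

// chunkPath maps a chunk ID to a storage path. ASSUMPTION: the first two
// hex digits of the ID name the subfolder (e.g. "67" for 678c14...).
func chunkPath(id string) string {
	return "chunks/" + id[:2] + "/" + id[2:]
}

// newGCM builds an AES-GCM AEAD from a 32-byte key (AES-256).
func newGCM(key []byte) (cipher.AEAD, error) {
	block, err := aes.NewCipher(key)
	if err != nil {
		return nil, err
	}
	return cipher.NewGCM(block)
}

// sealChunk is a HYPOTHETICAL helper: it encrypts data and prepends the
// random nonce. It is not the backup tool's real chunk format.
func sealChunk(key, data []byte) ([]byte, error) {
	gcm, err := newGCM(key)
	if err != nil {
		return nil, err
	}
	nonce := make([]byte, gcm.NonceSize())
	if _, err := rand.Read(nonce); err != nil {
		return nil, err
	}
	// Output layout: nonce || ciphertext || 16-byte tag.
	ct := gcm.Seal(nil, nonce, data, nil)
	return append(nonce, ct...), nil
}

// openChunk is the HYPOTHETICAL inverse: it splits off the nonce and
// verifies the tag before returning the plaintext.
func openChunk(key, sealed []byte) ([]byte, error) {
	gcm, err := newGCM(key)
	if err != nil {
		return nil, err
	}
	ns := gcm.NonceSize()
	if len(sealed) < ns {
		return nil, errors.New("chunk too short")
	}
	return gcm.Open(nil, sealed[:ns], sealed[ns:], nil)
}

func main() {
	fmt.Println(chunkPath("678c1453b36e44663c330d20a2f5a93c4722180108c94fce0a5e1ef1827ae493"))

	key := make([]byte, 32)
	if _, err := rand.Read(key); err != nil {
		panic(err)
	}
	sealed, err := sealChunk(key, []byte("some chunk contents"))
	if err != nil {
		panic(err)
	}

	// Flip a single bit in the middle: same size, same folder, different bytes.
	corrupted := append([]byte(nil), sealed...)
	corrupted[len(corrupted)/2] ^= 0x01
	_, err = openChunk(key, corrupted)
	fmt.Println("same size:", len(corrupted) == len(sealed), "error:", err)

	// A different key fails the same way, even on intact data.
	otherKey := make([]byte, 32)
	_, err = openChunk(otherKey, sealed)
	fmt.Println("wrong key error:", err)
}
```

Running it prints the derived path, then an authentication error for both the bit-flipped copy and the wrong key, even though the corrupted copy has exactly the same size as the original.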