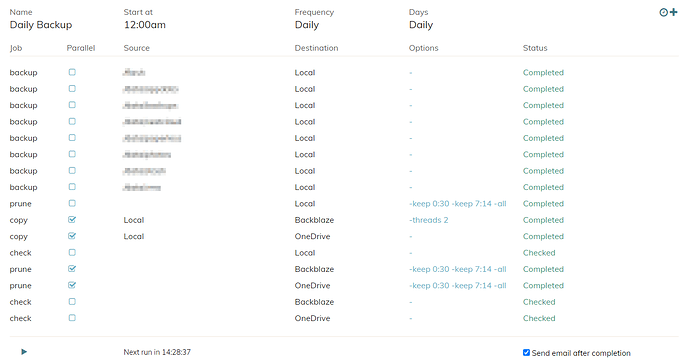

The same is true under the “unique” column as well.

The only place the chunk count differs from what I see is at the very start of the “Check” logs. Everything in the tabulated section appears identical.

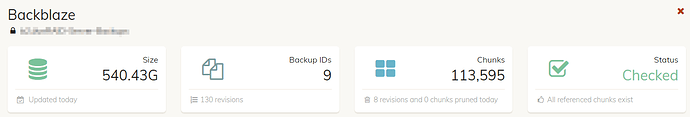

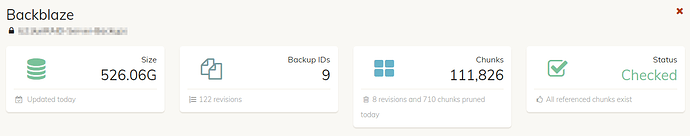

B2 shows:

2021-03-16 05:10:50.442 INFO SNAPSHOT_CHECK 9 snapshots and 122 revisions

2021-03-16 05:10:50.470 INFO SNAPSHOT_CHECK Total chunk size is 501,745M in 111872 chunks

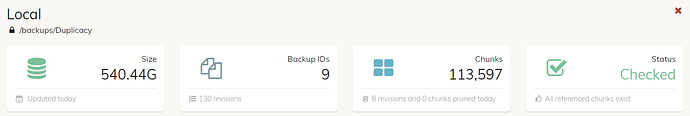

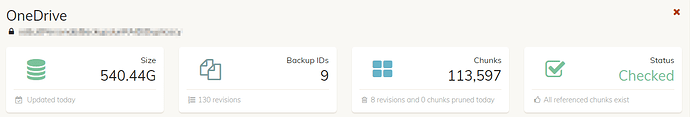

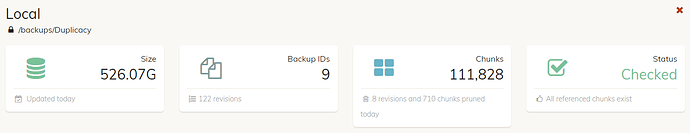

OneDrive and Local show:

2021-03-16 05:18:34.172 INFO SNAPSHOT_CHECK 9 snapshots and 122 revisions

2021-03-16 05:18:34.194 INFO SNAPSHOT_CHECK Total chunk size is 501,751M in 111874 chunks
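
To put a number on the gap between the two summary lines, here is a minimal Python sketch that pulls the size and chunk count out of each “Total chunk size” line and subtracts them. The regex and the `parse_total` helper are my own for illustration, not part of the tool, and they assume the line format is exactly as pasted above.

```python
import re

# Matches the summary line as pasted above, e.g.
# "Total chunk size is 501,745M in 111872 chunks"
TOTAL_RE = re.compile(r"Total chunk size is ([\d,]+)M in (\d+) chunks")

def parse_total(line):
    """Return (size_in_M, chunk_count) from a SNAPSHOT_CHECK total line."""
    m = TOTAL_RE.search(line)
    if m is None:
        raise ValueError("no chunk total found in line")
    size_m = int(m.group(1).replace(",", ""))  # drop the thousands separator
    chunks = int(m.group(2))
    return size_m, chunks

b2 = parse_total(
    "2021-03-16 05:10:50.470 INFO SNAPSHOT_CHECK "
    "Total chunk size is 501,745M in 111872 chunks"
)
other = parse_total(
    "2021-03-16 05:18:34.194 INFO SNAPSHOT_CHECK "
    "Total chunk size is 501,751M in 111874 chunks"
)

size_diff = other[0] - b2[0]
chunk_diff = other[1] - b2[1]
print(f"size difference: {size_diff}M, chunk difference: {chunk_diff}")
```

For the two lines above, that works out to OneDrive and Local having 2 more chunks and 6M more total chunk size than B2.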

The tabulated data is in a different order at the end, but the numbers all match up there. I copied the “all” line for each backup section and searched for it in the other log to verify that they match. Every one of them was an exact match.
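
The manual copy/paste check could also be done with a short script. This is only a sketch: it assumes each log line starts with a timestamp in the same format as the lines quoted above, and the `normalized_lines` and `diff_logs` helpers are names I made up. The “placeholder row” lines in the sample are stand-ins, not real output. It strips the timestamps and compares the two logs as unordered collections of lines, so row order does not matter.

```python
import re
from collections import Counter

# Leading timestamp as seen in the quoted lines, e.g. "2021-03-16 05:10:50.442 "
TIMESTAMP_RE = re.compile(r"^\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}\.\d{3}\s+")

def normalized_lines(log_text):
    """Count non-empty lines after removing the leading timestamp."""
    lines = []
    for raw in log_text.splitlines():
        line = TIMESTAMP_RE.sub("", raw.strip())
        if line:
            lines.append(line)
    return Counter(lines)

def diff_logs(log_a, log_b):
    """Return (lines only in A, lines only in B), ignoring line order."""
    a, b = normalized_lines(log_a), normalized_lines(log_b)
    return sorted((a - b).elements()), sorted((b - a).elements())

# Sample input: the first line is from the logs above, the rest are placeholders.
log_a = (
    "2021-03-16 05:10:50.442 INFO SNAPSHOT_CHECK 9 snapshots and 122 revisions\n"
    "placeholder row one\n"
    "placeholder row two\n"
)
log_b = (
    "2021-03-16 05:18:34.172 INFO SNAPSHOT_CHECK 9 snapshots and 122 revisions\n"
    "placeholder row two\n"
    "placeholder row one\n"
)

only_a, only_b = diff_logs(log_a, log_b)
print("only in A:", only_a)
print("only in B:", only_b)
```

Run on the full logs, this should list just the lines that differ, which from what I see would be the “Total chunk size” summary line.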