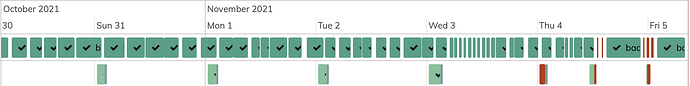

The prune on the Google Drive storage (configured via gcd_start) failed with no other diagnostic:

```
2021-11-04 01:21:17.530 INFO SNAPSHOT_DELETE Deleting snapshot obsidian-users at revision 1613
2021-11-04 01:21:18.652 INFO SNAPSHOT_DELETE Deleting snapshot obsidian-users at revision 1614
2021-11-04 01:21:19.692 INFO SNAPSHOT_DELETE Deleting snapshot obsidian-users at revision 1615
2021-11-04 01:21:20.784 INFO SNAPSHOT_DELETE Deleting snapshot obsidian-users at revision 1616
2021-11-04 01:21:21.946 INFO SNAPSHOT_DELETE Deleting snapshot obsidian-users at revision 1617
```

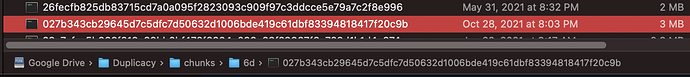

```
2021-11-04 01:38:51.853 ERROR CHUNK_FIND Chunk 6d027b343cb29645d7c5dfc7d50632d1006bde419c61dbf83394818417f20c9b does not exist in the storage
Chunk 6d027b343cb29645d7c5dfc7d50632d1006bde419c61dbf83394818417f20c9b does not exist in the storage
```

Mounting the storage with rclone, I can see that the chunk is actually present:

If that had been a Google API failure, there should have been an error in the log. Does this mean it is a Google bug, or is there some unhandled failure path in the Google Drive API library and/or Duplicacy?

Update: I ran it again with `-d`, and this time it worked correctly:

```
2021-11-04 12:00:00.734 TRACE CHUNK_FOSSILIZE The chunk 6d027b343cb29645d7c5dfc7d50632d1006bde419c61dbf83394818417f20c9b has been marked as a fossil
```

So it is indeed some intermittent backend failure that Duplicacy does not check for (either in its own code or in the library).

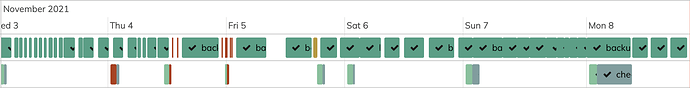

Update 2: and while it was running, a backup failed:

```
Running backup command from /Library/Caches/Duplicacy/localhost/0 to back up /Users
Options: [-log backup -storage Rabbit -vss -stats]
2021-11-04 13:00:01.976 INFO REPOSITORY_SET Repository set to /Users
2021-11-04 13:00:01.976 INFO STORAGE_SET Storage set to gcd://Duplicacy
2021-11-04 13:00:05.581 INFO BACKUP_KEY RSA encryption is enabled
2021-11-04 13:00:05.581 INFO BACKUP_EXCLUDE Exclude files with no-backup attributes
2021-11-04 13:00:06.988 ERROR SNAPSHOT_NOT_EXIST Snapshot obsidian-users at revision 1670 does not exist
Snapshot obsidian-users at revision 1670 does not exist
```