Did it. Thanks.

Thanks.

Thanks, @gchen, for a fair summary of that comparison thread. Yes, this kind of open discussion is what moves open source software forward (and what helps users make informed decisions).

Regarding the approach of trusting the backend, I wonder if anyone can give some indication of what trusting implies in practice. I suspect that, despite the fundamentally different approaches (trusting vs. not trusting), the difference in practice is not that big (because a lot has to go wrong before you actually lose data because you trusted the backend). Or maybe it is? After all, you do mention several providers where problems did occur. And those are only the known problems. If Duplicacy trusts the storage backend by default, doesn’t that already reduce the chances that such problems become known (before it is too late)?

While it is clear that trusting the backend is riskier than not trusting it, I am not in a position to assess how much riskier it is, or under what circumstances. Anyone?

Since you asked for “anyone”, here are my two cents:

There is no 100% fail-safe software or system: not Dropbox, not Duplicacy, not Duplicati, not your NAS, nor anything else.

Of course, some systems are theoretically more reliable than others, but in the real world you don’t have easy access to the data needed to confirm that reliability.

So what we have to do is take the actions under our control to minimize or mitigate the risks. The more measures we take (or the more complex they are), the safer it gets, but also the more expensive.

For example, to mitigate the risk of “trusting the backend”, I can periodically download random files and compare them with the files in the production environment. It’s cheap (free), and it reduces the risk a little.
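A minimal sketch of such a spot check, assuming you have already restored a copy of the backup into a separate folder (how you do that depends on the backup tool). It hashes a random sample of source files with SHA-256 and reports files that are missing or different in the restored copy; the function names are hypothetical.

```python
import hashlib
import random
from pathlib import Path


def sha256_of(path, block_size=1 << 20):
    """Hash a file block by block so large files need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for block in iter(lambda: f.read(block_size), b""):
            digest.update(block)
    return digest.hexdigest()


def spot_check(source_dir, restored_dir, sample_size, seed=None):
    """Compare a random sample of source files with their restored copies.

    Returns the relative paths (POSIX style) that are missing from
    restored_dir or whose SHA-256 differs from the source file.
    """
    source_dir, restored_dir = Path(source_dir), Path(restored_dir)
    rng = random.Random(seed)
    files = sorted(p for p in source_dir.rglob("*") if p.is_file())
    mismatches = []
    for original in rng.sample(files, min(sample_size, len(files))):
        rel = original.relative_to(source_dir)
        restored = restored_dir / rel
        if not restored.is_file() or sha256_of(original) != sha256_of(restored):
            mismatches.append(rel.as_posix())
    return mismatches
```

Files modified after the backup was taken will naturally show up as mismatches, so the result is easiest to interpret right after a backup run.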

Or I can spread the risk by backing up to multiple destinations (which can be more expensive, depending on how you set it up).

In an airplane, to mitigate risks, systems are redundant. An airplane has two or three parallel hydraulic systems. If one fails, the other system takes over. Extremely expensive, but necessary.

So in my opinion, it’s not a “trusting vs not trusting” issue. The question is: how much are you willing to spend to mitigate your risk?

Exactly, that is my point. To answer that question, you need to understand “your risk”, more specifically: what kind of damage can occur, how serious it is, and how likely it is to occur. In the case of the airplane, the potential damage is quite clear: people die. It is so severe that one is willing to accept very high mitigation costs, even though the probability of a hydraulic system failing (let alone two) is quite low.

So with the backups, what might happen and how likely is it? Total data loss? Partial data loss? Corrupted data, making restore a pain?

Though it is fair to mention that most cloud storage providers do have multiple redundancies across multiple data centres, so we are not really talking about data loss due to hardware failure. What we need to worry about is basically software failure, like the server returning “OK” even though the data has not been saved correctly. So this is basically what I’m after: what kinds of software failures are we up against?

The “trusting vs. not trusting” decision affects how backup software is implemented. If we determine that the storage can be trusted, we just implement a verification scheme to catch errors should they occur; otherwise we need to implement correction (like PAR2 error correction) on top of verification.
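The difference between verification (detecting a bad chunk) and correction (repairing it) can be sketched with the simplest possible redundancy: one XOR parity block over equal-length chunks, which can rebuild a single damaged chunk. This is a stand-in for illustration only; PAR2 uses Reed-Solomon codes, which can recover from more damage, and nothing here is claimed to be how Duplicacy works.

```python
import hashlib
from functools import reduce


def xor_bytes(a, b):
    return bytes(x ^ y for x, y in zip(a, b))


def make_parity(chunks):
    """One XOR parity block over equal-length chunks (RAID-5-style single parity)."""
    return reduce(xor_bytes, chunks)


def verify(chunks, expected_hashes):
    """Detection only: indices of chunks whose SHA-256 does not match."""
    return [i for i, (c, h) in enumerate(zip(chunks, expected_hashes))
            if hashlib.sha256(c).hexdigest() != h]


def repair(chunks, expected_hashes, parity):
    """Correction: rebuild at most one bad chunk from the parity and the others."""
    bad = verify(chunks, expected_hashes)
    if not bad:
        return list(chunks)
    if len(bad) > 1:
        raise ValueError("single parity can only repair one damaged chunk")
    others = [c for i, c in enumerate(chunks) if i != bad[0]]
    rebuilt = reduce(xor_bytes, others, parity)  # parity ^ (all good chunks) = missing chunk
    if hashlib.sha256(rebuilt).hexdigest() != expected_hashes[bad[0]]:
        raise ValueError("repair failed")
    fixed = list(chunks)
    fixed[bad[0]] = rebuilt
    return fixed
```

The extra parity block is the price of correction: verification alone only needs the hashes.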

I agree that, from an end user’s point of view, no system can be trusted 100%. Adding backup software as a middle layer only makes things worse if the software is poorly designed. The only weapon against failures is hash verification. There are two types of hashes in Duplicacy: chunk hashes and file hashes. With chunk hashes we know for sure that a chunk we download is the same as the one we uploaded. With file hashes, once we are able to restore a file with the correct file hash, we can be confident that we have correctly restored what was backed up. Of course, there can be some cases that hash verification may not detect (for instance, a bug in reading the file during backup), but that is very unlikely.
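The two levels of verification described above can be illustrated with a toy in-memory model. SHA-256, fixed-size splitting and a plain dict standing in for the storage are assumptions made for the sketch; Duplicacy’s actual chunking, chunk-ID derivation and hash function may differ.

```python
import hashlib


def backup(data, chunk_size, storage):
    """Split data into chunks, store each under its hash, return the file's manifest."""
    chunk_ids = []
    for start in range(0, len(data), chunk_size):
        chunk = data[start:start + chunk_size]
        chunk_id = hashlib.sha256(chunk).hexdigest()
        storage[chunk_id] = chunk  # identical chunks are stored only once
        chunk_ids.append(chunk_id)
    return {"chunks": chunk_ids, "file_hash": hashlib.sha256(data).hexdigest()}


def restore(manifest, storage):
    parts = []
    for chunk_id in manifest["chunks"]:
        chunk = storage[chunk_id]
        # Level 1: the chunk we downloaded must be the chunk we uploaded.
        if hashlib.sha256(chunk).hexdigest() != chunk_id:
            raise ValueError(f"corrupted chunk {chunk_id[:8]}")
        parts.append(chunk)
    data = b"".join(parts)
    # Level 2: the reassembled file must match the hash taken at backup time.
    if hashlib.sha256(data).hexdigest() != manifest["file_hash"]:
        raise ValueError("restored file does not match its backup-time hash")
    return data
```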

Continuing the tests …

One of my goals in using Duplicati and Duplicacy is to back up large files (several GB) that change in small parts. Examples: VeraCrypt volumes, mbox files, miscellaneous databases, and Evernote databases (basically an SQLite file).

My main concern is the growth of the space used in the backend as incremental backups are performed.

I had the impression that Duplicacy made large uploads for small changes, so I did a little test:

I created an Evernote installation from scratch and then downloaded all the notes from the Evernote servers (about 4,000 notes; the Evernote folder was then 871 MB, of which 836 MB was the database).

I did an initial backup with Duplicati and an initial backup with Duplicacy.

Results:

Duplicati: 672 MB, 115 min

Duplicacy: 691 MB, 123 min

Pretty much the same, but with some difference in size.

Then I opened Evernote, made a few minor changes to some notes (a few KB), closed Evernote, and ran the backups again.

Results:

Duplicati (from the log):

BeginTime: 21/01/2018 22:14:41

EndTime: 21/01/2018 22:19:30 (~ 5 min)

ModifiedFiles: 5

ExaminedFiles: 347

OpenedFiles: 7

AddedFiles: 2

SizeOfModifiedFiles: 877320341

SizeOfAddedFiles: 2961

SizeOfExaminedFiles: 913872062

SizeOfOpenedFiles: 877323330

and

Duplicacy (from the log):

Files: 345 total, 892,453K bytes; 7 new, 856,761K bytes

File chunks: 176 total, 903,523K bytes; 64 new, 447,615K bytes, 338,894K bytes uploaded

Metadata chunks: 3 total, 86K bytes; 3 new, 86K bytes, 46K bytes uploaded

All chunks: 179 total, 903,610K bytes; 67 new, 447,702K bytes, 338,940K bytes uploaded

Total running time: 01:03:50
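Comparing runs like these by hand gets tedious. A small parser for the summary lines could help collect them into a spreadsheet; it assumes the exact line format shown in the logs above and keeps sizes in the `K` unit Duplicacy prints, without converting them. The function name is hypothetical.

```python
import re

SUMMARY = re.compile(
    r"^(?P<kind>Files|File chunks|Metadata chunks|All chunks): "
    r"(?P<total>\d+) total, (?P<total_k>[\d,]+)K bytes; "
    r"(?P<new>\d+) new, (?P<new_k>[\d,]+)K bytes"
    r"(?:, (?P<uploaded_k>[\d,]+)K bytes uploaded)?$"
)


def parse_summary(line):
    """Turn one Duplicacy backup summary line into a dict of integers (sizes in K)."""
    m = SUMMARY.match(line.strip())
    if m is None:
        return None

    def to_int(value):
        return int(value.replace(",", "")) if value is not None else None

    result = {"kind": m["kind"]}
    for key in ("total", "total_k", "new", "new_k", "uploaded_k"):
        result[key] = to_int(m[key])
    return result
```

The `Files:` line has no "uploaded" part, so `uploaded_k` is `None` for it.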

Of course it skipped several chunks, but it still uploaded 64 new chunks out of a total of 176!

I decided to do a new test: I opened Evernote and changed one letter of the contents of one note.

And I ran the backups again. Results:

Duplicati:

BeginTime: 21/01/2018 23:37:43

EndTime: 21/01/2018 23:39:08 (~1.5 min)

ModifiedFiles: 4

ExaminedFiles: 347

OpenedFiles: 4

AddedFiles: 0

SizeOfModifiedFiles: 877457315

SizeOfAddedFiles: 0

SizeOfExaminedFiles: 914009136

SizeOfOpenedFiles: 877457343

and

Duplicacy (remembering: only one letter changed):

Files: 345 total, 892,586K bytes; 4 new, 856,891K bytes

File chunks: 178 total, 922,605K bytes; 26 new, 176,002K bytes, 124,391K bytes uploaded

Metadata chunks: 3 total, 86K bytes; 3 new, 86K bytes, 46K bytes uploaded

All chunks: 181 total, 922,692K bytes; 29 new, 176,088K bytes, 124,437K bytes uploaded

Total running time: 00:22:32

In the end, the space used in the backend (covering the 3 revisions, of course) was:

Duplicati: 696 MB

Duplicacy: 1,117 MB

That is, with these few (tiny) changes, Duplicati added 24 MB to the backend and Duplicacy 425 MB.

The only problem: even with such a simple, small backup, Duplicati showed a “warning” on the second and third runs, but when I checked the log I found:

Warnings: []

Errors: []

This seems to be a known Duplicati behavior. What worries me is getting used to ignoring the warnings and then missing a real one.

Now I’m trying to work out the technical reason for such a big difference, given how Duplicati and Duplicacy structure their backups. Any suggestions?

Interesting. I know the Evernote data directory is just a big SQLite database and a bunch of very small files. The default chunk size is 100K in Duplicati and 4M in Duplicacy. While 100K would be too small for Duplicacy, I wonder if you could run another test with the default size set to 1M:

`duplicacy init -c 1M repository_id storage_url`

Or even better, since it is a database, you can switch to the fixed-size chunking algorithm by calling this init command:

`duplicacy init -c 1M -max 1M -min 1M repository_id storage_url`

In fact, I would recommend this for backing up databases and virtual machines, as this is the default setting we used in Vertical Backup.
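Why a smaller fixed chunk size helps with databases can be sketched with a toy model. Assumptions (all hypothetical): an 8 MiB file of random bytes stands in for the database, three single-byte in-place edits stand in for page updates, chunking is purely fixed-size (what `-c 1M -min 1M -max 1M` configures), and compression, encryption and metadata chunks are ignored. The function counts how many bytes of chunk data would be new, i.e. would have to be uploaded.

```python
import hashlib
import random


def fixed_chunks(data, size):
    return [data[i:i + size] for i in range(0, len(data), size)]


def new_bytes_after_edit(old, new, size):
    """Bytes in chunks of `new` that do not already exist as chunks of `old`."""
    known = {hashlib.sha256(c).digest() for c in fixed_chunks(old, size)}
    return sum(len(c) for c in fixed_chunks(new, size)
               if hashlib.sha256(c).digest() not in known)


MiB = 1024 * 1024
SIZE = 8 * MiB
old = random.Random(42).getrandbits(8 * SIZE).to_bytes(SIZE, "little")
new = bytearray(old)
for offset in (100_000, 3_000_000, 7_000_000):  # three small in-place "page" updates
    new[offset] ^= 0xFF
new = bytes(new)

results = {size: new_bytes_after_edit(old, new, size) for size in (4 * MiB, MiB, 128 * 1024)}
for size, n in results.items():
    print(f"chunk size {size // 1024:>5}K: {n // 1024:>6}K of new chunk data")
```

Each touched chunk is re-uploaded whole, so the cost of scattered small edits scales with the chunk size: two 4M chunks here versus three 1M or three 128K chunks.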

OK! I deleted all backup files from the backend (Dropbox), and I also deleted all local configuration files (preferences, etc.).

I ran the init command with the above parameters (the second option, similar to Vertical Backup) and I’m now running the new backup. As soon as it’s finished, I’ll post the results here.

I’m following the backup and it’s really different (as expected): with the previous configuration it logged several packing steps and then one upload, and now it’s almost 1:1, one packing step and one upload.

But I noticed something: even with the chunk size fixed, the chunks still vary in size; some are 60 KB, some 500 KB, etc. None is above 1 MB. Shouldn’t they all be 1,024 KB?

Everything was going well until it started uploading the database chunks; then it stopped:

Packing Databases/.accounts

Uploaded chunk 305 size 210053, 9KB/s 1 day 03:08:51 0.8%

Packed Databases/.accounts (28)

Packing Databases/.sessiondata

Uploaded chunk 306 size 164730, 9KB/s 1 day 02:40:56 0.8%

Packed Databases/.sessiondata (256)

Packing Databases/lgtbox.exb

Uploaded chunk 307 size 231057, 9KB/s 1 day 01:58:44 0.8%

Uploaded chunk 308 size 28, 9KB/s 1 day 02:04:33 0.8%

Uploaded chunk 309 size 256, 9KB/s 1 day 02:08:27 0.8%

Uploaded chunk 310 size 1048576, 11KB/s 23:12:09 0.9%

Uploaded chunk 311 size 1048576, 12KB/s 20:56:24 1.0%

Uploaded chunk 312 size 1048576, 13KB/s 19:10:50 1.1%

Uploaded chunk 313 size 1048576, 14KB/s 17:36:14 1.3%

Uploaded chunk 314 size 1048576, 15KB/s 16:34:14 1.4%

...

...

Uploaded chunk 441 size 1048576, 61KB/s 03:23:18 15.9%

Uploaded chunk 442 size 1048576, 62KB/s 03:22:16 16.1%

Failed to upload the chunk 597ec712d6954c9d4cd2d64f38857e6b198418402d2aaef6f6c590cacb5af31d: Post https://content.dropboxapi.com/2/files/upload: EOF

Incomplete snapshot saved to C:\_Arquivos\Duplicacy\preferences\NOTE4_Admin_Evernote-dropbox/incomplete

I tried to resume the backup and got:

Incomplete snapshot loaded from C:\_Arquivos\Duplicacy\preferences\NOTE4_Admin_Evernote-dropbox/incomplete

Listing all chunks

Listing chunks/

Listing chunks/48/

Listing chunks/8e/

...

Listing chunks/03/

Listing chunks/f4/

Listing chunks/2b/

Failed to list the directory chunks/2b/:
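The `Listing chunks/48/`, `Listing chunks/8e/`, … lines above suggest that chunks are stored in subdirectories named after the first two hex digits of the chunk ID (the nested chunk structure that, according to this thread, all storages use since 2.0.10). A minimal sketch, assuming that one-level layout with the rest of the ID as the file name (an assumption, not checked against Duplicacy’s source), also shows why nesting helps: with 256 subdirectories, the 7,288 chunks of the 128K test would average about 28 per directory instead of 7,288 in one flat directory. The function names are hypothetical.

```python
def chunk_path(chunk_id, levels=1):
    """Map a hex chunk ID to a nested path such as chunks/59/7ec7... (assumed layout)."""
    parts = [chunk_id[2 * i:2 * i + 2] for i in range(levels)]
    return "/".join(["chunks", *parts, chunk_id[2 * levels:]])


def average_chunks_per_directory(total_chunks, levels=1):
    """Expected chunks per leaf directory if chunk IDs are uniformly distributed."""
    return total_chunks / (256 ** levels)
```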

I ran it again and now it’s going …

...

Skipped chunk 439 size 1048576, 19.77MB/s 00:00:38 15.8%

Skipped chunk 440 size 1048576, 19.91MB/s 00:00:37 15.9%

Skipped chunk 441 size 1048576, 20.05MB/s 00:00:37 16.1%

Uploaded chunk 442 size 1048576, 6.73MB/s 00:01:49 16.2%

Uploaded chunk 443 size 1048576, 4.45MB/s 00:02:44 16.3%

Uploaded chunk 444 size 1048576, 2.99MB/s 00:04:04 16.4%

...

The new “initial” backup has completed, and it took much longer than with the previous setup:

Backup for C:\_Arquivos\Evernote at revision 1 completed

Files: 345 total, 892,586K bytes; 344 new, 892,538K bytes

File chunks: 1206 total, 892,586K bytes; 764 new, 748,814K bytes, 619,347K bytes uploaded

Metadata chunks: 3 total, 159K bytes; 3 new, 159K bytes, 101K bytes uploaded

All chunks: 1209 total, 892,746K bytes; 767 new, 748,973K bytes, 619,449K bytes uploaded

Total running time: 02:39:20

It ended up with 1206 file chunks (versus 176 previously), as expected.

Then I opened Evernote and it synchronized a note from the server (an article I had captured this morning), which took 2 seconds and added a few KB; see the log:

14:03:13 [INFO ] [2372] [9292] 0% Retrieving 1 note

14:03:13 [INFO ] [2372] [9292] 0% Retrieving note "Automação vai mudar a carreira de 16 ..."

14:03:13 [INFO ] [2372] [9292] 0% * guid={6a4d9939-8721-4fb5-b9d1-3aa6a045266f}

14:03:13 [INFO ] [2372] [9292] 0% * note content, length=0

14:03:13 [INFO ] [2372] [9292] 0% Retrieving resource, total size=31053

14:03:13 [INFO ] [2372] [9292] 0% * guid={827bb525-071c-40f3-9c00-1e82b60f4136}

14:03:13 [INFO ] [2372] [9292] 0% * note={6a4d9939-8721-4fb5-b9d1-3aa6a045266f}

14:03:13 [INFO ] [2372] [9292] 0% * resource data, size=31053

14:03:14 [INFO ] [2372] [7112] 100% Updating local note "Automação vai mudar a carreira de 16 ...", resource count: 4, usn=0

14:03:15 [INFO ] [2372] [7112] 100% * guid={6A4D9939-8721-4FB5-B9D1-3AA6A045266F}

14:03:15 [INFO ] [2372] [7112] 100% Updating local resource "363cd3502354e5dbd4e95e61e387afe2", 31053 bytes

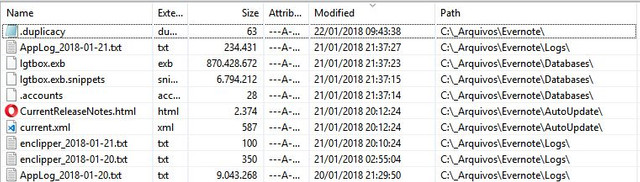

The Evernote folder, which was like this:

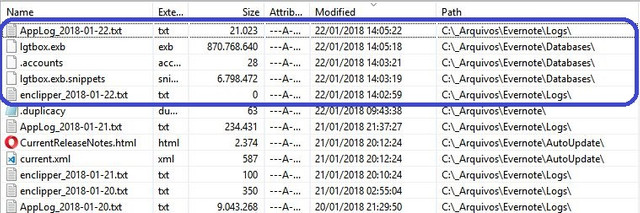

becomed like this

So I ran a new backup with Duplicati:

BeginTime: 22/01/2018 16:07:50

EndTime: 22/01/2018 16:10:50 (~ 3 min)

ModifiedFiles: 3

ExaminedFiles: 349

OpenedFiles: 5

AddedFiles: 2

SizeOfModifiedFiles: 877567112

SizeOfAddedFiles: 21073

SizeOfExaminedFiles: 914374437

SizeOfOpenedFiles: 877588213

and a new backup with Duplicacy:

Files: 346 total, 892,943K bytes; 4 new, 857,019K bytes

File chunks: 1207 total, 892,943K bytes; 74 new, 73,659K bytes, 45,466K bytes uploaded

Metadata chunks: 3 total, 159K bytes; 3 new, 159K bytes, 101K bytes uploaded

All chunks: 1210 total, 893,103K bytes; 77 new, 73,819K bytes, 45,568K bytes uploaded

Total running time: 00:14:16

A much better result than with the initial setup, but still a much longer runtime and twice as many bytes sent.

The question now is: can (should?) I push the limits and reconfigure the chunk size to 100K?

In Duplicacy the chunk size must be a power of 2, so it has to be 64K or 128K.

Generally a chunk size that is too small is not recommended for two reasons: it will create too many chunks on the storage, and the overhead of chunk transfer becomes significant. However, if the total size of your repository is less than 1G, a chunk size of 64K or 128K won’t create too many chunks. The overhead factor can be mitigated by using multiple threads.
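To make the arithmetic behind this concrete, here is a rough sketch. The power-of-two rounding follows the rule stated above; the transfer-time model (payload time limited by bandwidth, plus a fixed per-chunk request overhead that parallel threads can overlap) and its parameters are hypothetical simplifications, not measurements of Duplicacy or Dropbox.

```python
def power_of_two_neighbours(size):
    """Powers of two at or below and at or above `size` (bytes)."""
    lower = 1 << (size.bit_length() - 1)
    upper = lower if lower == size else lower << 1
    return lower, upper


def chunk_count(repo_bytes, chunk_bytes):
    """Approximate number of chunks if every chunk had the average size."""
    return -(-repo_bytes // chunk_bytes)  # ceiling division


def transfer_time(repo_bytes, chunk_bytes, bandwidth_bytes_per_s, per_chunk_overhead_s, threads=1):
    """Crude model in seconds: payload limited by bandwidth, plus a fixed
    per-chunk request overhead that parallel threads can overlap."""
    chunks = chunk_count(repo_bytes, chunk_bytes)
    return repo_bytes / bandwidth_bytes_per_s + chunks * per_chunk_overhead_s / threads


GiB = 1 << 30
for size in (64 << 10, 128 << 10, 1 << 20, 4 << 20):
    print(f"{size >> 10:>5}K chunks: {chunk_count(GiB, size):>6} chunks per GiB")
```

For a 100K target, `power_of_two_neighbours(100 * 1024)` gives the two candidates mentioned above, 64K and 128K.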

Unfortunately, Dropbox doesn’t like multiple uploading threads – it will complain about too many write requests if you attempt to do this. But, since in this test your main interest is the storage efficiency, I think you can just use a local storage and you can complete the run in minutes rather than hours.

Here is another thing you may be interested in. Could you run Evernote on another computer, download the database there, and then back up to the same storage? I’m not sure whether the databases on the two computers can be deduplicated; it depends on how Evernote creates the SQLite database, but it may be worth a try.

I think you can just use a local storage and you can complete the run in minutes rather than hours.

Nope, the idea is to do an off-site backup. I already do a local backup.

I’ll try 128k with Dropbox…

Very interesting results! Thanks a lot, towerbr, for the testing.

So the smaller chunk size leads to better results in the case of db files. But since the default chunk size is much bigger, I assume that for other types of backups the smaller chunk size will create other problems? Does this mean I should exclude db files (does anyone have a regex?) from all my backups and create a separate backup for those (possibly one for each repository)? Not very convenient, but I guess we’re seeing a clear downside of Duplicacy’s killer feature, cross-repository deduplication…

Another question concerning duplicacy’s storage use:

In the end, the space used in the backend (covering the 3 revisions, of course) was:

Duplicati: 696 MB; Duplicacy: 1,117 MB

Unless I’m misunderstanding something, that difference will be significantly reduced once you run a prune operation, right?

But then again, you would only save space at the cost of eliminating a revision compared to Duplicati. So the original comparison is fair. Ironically, this means that Duplicati is actually doing a (much) better job at deduplication between revisions…

Hm, this actually further worsens Duplicacy’s disadvantage in storage use: I believe the original tests posted on the Duplicati forum did not include multiple revisions. Now we know that the difference in storage use will actually keep growing over time (i.e. with every revision), especially when small changes are made in large files.
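A rough illustration using the two figures measured above, under the simplifying and hypothetical assumption that every future revision adds as much as the average of the two measured ones. Under that assumption each revision adds a constant amount, so the gap grows linearly with the number of revisions; the output is an extrapolation, not a measurement.

```python
def project(initial_mb, after_mb, revisions_observed, future_revisions):
    """Linear extrapolation: assume each future revision adds the observed average."""
    per_revision = (after_mb - initial_mb) / revisions_observed
    return after_mb + per_revision * future_revisions


# Figures from the Evernote test above: initial backup, then after 2 more revisions.
for n in (0, 10, 30):
    duplicati = project(672, 696, 2, n)
    duplicacy = project(691, 1117, 2, n)
    print(f"+{n:>2} revisions: Duplicati {duplicati:.0f} MB, "
          f"Duplicacy {duplicacy:.0f} MB, gap {duplicacy - duplicati:.0f} MB")
```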

Does this mean I should exclude db files (does anyone have a regex?) from all my backups and create a separate backup for those (possibly one for each repository)? Not very convenient…

Exactly! In the case of this test, I think the solution would be to run an init with the normal chunk size (4M) for the Evernote folder, excluding the database, and an add with a smaller chunk size (maybe 128K) that covers only the database via an include pattern.
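A minimal sketch of the partition described above, using Python’s `re` module. The extension list is an assumption to adapt: `.exb` appears to be the Evernote database file in the log earlier (`Databases/lgtbox.exb`), and the others are hypothetical examples. Duplicacy’s own include/exclude pattern syntax is separate and should be checked in its documentation before writing a filters file.

```python
import re

# Hypothetical list of "database-like" extensions: large files that change in place.
DB_PATTERN = re.compile(r"\.(exb|sqlite|sqlite3|db|mbox)$", re.IGNORECASE)


def split_by_pattern(paths):
    """Partition relative paths into (database-like, everything else)."""
    db = [p for p in paths if DB_PATTERN.search(p)]
    rest = [p for p in paths if not DB_PATTERN.search(p)]
    return db, rest
```

The first list would go to the small-chunk backup and the second to the default one.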

The problem is that in the case of Evernote it is very easy to separate via include/exclude patterns because there is only one SQLite file. But for other applications, with multiple databases, it is not practical. And it’s also not practical for mbox files, for example.

So, in the end, I would stick with a single configuration (128K) applied to the whole folder (without add), which is what I am testing now.

… the difference in storage use will actually keep growing over time (i.e. with every revision), especially when small changes are made in large files.

This is exactly my main concern.

Are you worried that it might be so and are testing it, or do you think it is so but are sticking to duplicacy for other reasons?

I’m testing Duplicacy and Duplicati for backing up large files. At the moment I have some jobs of each one running daily. I haven’t decided yet.

In short:

Duplicati has more features (which, on the other hand, exposes it to more points of failure) and seems to me to handle large files better (based on the performance of my jobs and this test so far). But its use of local databases and the constant warnings are its weak points.

Duplicacy seems to me to be simpler and more mature software, though somewhat harder to configure (init, add, storages, repositories, etc.). However, its handling of large-file backups is starting to worry me.

Does this mean I should exclude db files (does anyone have a regex?) from all my backups and create a separate backup for those (possibly one for each repository)? Not very convenient…

I think an average chunk size of 1M should be good enough for general cases. The decision of 4M was mostly due to the considerations to reduce the number of chunks (before 2.0.10 all the cloud storage used a flat chunk directory) and to reduce the overhead ratio (single thread uploading and downloading in version 1). Now that we have a nested chunk structure for all storages, and multi-threading support, it perhaps makes sense to change the default size to 1M. There is another use case where 1M did much better than 4M:

Duplicacy, however, was never designed to achieve the best deduplication ratio for a single repository. I knew from the beginning that by adopting a relatively large chunk size, we were going to lose the deduplication battle to competitors. But this is a tradeoff we have to make, because the main goal is cross-client deduplication, which is completely worth the loss in deduplication efficiency on a single computer. For instance, suppose that you need to back up your Evernote database on two computers: the storage savings brought by Duplicacy already outweigh the space wasted by a much larger chunk size. Even if you don’t need to back up two computers, you can still benefit from this unique feature of Duplicacy: you can seed the initial backup on a computer with a faster internet connection, and then continue to run the regular backups on the computer with a slower connection.

I also wanted to add that the variable-size chunking algorithm used by Duplicacy is actually more stable than the fixed-size chunking algorithm in Duplicati, even on a single computer. Fixed-size chunking is susceptible to deletions and insertions, so when a few bytes are added to or removed from a large file, all previously split chunks after the insertion/deletion point will be affected due to changed offsets, and as a result a new set of chunks must be created. Such files include dump files from databases and unzipped tarball files.
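The insertion effect described above can be reproduced with a small experiment. This sketch uses a Gear-style rolling hash (as in FastCDC), which is not necessarily the rolling hash Duplicacy uses, and scaled-down, hypothetical chunk sizes so that it runs quickly on 256 KiB of random data. It inserts 7 bytes near the start of the data and measures how many of the edited data’s chunks already exist in the original.

```python
import random

# Scaled-down, hypothetical parameters: roughly 2.5 KiB average chunks.
MIN_SIZE, MAX_SIZE = 512, 8192
MASK_BITS = 11
MASK = ((1 << MASK_BITS) - 1) << (64 - MASK_BITS)  # test the top 11 bits of the hash
_gear_rng = random.Random(7)
GEAR = [_gear_rng.getrandbits(64) for _ in range(256)]  # one random value per byte value


def cdc_chunks(data):
    """Content-defined chunking with a Gear-style rolling hash."""
    chunks, start, h = [], 0, 0
    for i, byte in enumerate(data):
        # Shifting left by one per byte means only the most recent 64 bytes can influence h.
        h = ((h << 1) + GEAR[byte]) & 0xFFFFFFFFFFFFFFFF
        size = i - start + 1
        if (size >= MIN_SIZE and (h & MASK) == 0) or size >= MAX_SIZE:
            chunks.append(data[start:i + 1])
            start, h = i + 1, 0
    if start < len(data):
        chunks.append(data[start:])
    return chunks


def fixed_chunks(data, size=2048):
    return [data[i:i + size] for i in range(0, len(data), size)]


def shared_fraction(before, after):
    """Fraction of the edited data's chunks that already exist in the original."""
    known = set(before)
    return sum(c in known for c in after) / len(after)


rng = random.Random(1)
original = rng.getrandbits(8 * 256 * 1024).to_bytes(256 * 1024, "little")
edited = original[:1000] + b"INSERT!" + original[1000:]  # 7 bytes inserted near the start

fixed = shared_fraction(fixed_chunks(original), fixed_chunks(edited))
cdc = shared_fraction(cdc_chunks(original), cdc_chunks(edited))
print(f"fixed-size: {fixed:.0%} of chunks reused, content-defined: {cdc:.0%}")
```

With fixed-size chunks every boundary after the insertion shifts, so essentially nothing is reused; the content-defined boundaries realign shortly after the edit. For in-place modifications that do not shift offsets, as in the database test earlier, fixed-size chunking does not suffer from this.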

you can seed the initial backup on a computer with a faster internet connection, and then continue to run the regular backups on the computer with a slower connection.

This is indeed an advantage. However, to benefit from it, the files must be almost identical on both computers, which is not the case with Evernote. But there are certainly applications for this kind of use.

The new initial backup with 128K chunks has finished. It took several hours and was interrupted several times, but I think that’s Dropbox’s “fault”.

It ended like this:

Files: 348 total, 892,984K bytes; 39 new, 885,363K bytes

File chunks: 7288 total, 892,984K bytes; 2362 new, 298,099K bytes, 251,240K bytes uploaded

Metadata chunks: 6 total, 598K bytes; 6 new, 598K bytes, 434K bytes uploaded

All chunks: 7294 total, 893,583K bytes; 2368 new, 298,697K bytes, 251,674K bytes uploaded

Interestingly, the log above shows a total size of ~890 MB, but checking the remote folder directly with Rclone shows 703 MB (?!).

What I’m going to do now is run these two jobs (Duplicati and Duplicacy) for a few days of “normal” use and track the results in a spreadsheet; then I’ll come back here and post the results.