Hi again!

I have a question about file deduplication.

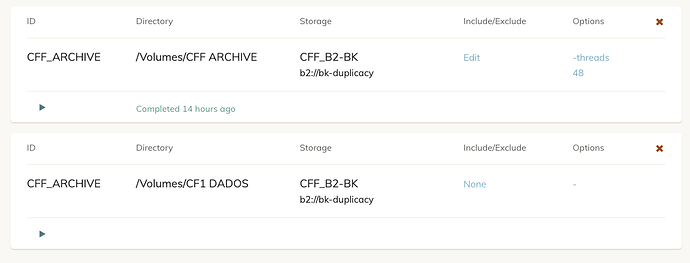

I currently work with two HDDs: Archive, for older files, and “Live”, which holds the files I’ve been working on over the past year.

I have set up one backup to B2 for Archive and another for “Live”. My question is:

When I move files from “Live” to Archive, will Duplicacy recognize that they are the same files it has already backed up?