While running a check operation recently, I received an error that a chunk was missing. I believe I’ve fixed the problem, but I’m hoping someone can review what I did and confirm that I handled it correctly.

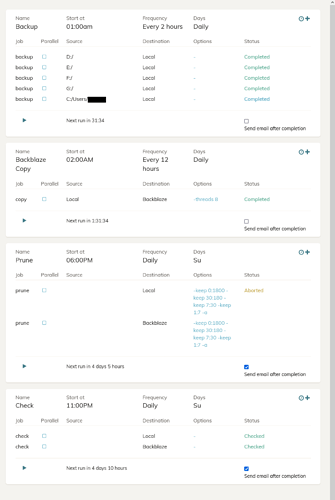

In case any of it becomes relevant, here’s how I have my operations scheduled. I’ve got several drives all backing up to a local drive pool, then that gets copied to Backblaze. On Sundays, I run a prune, followed by a check, on both backups.

The problem occurred last Sunday during the check of the local copy, when I received the error that a particular chunk was missing:

Running check command from C:\Users\username/.duplicacy-web/repositories/localhost/all

Options: [-log check -storage Local -a -tabular]

2024-04-21 23:00:01.511 INFO STORAGE_SET Storage set to Z:/

2024-04-21 23:00:01.519 INFO SNAPSHOT_CHECK Listing all chunks

2024-04-21 23:00:17.575 INFO SNAPSHOT_CHECK 6 snapshots and 800 revisions

2024-04-21 23:00:17.602 INFO SNAPSHOT_CHECK Total chunk size is 9084G in 1906697 chunks

2024-04-21 23:00:19.363 WARN SNAPSHOT_VALIDATE Chunk d863f9746fd9d987a24ee665ab0be129953f39f814c928ec4974b2368cfa4e2b referenced by snapshot MALCOLM-Video at revision 636 does not exist

2024-04-21 23:00:19.382 WARN SNAPSHOT_CHECK Some chunks referenced by snapshot MALCOLM-Video at revision 636 are missing

2024-04-21 23:00:20.642 WARN SNAPSHOT_VALIDATE Chunk d863f9746fd9d987a24ee665ab0be129953f39f814c928ec4974b2368cfa4e2b referenced by snapshot MALCOLM-Video at revision 1469 does not exist

2024-04-21 23:00:20.954 WARN SNAPSHOT_CHECK Some chunks referenced by snapshot MALCOLM-Video at revision 1469 are missing

2024-04-21 23:00:22.345 WARN SNAPSHOT_VALIDATE Chunk d863f9746fd9d987a24ee665ab0be129953f39f814c928ec4974b2368cfa4e2b referenced by snapshot MALCOLM-Video at revision 2310 does not exist

2024-04-21 23:00:22.622 WARN SNAPSHOT_CHECK Some chunks referenced by snapshot MALCOLM-Video at revision 2310 are missing

2024-04-21 23:00:23.866 WARN SNAPSHOT_VALIDATE Chunk d863f9746fd9d987a24ee665ab0be129953f39f814c928ec4974b2368cfa4e2b referenced by snapshot MALCOLM-Video at revision 3150 does not exist

2024-04-21 23:00:24.209 WARN SNAPSHOT_CHECK Some chunks referenced by snapshot MALCOLM-Video at revision 3150 are missing

2024-04-21 23:00:25.762 WARN SNAPSHOT_VALIDATE Chunk d863f9746fd9d987a24ee665ab0be129953f39f814c928ec4974b2368cfa4e2b referenced by snapshot MALCOLM-Video at revision 3990 does not exist

2024-04-21 23:00:25.889 WARN SNAPSHOT_CHECK Some chunks referenced by snapshot MALCOLM-Video at revision 3990 are missing

... a lot more references to the same chunk missing from many revisions ...

2024-04-21 23:05:27.564 WARN SNAPSHOT_CHECK Some chunks referenced by snapshot MALCOLM-Video-01 at revision 2089 are missing

2024-04-21 23:05:27.670 INFO SNAPSHOT_CHECK All chunks referenced by snapshot MALCOLM-Video-02 at revision 1 exist

2024-04-21 23:05:27.748 INFO SNAPSHOT_CHECK All chunks referenced by snapshot MALCOLM-Video-02 at revision 924 exist

... a lot more revisions whose chunks all exist ...

2024-04-21 23:12:19.263 INFO SNAPSHOT_CHECK All chunks referenced by snapshot MALCOLM-User at revision 17277 exist

2024-04-21 23:12:19.263 ERROR SNAPSHOT_CHECK Some chunks referenced by some snapshots do not exist in the storage
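Since the log repeats the same warning for many revisions, a small script can confirm how many distinct chunks are actually missing. This is only a sketch: the regular expression is modeled on the `SNAPSHOT_VALIDATE` lines pasted above, and the function name `summarize_missing_chunks` is my own, not anything provided by Duplicacy.

```python
import re
from collections import defaultdict

# Pattern modeled on the WARN SNAPSHOT_VALIDATE lines in the check log above.
MISSING_RE = re.compile(
    r"WARN SNAPSHOT_VALIDATE Chunk (?P<chunk>[0-9a-f]+) referenced by snapshot "
    r"(?P<snapshot>\S+) at revision (?P<revision>\d+) does not exist"
)


def summarize_missing_chunks(log_text):
    """Map each missing chunk ID to a sorted list of (snapshot, revision) pairs."""
    missing = defaultdict(set)
    for line in log_text.splitlines():
        match = MISSING_RE.search(line)
        if match:
            ref = (match["snapshot"], int(match["revision"]))
            missing[match["chunk"]].add(ref)
    return {chunk: sorted(refs) for chunk, refs in missing.items()}
```

Feeding it the text of the check log and printing `len(summary)` shows whether the warnings all point at a single chunk or at several.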

I referenced the following posts to fix the problem:

Here’s what I did:

- I searched all directories for the chunk. It did not exist.

- I searched for references to the chunk in all log files under \Users\username\.duplicacy-web\logs to see if it may have been deleted. Nothing showed up.

- I deleted all “cache” directories under \Users\username\.duplicacy-web. I then reran the check of the local copy. Same errors.

- I replaced the backup ID “MALCOLM-Video-01” with a new backup ID, “MALCOLM-Video-01-fix”.

- I ran the new backup, which took about 8 hours. The missing chunk was recreated, along with a few others, apparently.

Running backup command from C:\Users\username/.duplicacy-web/repositories/localhost/1 to back up E:/

Options: [-log backup -storage Local -stats]

2024-04-22 11:18:00.046 INFO REPOSITORY_SET Repository set to E:/

2024-04-22 11:18:00.046 INFO STORAGE_SET Storage set to Z:/

2024-04-22 11:18:00.055 INFO BACKUP_START No previous backup found

2024-04-22 11:18:00.055 INFO BACKUP_INDEXING Indexing E:\

2024-04-22 11:18:00.055 INFO SNAPSHOT_FILTER Parsing filter file \\?\C:\Users\username\.duplicacy-web\repositories\localhost\1\.duplicacy\filters

2024-04-22 11:18:00.055 INFO SNAPSHOT_FILTER Loaded 3 include/exclude pattern(s)

2024-04-22 19:14:18.471 INFO UPLOAD_FILE Uploaded ...

... a whole lot of files uploaded ...

2024-04-22 19:14:19.548 INFO BACKUP_END Backup for E:\ at revision 1 completed

2024-04-22 19:14:19.548 INFO BACKUP_STATS Files: 22405 total, 3550G bytes; 22403 new, 3550G bytes

2024-04-22 19:14:19.548 INFO BACKUP_STATS File chunks: 739924 total, 3550G bytes; 7 new, 58,267K bytes, 49,940K bytes uploaded

2024-04-22 19:14:19.585 INFO BACKUP_STATS Metadata chunks: 42 total, 57,871K bytes; 7 new, 11,321K bytes, 7,845K bytes uploaded

2024-04-22 19:14:19.585 INFO BACKUP_STATS All chunks: 739966 total, 3550G bytes; 14 new, 69,589K bytes, 57,786K bytes uploaded

2024-04-22 19:14:19.585 INFO BACKUP_STATS Total running time: 07:56:19
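To double-check that the chunk named in the check log is present in the storage again, it can be looked up directly instead of searching the whole drive. The sketch below assumes the chunk sits under a `chunks` folder in the storage root, with the leading hex pairs of the ID used as nested directory names, and that a chunk set aside by prune carries a `.fsl` suffix. Those layout details are assumptions on my part and are worth verifying against the actual storage. `find_chunk` and `max_levels` are hypothetical names.

```python
from pathlib import Path


def candidate_paths(storage_root, chunk_id, max_levels=2):
    """Yield plausible locations for chunk_id (assumed layout, see the note above)."""
    chunks_dir = Path(storage_root) / "chunks"
    for levels in range(max_levels + 1):
        # Use the first `levels` hex pairs as directory names, the rest as the file name.
        parts = [chunk_id[2 * i:2 * i + 2] for i in range(levels)]
        parts.append(chunk_id[2 * levels:])
        path = chunks_dir.joinpath(*parts)
        yield path
        # Assumption: a fossilized chunk keeps its name with ".fsl" appended.
        yield path.with_name(path.name + ".fsl")


def find_chunk(storage_root, chunk_id, max_levels=2):
    """Return every candidate path that exists as a file."""
    return [p for p in candidate_paths(storage_root, chunk_id, max_levels) if p.is_file()]
```

For example, `find_chunk("Z:/", "d863f9746fd9d987a24ee665ab0be129953f39f814c928ec4974b2368cfa4e2b")` returns an empty list if none of the assumed locations hold the chunk.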

- I replaced the backup ID “MALCOLM-Video-01-fix” with the original “MALCOLM-Video-01”.

- I deleted the “snapshots” folder for “MALCOLM-Video-01-fix”.

- I again deleted all “cache” directories under \Users\username\.duplicacy-web.

- I reran the check of the local copy. No more errors.

Running check command from C:\Users\username/.duplicacy-web/repositories/localhost/all

Options: [-log check -storage Local -a -tabular]

2024-04-22 20:29:27.247 INFO STORAGE_SET Storage set to Z:/

2024-04-22 20:29:27.258 INFO SNAPSHOT_CHECK Listing all chunks

2024-04-22 20:29:51.352 INFO SNAPSHOT_CHECK 6 snapshots and 821 revisions

2024-04-22 20:29:51.380 INFO SNAPSHOT_CHECK Total chunk size is 9084G in 1906864 chunks

2024-04-22 20:29:55.313 INFO SNAPSHOT_CHECK All chunks referenced by snapshot MALCOLM-Video-01 at revision 1 exist

2024-04-22 20:29:57.314 INFO SNAPSHOT_CHECK All chunks referenced by snapshot MALCOLM-Video-01 at revision 60 exist

... a lot more existing chunks ...

2024-04-22 21:07:12.770 INFO SNAPSHOT_CHECK

snap | rev | | files | bytes | chunks | bytes | uniq | bytes | new | bytes |

... file details ...

Questions:

- The chunk that was created with the same filename is guaranteed to contain the same data as the missing one, correct? (I’m curious to know how that works.)

- Should the additional chunks that were created be of any concern? (No file changes should have occurred on that drive since the last backup. It’s basically a video archive.)

- What should be my next steps? Have I forgotten anything? (I’ve kept all schedules turned off since the original error.)
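For context on the first question, here is my rough mental model of why a recreated chunk would get the same name, assuming chunk names are derived from a hash of the chunk contents. This is a generic content-addressing sketch using plain SHA-256, not Duplicacy's actual naming scheme (which may involve keys and encryption), and `chunk_name` and `store` are made-up names.

```python
import hashlib


def chunk_name(data: bytes) -> str:
    """Illustrative only: name a chunk by the SHA-256 hex digest of its contents."""
    return hashlib.sha256(data).hexdigest()


def store(storage: dict, data: bytes) -> str:
    """Save data under its content-derived name; identical data is stored only once."""
    name = chunk_name(data)
    storage.setdefault(name, data)
    return name
```

Under that model, deleting an entry and storing the same bytes again reproduces the same name, while different bytes would land under a different name. It also relies on the files being split into chunks the same way on both runs.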

Thanks for your help!