The last prune ran 275 days ago: https://i.imgur.com/lswYu1d.png

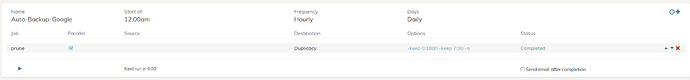

And it says the backup completed successfully, but when I checked the logs, it actually does nothing: the backup finishes in 2 seconds.

This is the whole log:

Running backup command from D:/Cache/localhost/0 to back up C:/Backup Symbolic Links

Options: [-log backup -storage Duplicacy -stats]

2020-11-13 17:33:03.293 INFO REPOSITORY_SET Repository set to C:/Backup Symbolic Links

2020-11-13 17:33:03.360 INFO STORAGE_SET Storage set to gcd://Duplicacy Backup

2020-11-13 17:33:07.382 INFO BACKUP_START Last backup at revision 4894 found

2020-11-13 17:33:07.382 INFO BACKUP_INDEXING Indexing C:\Backup Symbolic Links

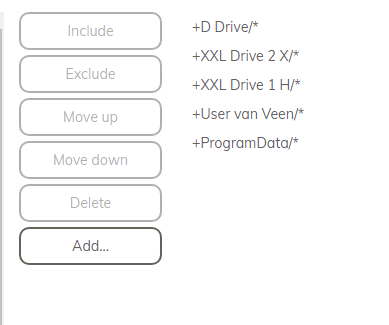

2020-11-13 17:33:07.382 INFO SNAPSHOT_FILTER Parsing filter file \?\D:\Cache\localhost\0.duplicacy\filters

2020-11-13 17:33:07.382 INFO SNAPSHOT_FILTER Loaded 8 include/exclude pattern(s)

2020-11-13 17:33:08.825 INFO BACKUP_END Backup for C:\Backup Symbolic Links at revision 4895 completed

2020-11-13 17:33:08.825 INFO BACKUP_STATS Files: 3 total, 41K bytes; 0 new, 0 bytes

2020-11-13 17:33:08.825 INFO BACKUP_STATS File chunks: 2 total, 3,971K bytes; 0 new, 0 bytes, 0 bytes uploaded

2020-11-13 17:33:08.825 INFO BACKUP_STATS Metadata chunks: 3 total, 787 bytes; 0 new, 0 bytes, 0 bytes uploaded

2020-11-13 17:33:08.825 INFO BACKUP_STATS All chunks: 5 total, 3,971K bytes; 0 new, 0 bytes, 0 bytes uploaded

2020-11-13 17:33:08.825 INFO BACKUP_STATS Total running time: 00:00:02
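The symptom can be spotted mechanically from the `BACKUP_STATS` lines above: a repository that should hold multiple TB reports only "3 total, 41K bytes". Below is a minimal sketch, assuming the log line format shown in this post and assuming the `K`/`M`/`G` suffixes are powers of 1024 (not confirmed from Duplicacy's source). The function names `parse_backup_stats` and `looks_suspicious` are hypothetical helpers, not part of Duplicacy.

```python
import re

# Matches e.g. "... INFO BACKUP_STATS Files: 3 total, 41K bytes; 0 new, 0 bytes".
# "Files:" does not match the "File chunks:" line, which is intentional.
FILES_RE = re.compile(r"BACKUP_STATS Files: ([\d,]+) total, ([\d,]+)([KMG]?) bytes")
# Matches e.g. "... INFO BACKUP_STATS Total running time: 00:00:02".
TIME_RE = re.compile(r"BACKUP_STATS Total running time: (\d+):(\d{2}):(\d{2})")

# Assumption: size suffixes are binary multiples.
UNITS = {"": 1, "K": 1024, "M": 1024 ** 2, "G": 1024 ** 3}


def parse_backup_stats(log_text):
    """Return (file_count, total_bytes, running_seconds); None for missing fields."""
    files = size = seconds = None
    for line in log_text.splitlines():
        m = FILES_RE.search(line)
        if m:
            files = int(m.group(1).replace(",", ""))
            size = int(m.group(2).replace(",", "")) * UNITS[m.group(3)]
        t = TIME_RE.search(line)
        if t:
            h, mins, s = (int(g) for g in t.groups())
            seconds = h * 3600 + mins * 60 + s
    return files, size, seconds


def looks_suspicious(log_text, min_bytes):
    """Flag a run whose indexed total is below what the repository should hold."""
    _, size, _ = parse_backup_stats(log_text)
    return size is None or size < min_bytes


if __name__ == "__main__":
    SAMPLE = (
        "2020-11-13 17:33:08.825 INFO BACKUP_STATS Files: 3 total, 41K bytes; 0 new, 0 bytes\n"
        "2020-11-13 17:33:08.825 INFO BACKUP_STATS Total running time: 00:00:02\n"
    )
    print(parse_backup_stats(SAMPLE))  # (3, 41984, 2)
    print(looks_suspicious(SAMPLE, min_bytes=1024 ** 4))  # True
```

Checking the total size rather than "0 new" is deliberate: an incremental run with no changes also uploads nothing, but its total should still reflect the full data set.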

As the repository path in the log shows, I'm using a folder of symbolic links as the backup source. I've been doing that for several years, only to find out now that it stopped working almost a year ago.
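Since only 3 files were indexed, one thing worth ruling out is whether the links in the repository folder still resolve to their targets. A minimal diagnostic sketch, assuming the links are ordinary symbolic links (for example created with `mklink /D`); `check_links` is a hypothetical helper. Directory junctions created with `mklink /J` are not reported by `Path.is_symlink()` on older Python versions (Python 3.12 added `Path.is_junction()`), so this sketch would list them as regular entries.

```python
from pathlib import Path


def check_links(repo_root):
    """Return (name, kind, target_exists) for each top-level entry of repo_root."""
    results = []
    for entry in sorted(Path(repo_root).iterdir()):
        if entry.is_symlink():
            # exists() follows the link, so a dangling link reports False.
            results.append((entry.name, "symlink", entry.exists()))
        else:
            # Junctions land here unless you also check is_junction() (3.12+).
            results.append((entry.name, "regular", True))
    return results


if __name__ == "__main__":
    for name, kind, ok in check_links(r"C:\Backup Symbolic Links"):
        print(f"{name}: {kind}, target reachable: {ok}")
```

If every link shows as reachable, the problem is more likely on the Duplicacy side (how it treats the links) than in the folder itself.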

Is there any way to get it working again with my current Google Drive backup structure, since it holds multiple TB?

Thanks!

So you might have to create this somewhere else on your Google Drive, move the chunk(s) inside, and move the folder back into the root of the
