I am looking for a backup strategy for my NAS that protects two systems from each other in case one of them is compromised or hit by ransomware.

I have an Unraid server and a Windows gaming computer. I want to keep backups of the server on the Windows computer in case the server loses data.

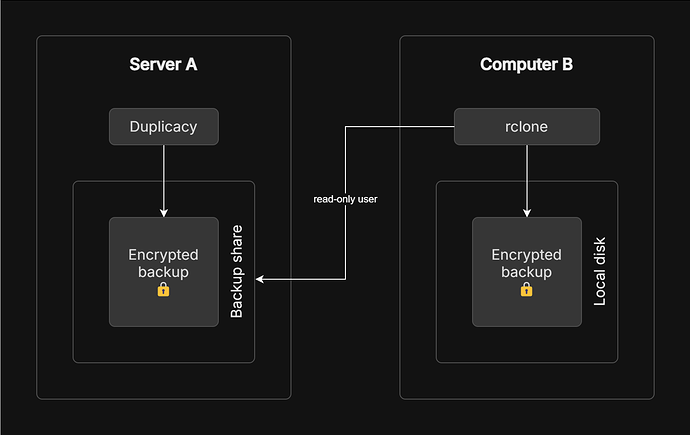

My plan so far is to have Ⓐ (the live Unraid server) run the backups with Duplicacy and let my gaming computer Ⓑ pull copies of those backups from the server.

If Ⓑ is hacked, the attacker will not be able to read the data on Ⓐ: Ⓑ only sees the encrypted backup, and decrypting it requires the encryption key, which stays on Ⓐ. The attacker might destroy data on Ⓑ, but the server data and its backups remain on Ⓐ, provided Ⓑ only has read access to the backup storage on Ⓐ.

Conversely, if Ⓐ is hacked, the attacker will not be able to read anything on Ⓑ, because the server has no access to Ⓑ at all. Likewise, the attacker might destroy data on Ⓐ, but the backups are still on Ⓑ.

This feels like a solid plan, but here is the thing I am struggling with…

How should I handle incremental transfers? Sure, Duplicacy takes care of incremental backups on Ⓐ, but I don’t want to transfer the entire backup to Ⓑ every night. It would be better to transfer only the increment. How do you do that?

Should I use rclone to incrementally sync Duplicacy’s storage folder, copying each new chunk file in /chunks/ and adding the new /snapshots/... file?
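Here is a minimal Python sketch of what the first option boils down to. It assumes that Ⓐ exposes its Duplicacy storage folder to Ⓑ as a read-only network share and that Ⓑ runs the pull after the nightly backup on Ⓐ has finished. It also relies on my understanding that Duplicacy names chunk files after their content and never rewrites them, so "copy every file Ⓑ doesn't have yet" transfers just the increment. Like `rclone copy` (and unlike `rclone sync`, which also deletes destination files that are gone from the source), it never deletes anything on Ⓑ; unlike `rclone copy`, it also never overwrites a file Ⓑ already has. That matters for the ransomware case, because a true mirror would propagate deletions or tampering on Ⓐ to Ⓑ. The function name `copy_new_files` and the choice to copy snapshot files last are my own illustration, not a Duplicacy or rclone feature.

```python
import os
import shutil
import tempfile
from pathlib import Path


def copy_new_files(src_root: Path, dst_root: Path) -> list[str]:
    """Copy files that exist under src_root but not yet under dst_root.

    Hypothetical helper: existing destination files are never overwritten and
    nothing is ever deleted, so deletions or modifications on the source do not
    propagate. Files under "snapshots/" are copied in a second pass, after all
    other files, so a snapshot file only appears once the chunks it references
    have been copied. Returns the relative paths copied, in copy order.
    """
    pending_other: list[Path] = []
    pending_snapshots: list[Path] = []
    for dirpath, _dirnames, filenames in os.walk(src_root):
        for name in filenames:
            rel = (Path(dirpath) / name).relative_to(src_root)
            if (dst_root / rel).exists():
                continue  # already copied on an earlier run; never overwrite
            if rel.parts[0] == "snapshots":
                pending_snapshots.append(rel)
            else:
                pending_other.append(rel)

    copied: list[str] = []
    for rel in sorted(pending_other) + sorted(pending_snapshots):
        target = dst_root / rel
        target.parent.mkdir(parents=True, exist_ok=True)
        # Write to a temporary file in the same directory, then rename it, so
        # an interrupted run never leaves a truncated file under the final name.
        fd, tmp = tempfile.mkstemp(dir=target.parent, prefix=".partial-")
        try:
            with os.fdopen(fd, "wb") as out, open(src_root / rel, "rb") as inp:
                shutil.copyfileobj(inp, out)
            os.replace(tmp, target)
        except BaseException:
            os.unlink(tmp)
            raise
        copied.append(rel.as_posix())
    return copied
```

On Ⓑ this might be called as `copy_new_files(Path(r"\\tower\duplicacy"), Path(r"D:\nas-backup"))`, where both paths are hypothetical. One consequence of never deleting: files that Ⓐ later removes, for example when pruning old revisions, accumulate on Ⓑ and would need separate cleanup.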

or…

should I run Duplicacy on both computers and use its copy command?