The history command seems to take a very long time. My repository has on the order of 1M files (almost all of them have only one version) and 7 TB of data.

$ time duplicacy_linux_x64_2.6.1 history path/to/file

Storage set to b2://<redacted>

download URL is: https://f001.backblazeb2.com

170: 24300027 2015-08-02 19:59:46 f38d2dae0ffbec73444acb2749404dd9cbc068c3ea59ca51936d530ac27b34e2 path/to/file

171: 24300027 2015-08-02 19:59:46 f38d2dae0ffbec73444acb2749404dd9cbc068c3ea59ca51936d530ac27b34e2 path/to/file

172: 24300027 2015-08-02 19:59:46 f38d2dae0ffbec73444acb2749404dd9cbc068c3ea59ca51936d530ac27b34e2 path/to/file

(many lines omitted)

206: 24300027 2015-08-02 19:59:46 f38d2dae0ffbec73444acb2749404dd9cbc068c3ea59ca51936d530ac27b34e2 path/to/file

207: 24300027 2015-08-02 19:59:46 f38d2dae0ffbec73444acb2749404dd9cbc068c3ea59ca51936d530ac27b34e2 path/to/file

current: 24300027 2015-08-02 19:59:46 path/to/file

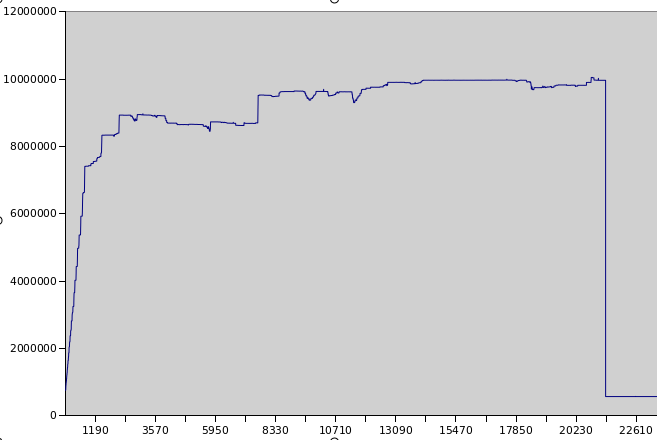

real 412m39.805s

user 325m38.754s

sys 4m38.362s
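To put those numbers in perspective, here is a minimal sketch that converts the three `time` values above into seconds and compares CPU time with wall-clock time. The helper name `to_seconds` is made up for illustration, and it assumes the `NNNmSS.SSSs` format that bash's `time` printed here.

```python
import re

def to_seconds(stamp: str) -> float:
    """Convert a bash `time` value such as '412m39.805s' into seconds."""
    match = re.fullmatch(r"(\d+)m(\d+(?:\.\d+)?)s", stamp.strip())
    if match is None:
        raise ValueError(f"unrecognized time format: {stamp!r}")
    minutes, seconds = match.groups()
    return int(minutes) * 60 + float(seconds)

# Values copied from the output above.
real = to_seconds("412m39.805s")
user = to_seconds("325m38.754s")
sys_ = to_seconds("4m38.362s")

wall_hours = real / 3600          # elapsed wall-clock time in hours
cpu_share = (user + sys_) / real  # fraction of wall time spent on CPU

print(f"wall clock: {wall_hours:.2f} h")
print(f"CPU time / wall time: {cpu_share:.0%}")
```

By this arithmetic the run took roughly 6.9 hours, and user plus sys time comes to about 80% of the wall-clock time. That might mean most of the time went to local processing rather than waiting on the network, but that is only an inference from the timings.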

Nearly 7 hours to get the history of one file that hasn’t changed seems like a very long time! Is this a quirk of the Backblaze B2 backend or a general problem? Also, how much $$ did I just add to my B2 bill by doing that?
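For what it's worth, the "hasn't changed" claim can be checked mechanically from the output itself. This is a rough sketch that groups revisions by hash. It assumes each history line has the shape `<revision>: <size> <date> <time> <hash> <path>`, as in the output pasted above. The function name is hypothetical, and the sample contains only the lines shown in this post.

```python
import re
from collections import defaultdict
from typing import Dict, List

# Assumed line shape, taken from the output pasted above:
# "<revision>: <size> <date> <time> <64-hex-char hash> <path>"
HISTORY_LINE = re.compile(
    r"^(\d+): (\d+) (\d{4}-\d{2}-\d{2}) (\d{2}:\d{2}:\d{2}) ([0-9a-f]{64}) (.+)$"
)

def group_revisions_by_hash(output: str) -> Dict[str, List[int]]:
    """Map each file hash to the list of revisions that contain it."""
    groups: Dict[str, List[int]] = defaultdict(list)
    for line in output.splitlines():
        match = HISTORY_LINE.match(line.strip())
        if match:  # skips blank lines, log lines, and the "current:" line
            groups[match.group(5)].append(int(match.group(1)))
    return dict(groups)

sample = """
170: 24300027 2015-08-02 19:59:46 f38d2dae0ffbec73444acb2749404dd9cbc068c3ea59ca51936d530ac27b34e2 path/to/file
171: 24300027 2015-08-02 19:59:46 f38d2dae0ffbec73444acb2749404dd9cbc068c3ea59ca51936d530ac27b34e2 path/to/file
172: 24300027 2015-08-02 19:59:46 f38d2dae0ffbec73444acb2749404dd9cbc068c3ea59ca51936d530ac27b34e2 path/to/file
206: 24300027 2015-08-02 19:59:46 f38d2dae0ffbec73444acb2749404dd9cbc068c3ea59ca51936d530ac27b34e2 path/to/file
207: 24300027 2015-08-02 19:59:46 f38d2dae0ffbec73444acb2749404dd9cbc068c3ea59ca51936d530ac27b34e2 path/to/file
current: 24300027 2015-08-02 19:59:46 path/to/file
"""

groups = group_revisions_by_hash(sample)
for file_hash, revisions in groups.items():
    print(f"{file_hash[:12]}...: {len(revisions)} revisions "
          f"({revisions[0]} to {revisions[-1]})")
```

A single hash in the result means every listed revision holds identical content, which is the case for the lines shown here.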

Related: is there a better way to find a file or probe the existence of a file?