I am new to Duplicacy and am setting up my initial backups to my GSuite storage.

I have configured 6 backup jobs (about 17.5 TB in total) that I am running individually for now. I plan to add them to a daily schedule once I get past this initial hurdle.

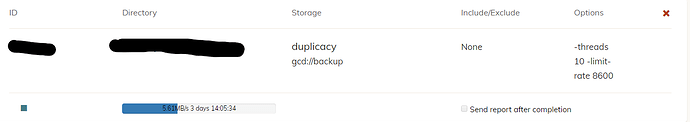

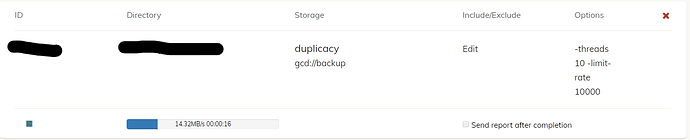

Only 2 of the backups are multi-terabyte. My initial attempts failed because I was hitting the daily upload cap; the upload was running at about 16-18 MB/sec. I did the math and figured that if I limited the upload to 8.68 MB/sec, I would come in just under the daily limit and still finish as fast as possible.
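
For anyone checking the numbers, here is the arithmetic as a rough Python sketch. It assumes the daily cap is 750 GB in decimal units, which is the value that 8.68 MB/sec works out to. The cap constant and the helper names are my own, and I have not confirmed whether limit-rate counts a kilobyte as 1000 or 1024 bytes, so both conversions are shown.

```python
SECONDS_PER_DAY = 24 * 60 * 60

# Assumption: daily upload cap of 750 GB (decimal), inferred from the 8.68 MB/sec figure.
DAILY_CAP_BYTES = 750 * 10**9


def max_rate_bytes_per_sec(daily_cap_bytes: float) -> float:
    """Highest sustained rate that stays within the cap over a full 24 hours."""
    return daily_cap_bytes / SECONDS_PER_DAY


def days_to_upload(total_bytes: float, rate_bytes_per_sec: float) -> float:
    """Days needed to upload total_bytes at a constant rate."""
    return total_bytes / (rate_bytes_per_sec * SECONDS_PER_DAY)


rate = max_rate_bytes_per_sec(DAILY_CAP_BYTES)
print(f"{rate / 1e6:.2f} MB/sec")             # 8.68 MB/sec
print(f"{rate / 1000:.0f} KB/sec (1000 B)")   # 8681
print(f"{rate / 1024:.0f} KiB/sec (1024 B)")  # 8477

total = 17.5 * 10**12  # about 17.5 TB across all jobs
print(f"{days_to_upload(total, rate):.1f} days at the cap")
print(f"{days_to_upload(total, 5.585e6):.1f} days at the observed rate")
```

If the setting is in 1024-byte units, 8600 would sit slightly above the cap rather than below it, so a value under 8477 would be the safer choice in that case.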

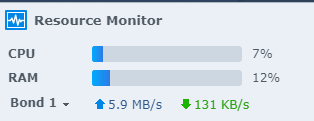

So I used a limit-rate of 8600 on the backup, just to be safe. However, the backup is running at a very stable 5.58-5.59 MB/sec, well below that limit. Any ideas?