Preface: I’ve read both "Cache usage details" and "Cache folder is is extremely big! 😱".

Both seem to imply that cache usage only grows across subsequent runs, since the cache is not cleared until a prune is run. This thread is about limiting cache usage within a single run.

Running duplicacy 2.5.1 (51CBF7)

I recently ran `duplicacy check -chunks` to verify that my backups had made it to the remote storage without corruption:

```
duplicacy check -chunks -threads 8
Repository set to /foo
Storage set to gcd://backups/duplicacy/foo
Listing all chunks
2 snapshots and 5 revisions
Total chunk size is 5173G in 1100701 chunks
All chunks referenced by snapshot foo at revision 1 exist
All chunks referenced by snapshot bar at revision 1 exist
All chunks referenced by snapshot bar at revision 2 exist
All chunks referenced by snapshot bar at revision 3 exist
All chunks referenced by snapshot bar at revision 4 exist
Verifying 1097648 chunks
```

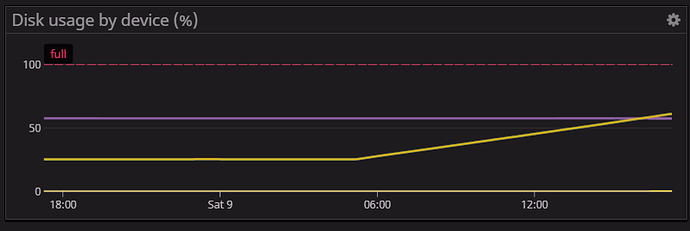

However, after a few hours I noticed that disk usage was growing at an alarming rate; it is currently sitting at around 100GB.

Digging a bit deeper, it looks like duplicacy is caching every chunk to disk:

```
/foo/.duplicacy # du -sh cache/
101.1G cache/

/foo/.duplicacy/cache/default # find chunks -type f | wc -l
20312
```

At the current rate of growth, it looks like running `duplicacy check -chunks` requires enough disk space to store the whole backup (or at least it will attempt to cache the whole backup on disk).
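A quick back-of-the-envelope check supports this, using only the numbers already shown in the `check`, `du`, and `find` output. This is a rough linear extrapolation in Python; it assumes one cached file per verified chunk and that the remaining chunks are of similar size to the ones cached so far.

```python
# Figures copied from the command output above (units as printed by the tools).
total_size_g = 5173           # "Total chunk size is 5173G in 1100701 chunks"
total_chunks = 1_100_701
chunks_to_verify = 1_097_648  # "Verifying 1097648 chunks"
cache_size_g = 101.1          # du -sh cache/  -> 101.1G
cached_chunks = 20_312        # find chunks -type f | wc -l  -> 20312

# Average chunk size in the storage vs. in the local cache (taking 1G = 1024M).
avg_storage_m = total_size_g * 1024 / total_chunks
avg_cache_m = cache_size_g * 1024 / cached_chunks

# Fraction of the verification reached so far, assuming one cached
# file per verified chunk.
progress = cached_chunks / chunks_to_verify

# Linear extrapolation: cache size once every chunk has been verified.
projected_cache_g = cache_size_g / progress

print(f"average chunk size: {avg_storage_m:.2f}M (storage), {avg_cache_m:.2f}M (cache)")
print(f"progress: {progress:.2%}")
print(f"projected cache size: {projected_cache_g:.0f}G vs. {total_size_g}G of chunks")
```

With these numbers the run is roughly 1.85% done and the cache extrapolates to about 5,460G, which is on the order of the entire 5173G of chunks in the storage.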

My assumption is that chunk verification shouldn’t need to keep more than n threads’ worth of chunks on disk at a time, and might not need to write them to disk at all if they’re sufficiently small (the default chunk size is under 16M, which should fit in memory easily).
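To make the bounded-memory idea concrete, here is a minimal Python sketch of what I have in mind. It is not how Duplicacy works internally: `InMemoryStorage`, `verify_chunks`, and `peak_memory_bound` are hypothetical names, the "storage" is just a dict, and using the SHA-256 hex digest of a chunk's content as its ID is a simplifying assumption, not necessarily Duplicacy's ID scheme. The 16M figure is the default chunk size limit mentioned above.

```python
import hashlib
import threading
from concurrent.futures import ThreadPoolExecutor

# Assumed default maximum chunk size (16M); used only for the memory estimate.
MAX_CHUNK_SIZE = 16 * 1024 * 1024


class InMemoryStorage:
    """Hypothetical stand-in for the remote storage: chunk ID -> chunk bytes."""

    def __init__(self, chunks):
        self._chunks = chunks

    def download(self, chunk_id):
        return self._chunks[chunk_id]


def peak_memory_bound(threads, max_chunk_size=MAX_CHUNK_SIZE):
    """Upper bound on chunk data held at once: one chunk per worker thread."""
    return threads * max_chunk_size


def verify_chunks(storage, chunk_ids, threads=8):
    """Verify every chunk without writing anything to disk.

    Each worker downloads one chunk into memory, checks it, and drops it,
    so at most `threads` chunks are held at the same time.
    Returns (IDs that failed verification, peak number of chunks in flight).
    """
    lock = threading.Lock()
    in_flight = 0
    peak = 0
    failures = []

    def verify_one(chunk_id):
        nonlocal in_flight, peak
        with lock:
            in_flight += 1
            peak = max(peak, in_flight)
        try:
            data = storage.download(chunk_id)
            # Simplifying assumption: a chunk's ID is the SHA-256 of its content.
            if hashlib.sha256(data).hexdigest() != chunk_id:
                with lock:
                    failures.append(chunk_id)
        finally:
            with lock:
                in_flight -= 1
        # The local `data` is released when this call returns; nothing touches disk.

    with ThreadPoolExecutor(max_workers=threads) as pool:
        list(pool.map(verify_one, chunk_ids))
    return failures, peak


if __name__ == "__main__":
    blobs = [bytes([i]) * 1024 for i in range(100)]
    chunks = {hashlib.sha256(b).hexdigest(): b for b in blobs}
    bad_id = hashlib.sha256(b"original").hexdigest()
    chunks[bad_id] = b"corrupted"  # simulate one corrupted chunk
    failures, peak = verify_chunks(InMemoryStorage(chunks), list(chunks), threads=8)
    print("failed:", failures)
    print("peak chunks in memory:", peak)
    print("bound (MiB):", peak_memory_bound(8) // (1024 * 1024))
```

With `-threads 8` and 16M chunks, that bound works out to 128MiB of chunk data in flight, regardless of how large the storage is.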

Is there any way to stop duplicacy from caching the chunks (and therefore my whole backup) on disk? Or, failing that, a way to ask it to clean up each file as soon as it no longer needs it, to minimize the amount of storage needed to run the command?
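For the "clean up as soon as it's no longer needed" option, a minimal Python sketch of the idea: stage each chunk in a temporary file, verify it, and delete the file immediately, so the scratch space never holds more than the chunk currently being checked. This is an illustration of the behavior I'm asking about, not Duplicacy's implementation; `verify_via_scratch_file` and `verify_all` are hypothetical names, and treating a chunk's ID as the SHA-256 of its content is a simplifying assumption.

```python
import hashlib
import os
import tempfile


def verify_via_scratch_file(chunk_id, data, scratch_dir):
    """Stage one chunk on disk, verify it, and delete it before returning.

    NamedTemporaryFile (delete=True by default) removes the file as soon as
    the `with` block exits, so the chunk never outlives its own check.
    """
    with tempfile.NamedTemporaryFile(dir=scratch_dir) as tmp:
        tmp.write(data)
        tmp.flush()
        tmp.seek(0)
        # Simplifying assumption: a chunk's ID is the SHA-256 of its content.
        return hashlib.sha256(tmp.read()).hexdigest() == chunk_id


def verify_all(chunks, scratch_dir):
    """Check chunks one at a time; return the IDs that failed."""
    return [cid for cid, data in chunks.items()
            if not verify_via_scratch_file(cid, data, scratch_dir)]


if __name__ == "__main__":
    blobs = [bytes([i]) * 4096 for i in range(20)]
    chunks = {hashlib.sha256(b).hexdigest(): b for b in blobs}
    with tempfile.TemporaryDirectory() as scratch:
        failures = verify_all(chunks, scratch)
        print("failed:", failures)
        print("files left in scratch dir:", len(os.listdir(scratch)))
```

Run sequentially like this, at most one chunk is on disk at a time; with n worker threads the same pattern would cap disk usage at n chunks.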


I think it’s somewhat unreasonable to expect a single system to have enough disk space to hold the entire backup storage, especially when many systems back up to the same location to take advantage of deduplication.