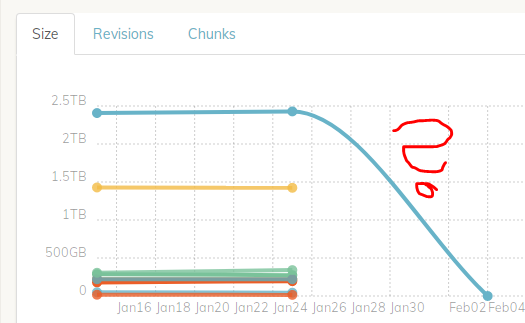

Guys, what is this graph trying to tell me? That my local backups disappeared? They still exist.

Ahh. For whatever reason, the system stopped updating the graph on 01-24.

Have you run a check recently?

Yeah, that's correct, on 01-25.

It's weird that it produces such a funky graph, but try running the checks again to see if that fixes it. How often do you run the checks?

Can you check the log from the check job on 02-04?

I've seen something similar in my own graphs. I haven't tried hard to reproduce it, but it seems to happen when a check job starts and finishes while a backup is still running, or it is something else related to the relative timing of the check and backup jobs.

Oh, sorry, I can't find the log quickly. I'll be away for two weeks and may look into this after that.

@gchen I got this to happen in the following scenario (probably not a minimal example):

1. Backups and check for storage A both run and complete.

2. Backups for storage B complete.

3. Check for storage B is still in progress.

In this scenario, I don't think the graphs for storage B will be correct until the check job from the third step completes.

Hello @gchen

I have also started experiencing this issue since I stopped running daily checks.

I’m a new user running Duplicacy in a Docker container on Unraid.

My initial setup was two backups in one scheduled job, followed by a check in a separate, later scheduled job.

I realised the lengthy checks weren't really needed daily, so I stopped running them, and that's when I noticed that the storage graph had dropped to zero.

Running a check resolves this. Running another backup does not.

I did add a prune to the end of the backup job when I stopped the checks, which muddies things a little. Can I be of any help in testing this or providing logs?

Rich.

For me, this happened recently (see the thread "Missing chunk, can't figure out why"), seemingly after a check failed on the storage.

(It seems as if a failed check causes a default value to be returned for the storage? That would be surprising, since distinguishing the lack of a value from a zero value is one of the things Go tried to address. :))
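To illustrate the Go point: a map lookup with the comma-ok idiom lets code tell "no result" apart from "a result of zero", so a missing check result need not be plotted as 0. This is a purely hypothetical sketch; `storageStats`, `latestStats`, `statsFor` and `describe` are invented names and are not Duplicacy's actual code or how its web UI builds the graphs.

```go
package main

import "fmt"

// storageStats is a HYPOTHETICAL record of what a check job might report for one storage.
type storageStats struct {
	TotalChunks int64
	TotalBytes  int64
}

// latestStats is a HYPOTHETICAL map from storage name to its latest successful check result.
// A storage whose check failed or never ran simply has no entry.
var latestStats = map[string]storageStats{
	"storage-a": {TotalChunks: 1200, TotalBytes: 5 << 30},
}

// statsFor uses the comma-ok idiom: ok is false when there is no entry,
// instead of silently handing back the zero value storageStats{}.
func statsFor(name string) (storageStats, bool) {
	s, ok := latestStats[name]
	return s, ok
}

// describe reports "no data" for a missing result rather than printing zeros.
func describe(name string) string {
	s, ok := statsFor(name)
	if !ok {
		// No result available: skip the data point instead of plotting 0.
		return fmt.Sprintf("%s: no data", name)
	}
	return fmt.Sprintf("%s: %d chunks, %d bytes", name, s.TotalChunks, s.TotalBytes)
}

func main() {
	fmt.Println(describe("storage-a"))
	fmt.Println(describe("storage-b"))
}
```

A plain `latestStats["storage-b"]` would return `storageStats{}` with both fields at 0, which is exactly the kind of value that would make a graph drop to zero.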

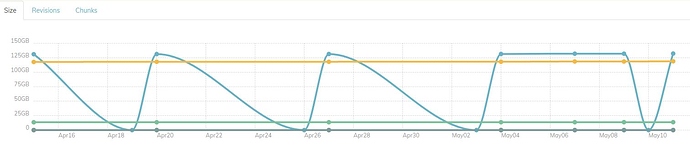

This happens on my systems all the time, but the graphs seem to fix themselves after a while.

Thanks, Saspus and Bitrot.

I've left things as they are for now (4 days) to see whether the graphs fix themselves too. I'll keep an eye on it and collect a before-and-after set of logs if the graph does correct itself.