Hi guys,

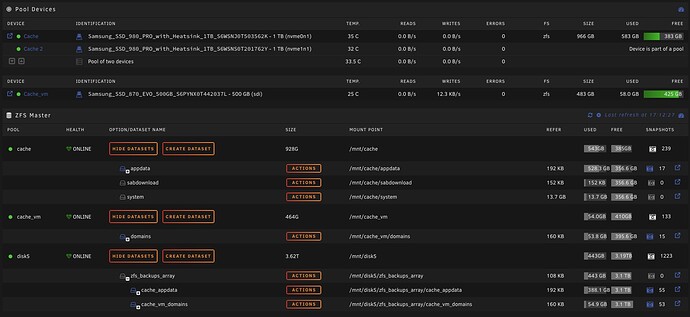

I use User Scripts to prepare/back up appdata (Dockers) and domains (VMs), and then I use Duplicacy to make the backups. In Duplicacy I can then restore different versions from a dropdown. This way I can restore a specific Docker/VM back to Unraid.
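After Duplicacy has restored one of the archives, the last step is unpacking it. A rough sketch of that step, assuming a .tar.gz like the ones the scripts below produce. `restore_archive` and its arguments are hypothetical names, not part of Duplicacy or Unraid. GNU tar drops the leading slash and stores the full path (mnt/user/backups/appdatabackups/NAME/...), so the number of leading path components to strip is passed in.

```bash
#!/bin/bash

# Hypothetical helper: unpack one restored archive into a destination folder.
# Usage: restore_archive ARCHIVE DEST [STRIP]
#   STRIP = number of leading path components to drop (default 0)
restore_archive() {
    local archive=$1 dest=$2 strip=${3:-0}
    mkdir -p "$dest" || return 1
    # -C unpacks into DEST; --strip-components removes the stored path prefix
    tar -xzf "$archive" -C "$dest" --strip-components="$strip"
}
```

For an appdata archive made by the script below, that would be something like `restore_archive /path/to/NAME.tar.gz /mnt/user/appdata 4` (mnt, user, backups and appdatabackups are the four components), with the container stopped first. The archive path here is only a placeholder.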

I’m a newbie at Duplicacy, Unraid, rsync and tar, so maybe this is totally wrong, but it works for me.

Please tell me if you have any ideas on how to improve it (or maybe tell me to scrap it all).

DOCKERS

```bash
#!/bin/bash

# Get the names of all running containers and store them in CONTAINERS
mapfile -t CONTAINERS < <(docker ps --format '{{.Names}}')

# Show in the script log
echo "Appdata backup started - Starting to shut down dockers"

# Show notification in Unraid (alert, warning, normal)
/usr/local/emhttp/webGui/scripts/notify -s "Notice [Script] - Appdata backup started" -i "normal" -d "Starting to shut down dockers"

# Stop all running containers
for container in "${CONTAINERS[@]}"; do
    echo "Stopping ${container}..."
    docker stop "${container}" 1> /dev/null
done

# Show in the script log
echo "Dockers shut down. Starting backup"

# Show notification in Unraid (alert, warning, normal)
/usr/local/emhttp/webGui/scripts/notify -s "Warning [Script] - Appdata backup running" -i "warning" -d "Dockers shut down. Starting backup"

# Back up appdata
echo "Backing up /mnt/user/appdata/"
rsync -a --delete /mnt/user/appdata/ /mnt/user/backups/appdatabackups/

# Show notification in Unraid (alert, warning, normal)
/usr/local/emhttp/webGui/scripts/notify -s "Warning [Script] - Appdata backup running" -i "warning" -d "Backup done. Starting dockers"

# Start all containers that were previously running
for container in "${CONTAINERS[@]}"; do
    echo "Starting ${container}..."
    docker start "${container}" 1> /dev/null
done

# Show in the script log
echo "Dockers started. Starting to tar files"

# Show notification in Unraid (alert, warning, normal)
/usr/local/emhttp/webGui/scripts/notify -s "Warning [Script] - Appdata backup running" -i "warning" -d "Dockers started. Starting to tar files"

# Compress each appdata folder and remove the original folders
find /mnt/user/backups/appdatabackups/ -mindepth 1 -maxdepth 1 -type d -exec tar zcvf {}.tar.gz {} --remove-files \;

# Show in the script log
echo "Appdata backup done - Ready to do Duplicacy backup"

# Show notification in Unraid (alert, warning, normal)
/usr/local/emhttp/webGui/scripts/notify -s "Notice [Script] - Appdata backup done" -i "normal" -d "Ready to do Duplicacy backup"
```
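One thing I am not sure about is the tar step: because find hands tar the full path, every archive contains mnt/user/backups/appdatabackups/NAME/... instead of just NAME/.... A possible variation, only as a sketch: `archive_subdirs` is a made-up helper name, and it swaps `--remove-files` for an `rm -rf` that runs only after tar reports success.

```bash
#!/bin/bash

# Hypothetical helper: archive every top-level folder inside a directory,
# storing paths relative to that directory, then remove the original folder.
# Note: unlike the find command, this glob skips hidden folders (.name).
archive_subdirs() {
    local backup_dir=${1%/}
    local dir name
    for dir in "$backup_dir"/*/; do
        [[ -d "$dir" ]] || continue   # no subfolders: the glob stays unexpanded
        dir=${dir%/}
        name=${dir##*/}
        # -C switches into backup_dir first, so the archive holds "name/..." only
        tar -czf "$backup_dir/$name.tar.gz" -C "$backup_dir" -- "$name" || return 1
        # Remove the folder only after tar succeeded
        rm -rf -- "${backup_dir:?}/$name"
    done
}

# Example call, using the path from the script above:
# archive_subdirs /mnt/user/backups/appdatabackups
```

Archives made this way unpack straight to NAME/ with a plain `tar -xzf NAME.tar.gz -C /some/target`, with no path components to strip.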

VMs

```bash
#!/bin/bash

# Empty the list of VMs that were running
: > /tmp/vms-running.txt

# Show in the script log
echo "VMs backup started - Starting to shut down VMs"

# Show notification in Unraid (alert, warning, normal)
/usr/local/emhttp/webGui/scripts/notify -s "Notice [Script] - VMs backup started" -i "normal" -d "Starting to shut down VMs"

# Get all running VMs (one name per line, so names with spaces stay intact)
mapfile -t RUNNING_VMS < <(virsh list --state-running --name)

# Shut down every running VM except VM-TO-NOT-SHUTDOWN
for VM in "${RUNNING_VMS[@]}"; do
    # Skip empty lines in the virsh output
    [[ -z "$VM" ]] && continue
    if [[ "$VM" != "VM-TO-NOT-SHUTDOWN" ]]; then
        virsh shutdown "$VM"
        # Write the VM to the list so it can be started again later
        echo "$VM" >> /tmp/vms-running.txt
    fi
done

# Short pause before the next notification, also when no VM was running.
# Note: virsh shutdown only requests a shutdown, it does not wait for the VM to power off.
sleep 5

# Show in the script log
echo "VMs shut down. Starting backup"

# Show notification in Unraid (alert, warning, normal)
/usr/local/emhttp/webGui/scripts/notify -s "Warning [Script] - VMs backup running" -i "warning" -d "VMs shut down. Starting backup"

# Back up domains (VMs)
echo "Backing up /mnt/user/domains/"
rsync -a --delete /mnt/user/domains/ /mnt/user/backups/vmbackups/

# Show notification in Unraid (alert, warning, normal)
/usr/local/emhttp/webGui/scripts/notify -s "Warning [Script] - VMs backup running" -i "warning" -d "Backup done. Starting VMs"

# Start all VMs that were shut down above
mapfile -t STOPPED_VMS < /tmp/vms-running.txt
for VM in "${STOPPED_VMS[@]}"; do
    virsh start "$VM"
done

# Show in the script log
echo "VMs started. Starting to tar files"

# Show notification in Unraid (alert, warning, normal)
/usr/local/emhttp/webGui/scripts/notify -s "Warning [Script] - VMs backup running" -i "warning" -d "VMs started. Starting to tar files"

# Compress each VM folder and remove the original folders
find /mnt/user/backups/vmbackups/ -mindepth 1 -maxdepth 1 -type d -exec tar zcvf {}.tar.gz {} --remove-files \;

# Show in the script log
echo "VMs backup done - Ready to do Duplicacy backup"

# Show notification in Unraid (alert, warning, normal)
/usr/local/emhttp/webGui/scripts/notify -s "Notice [Script] - VMs backup done" -i "normal" -d "Ready to do Duplicacy backup"
```
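A weak spot in the VM script is the fixed 5 second sleep: `virsh shutdown` only asks the guest to shut down and returns straight away, so a VM can still be running when rsync starts copying its disk image. A possible way to wait instead, only as a sketch: `wait_for_vms_off` is a made-up helper, and the timeout and poll interval are values I picked as examples.

```bash
#!/bin/bash

# Hypothetical helper: wait until none of the named VMs is listed as running.
# Usage: wait_for_vms_off TIMEOUT_SECONDS POLL_SECONDS VM...
# Returns 0 once all named VMs are off, 1 if the timeout is reached first.
wait_for_vms_off() {
    local timeout=$1 interval=$2
    shift 2
    local waited=0 vm still_running
    while true; do
        still_running=0
        for vm in "$@"; do
            # -x matches whole lines, -F treats the VM name as a literal string
            if virsh list --state-running --name | grep -qxF -- "$vm"; then
                still_running=1
            fi
        done
        if (( still_running == 0 )); then
            return 0
        fi
        if (( waited >= timeout )); then
            return 1
        fi
        sleep "$interval"
        waited=$(( waited + interval ))
    done
}

# Example: wait up to 5 minutes, checking every 5 seconds
# mapfile -t STOPPED_VMS < /tmp/vms-running.txt
# wait_for_vms_off 300 5 "${STOPPED_VMS[@]}" || echo "Some VMs are still running"
```

If the function returns 1, the script could skip the rsync or send an alert notification rather than copying a disk image that is still in use.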