Hello,

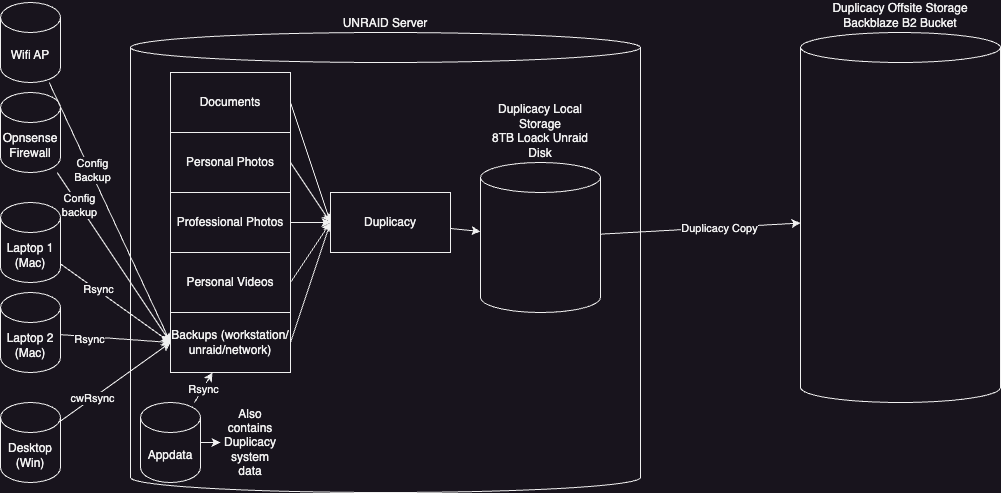

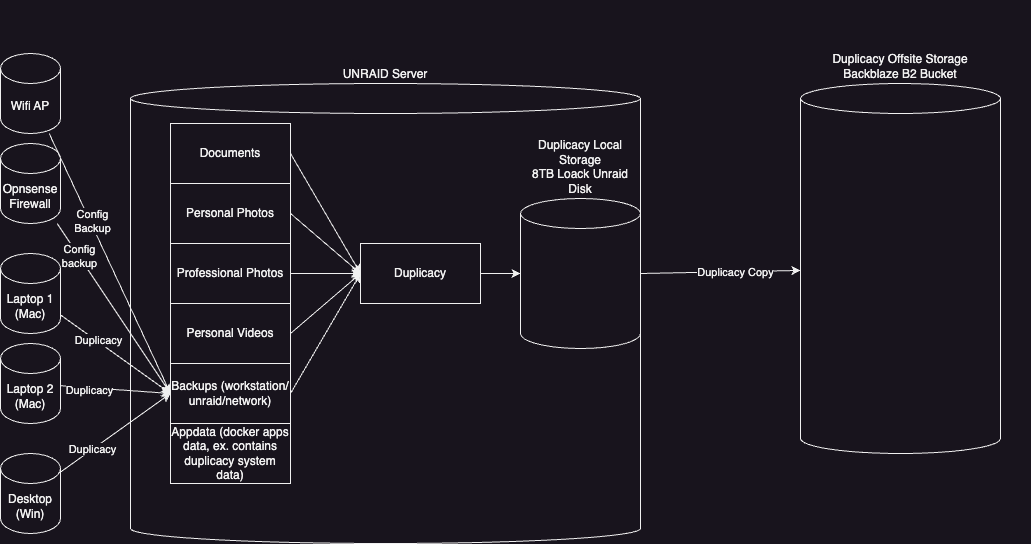

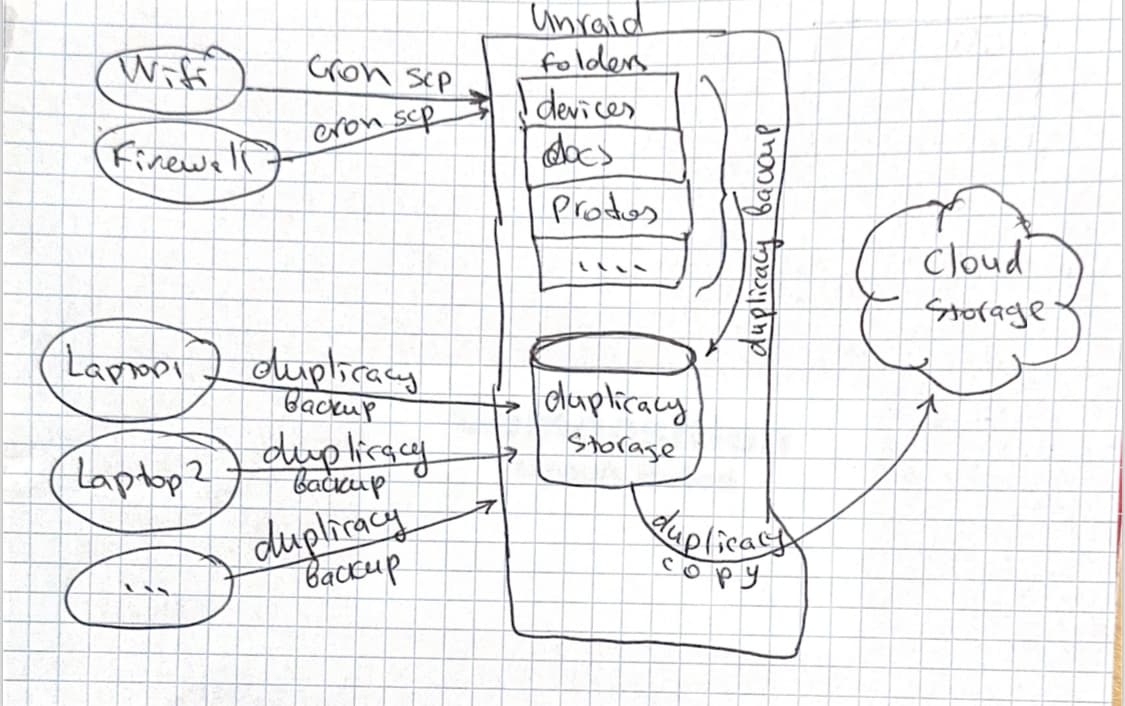

I’m looking for a sanity check on my backup plan. It covers a number of workstations, some appliances, an Unraid NAS, and Backblaze B2. Duplicacy runs in Docker on the Unraid server. Here is my rough plan at a high level:

- 12am: rsync copies the workstations and appliance configs to the Backups folder on the Unraid NAS.

- 12am: a script shuts down the Docker containers (including Duplicacy) so rsync can copy all Docker configs and app data to the Backups folder. When it completes, the script starts the containers again, including Duplicacy. At this point the Backups folder has the latest data from all workstations, appliances, and Docker containers.

- 9am: Duplicacy backs up the following repositories, which are shares on the Unraid NAS (Documents, Personal photos, professional photos, Personal Videos, and the Backups folder). It backs up to local storage (a disk that is not part of the Unraid NAS array).

- When the backups to local storage are complete, Duplicacy then uses its “copy” command to copy the local storage backup to my Backblaze B2 off-site storage.
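To make the plan above concrete, here is a minimal sketch of steps 2 to 4 as I picture them. Everything specific in it is an assumption for illustration: the container names, the paths, the storage names `local` and `b2`, and the idea that the `duplicacy` CLI is on the PATH and run from an already-initialized repository directory (my actual setup is Duplicacy in Docker, so the real invocation may well differ).

```python
import subprocess
from typing import Callable, List, Optional, Sequence

Runner = Callable[..., object]


def run(cmd: List[str], cwd: Optional[str] = None) -> None:
    """Run one command and raise if it exits non-zero."""
    subprocess.run(cmd, cwd=cwd, check=True)


def run_nightly_rsync(containers: Sequence[str], src: str, dst: str,
                      runner: Runner = run) -> None:
    """Step 2: stop containers, rsync app data, always restart containers."""
    runner(["docker", "stop", *containers])
    try:
        # -a preserves permissions, times, and symlinks.
        runner(["rsync", "-a", src, dst])
    finally:
        # Restart even if rsync failed, so Duplicacy is up for the 9am job.
        runner(["docker", "start", *containers])


def run_duplicacy(repo_dir: str, local: str, offsite: str,
                  runner: Runner = run) -> None:
    """Steps 3 and 4: back up to local storage, then copy it off-site."""
    runner(["duplicacy", "backup", "-storage", local], cwd=repo_dir)
    runner(["duplicacy", "copy", "-from", local, "-to", offsite], cwd=repo_dir)


# Hypothetical usage (names and paths are placeholders, not my real ones):
# run_nightly_rsync(["duplicacy", "plex"],
#                   "/mnt/user/appdata/", "/mnt/user/Backups/appdata/")
# run_duplicacy("/mnt/user/Documents", "local", "b2")
```

The `try`/`finally` is there so a failed rsync does not leave the containers stopped. The `runner` parameter only exists so the sequence can be dry-run without touching Docker.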

Here are my concerns/questions:

- I’m thinking rsync from the workstations is the best way to go. If there is a better or recommended route, please let me know.

- Right now I have at least 1 TB of data to back up off-site, which will definitely take a few months with my connection. I can’t wrap my head around how steps 1-3 will work while step 4 takes forever to run. I know I will have to suspend the 2nd step, since Duplicacy needs to keep running while it copies to B2, but what about the backups to local storage? Can Duplicacy keep backing up to my local storage WHILE it continues to run that initial copy to the off-site storage? Will it be an issue that the snapshots on the two storages might be out of sync? I’m new to this, so I would just like expert feedback before I dive in. Thanks for your eyes and input.
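For a sense of scale on that initial upload, here is a back-of-the-envelope estimate. The 1 Mbps sustained upload rate is purely an assumed figure for illustration (I have not measured mine), and 1 TB is taken as 10^12 bytes.

```python
def upload_days(size_bytes: float, upload_mbps: float) -> float:
    """Days needed to upload size_bytes at a sustained rate in megabits/s."""
    bits = size_bytes * 8
    seconds = bits / (upload_mbps * 1_000_000)
    return seconds / 86_400  # seconds per day


# Assumed: 1 TB at a sustained 1 Mbps upload.
print(round(upload_days(1e12, 1.0), 1))  # 92.6
```

At that assumed rate the first copy would run for roughly three months, ignoring protocol overhead and any savings from compression or deduplication.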

Best Regards,

Richard