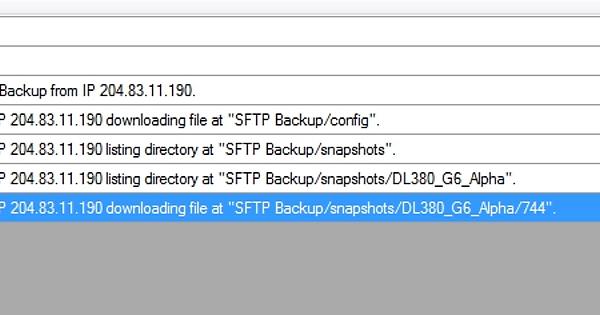

So I’m running into the same issue with Duplicacy that I had with Arq Backup: my remote storage filled up completely, and I’m unable to prune my backups to free up space.

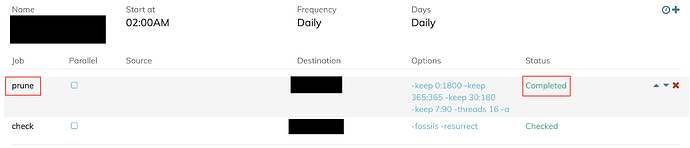

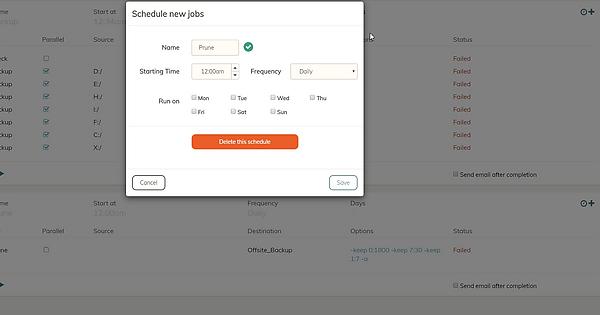

Unfortunately, despite my weekly prune, my storage filled up completely, and now I can’t run a prune from the web UI.

With the command prompt set to Duplicacy’s install folder, I tried `duplicacy_web_win_x64_1.0.0.exe -d -log prune -exclusive -exhaustive`, but that did nothing.

How can I force a prune operation to free up space? It’s pretty annoying that there’s no obvious way to pass command-line options when using the web edition.