This seems like a somewhat unusual issue, or at least one I can’t find the right phrasing for to turn up anything in a search.

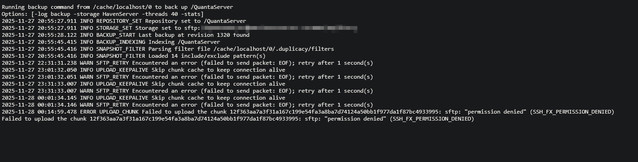

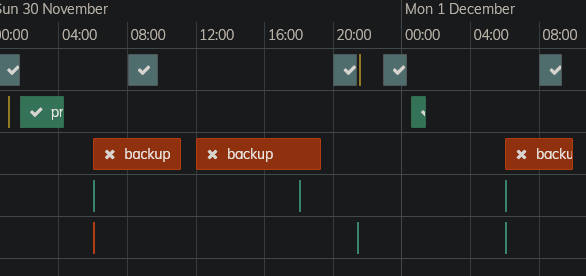

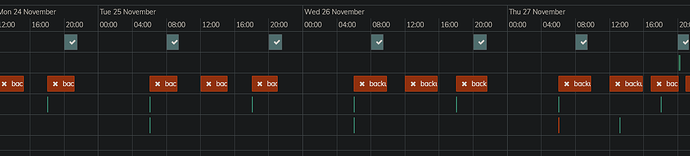

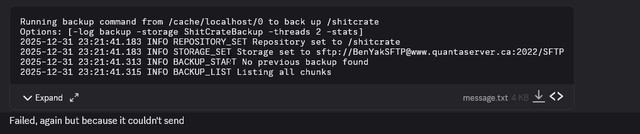

My backup has suddenly started hitting a permissions error, and it had been going on for about a week, possibly longer, before I noticed. I’m running Duplicacy in Docker on Unraid, so I don’t have good CLI access.

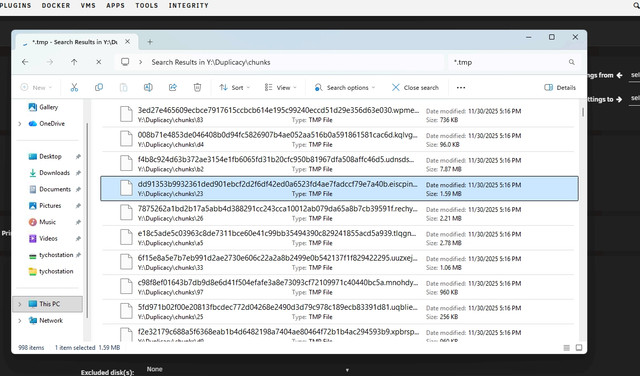

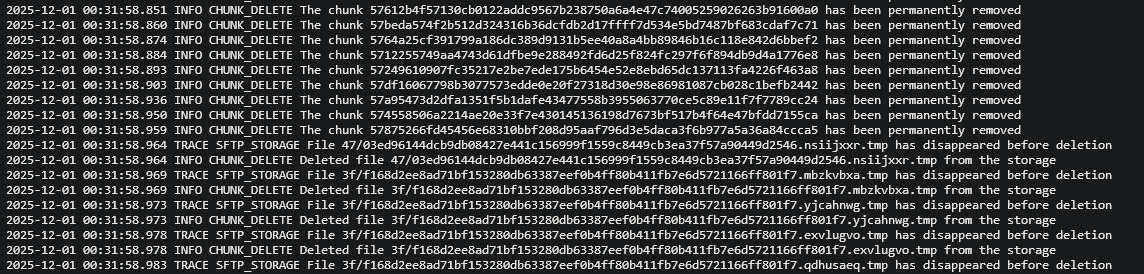

It seems like this one chunk keeps giving me a permissions error right at the end of the backup, while everything else goes through. Are there any arguments that might fix it, or should I try to find the offending chunk on my backup server and delete it?
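If I do end up digging around on the backup server, here is a rough sketch of how I might look for files with odd ownership or permissions. It assumes the storage is a plain directory tree I can reach with Python, and that the storage root itself has the correct owner. The function name `find_odd_permissions` is something I made up for illustration, not anything from Duplicacy.

```python
import os
import stat


def find_odd_permissions(root):
    """Return sorted (path, reason) pairs for files under root that look unusual.

    Assumption: the owner of `root` is the owner every file should have.
    """
    expected_uid = os.stat(root).st_uid
    needed = stat.S_IRUSR | stat.S_IWUSR  # owner read + owner write
    odd = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            info = os.lstat(path)  # lstat so symlinks are not followed
            if info.st_uid != expected_uid:
                odd.append((path, "owner differs from storage root"))
            elif info.st_mode & needed != needed:
                odd.append((path, "owner lacks read or write"))
    return sorted(odd)
```

I would call it on wherever the storage folder actually lives on the server and print the results. This only reports files; it doesn’t change or delete anything.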

Nothing should have changed with the permissions, so it seems odd, especially since the error comes at the very end of the backup, literally the last thing it tries in the final few seconds.

Edit: Actually, from testing today it seems there are two or three different chunks this happens with, not the same one every time, though the same couple come up repeatedly. Going to try with -d.