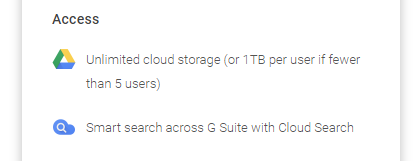

Yes, so to put the full figure on the table, we are talking about roughly 600 EUR per year, or 50 EUR per month. I don’t really want to pay more than 10 EUR per month for my backups, hence four buddies and myself. (Of course, G Suite gives you more than just storage, but I’m not sure I’m interested in anything else.)

Nice. I wasn’t aware of that option.

But given that all other unlimited storage plans (OneDrive, Amazon, …) did not survive long (or are very slow, like Jottacloud), I wonder how long this one is going to last… Maybe their advantage is that it’s not attractive for individual consumers.

Also nice: you can even choose where your data is stored: G Suite Updates Blog: Choose the regions where your data is stored

we all got unlimited storage?