Not entirely certain what effect that might have on a Linux system but even if it ‘touched’ all the files, I’m fairly certain Duplicacy would only need to rehash the source data and determine that it would only need to upload the meta-data chunks, not the file content chunks. Since chunking is deterministic.

In theory, all the revision files for a repository contain enough information to know what chunks should exist in storage. But that excludes chunks for other repositories and backup clients, and it might be difficult to scale if it has to read every revision.

So perhaps it reads only the last revision, which contains the chunklist. And for everything else, before upload it checks to see if it exists? Not certain of that either, would need to check the source code.

Haha, no problem at all.

Good idea, and don’t forget you can always -dry-run to make sure it’s not overly zealous with the downloads. (Actually! It just crossed my mind that it might still download content chunks?? I definately need to check the source…).

Edit: Well OK, seems the copy command doesn’t have a -dry-run option!

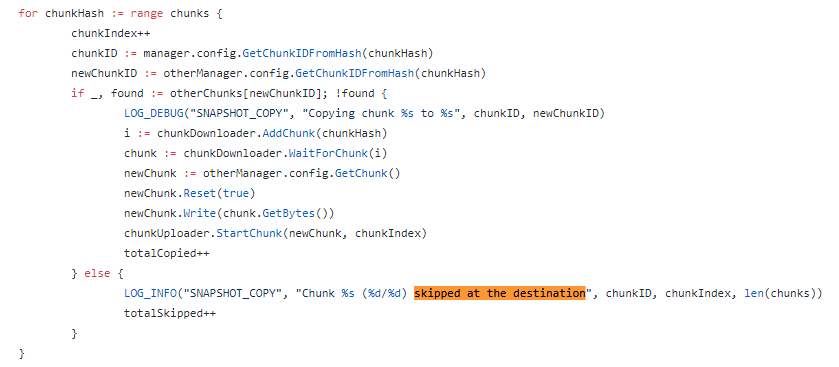

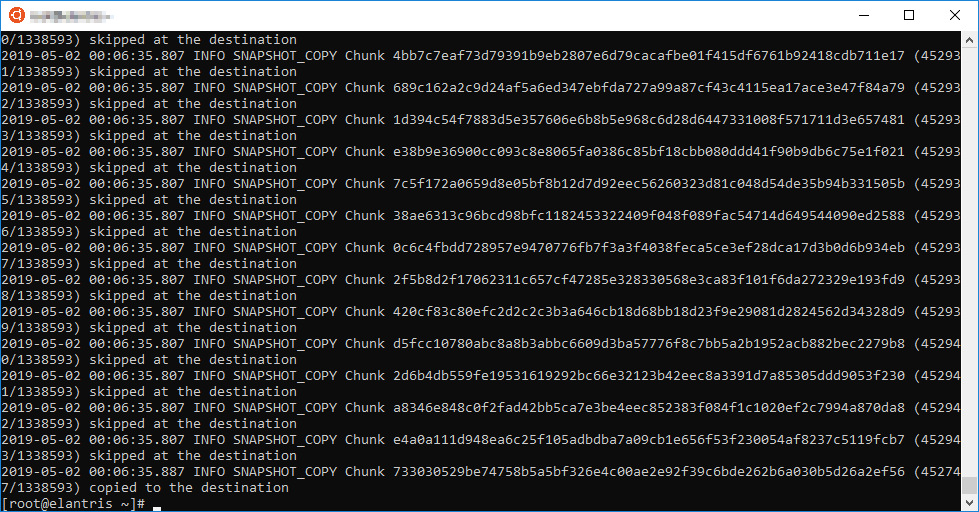

It would be really expensive if it downloads the content chunks, then just skips them if they already exist. I think it would really depend on how it determine what needs to be copied.

It would be really expensive if it downloads the content chunks, then just skips them if they already exist. I think it would really depend on how it determine what needs to be copied.

I’m not sure what to do

I’m not sure what to do

is all about deduplication ,all should be ok with how much new data is uploaded, right?

is all about deduplication ,all should be ok with how much new data is uploaded, right?