TL;DR: my main goal is to create a new local backup and start copying it to my remote. The remote is on revision 566 now, so copying up would produce conflicting revisions. I want my local copy to basically think it’s on 577 instead of 1 so I can copy up from now on… Or any other workaround, but the ones I’ve tried haven’t worked so far.

That shouldn’t be happening. Not if the same repository, with the exact same content, was backed up to a local storage that was created with add -copy. Most of your chunks are already present.

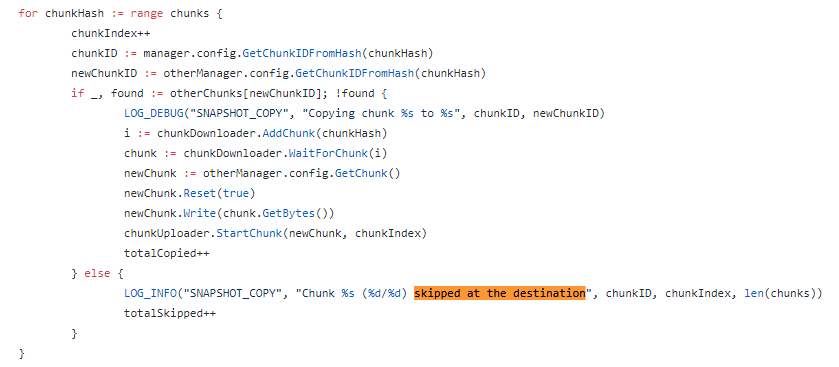

From your screenshot above, it’s saying that most chunks were “skipped at the destination”. That means it has no reason to download that chunk from remote - it merely knows of its existence from the revision you’re copying.

(But make sure you’re only copying the last revision of that specific repository with -id <snapshot id> -r 566, otherwise it’ll grab all the revisions from all of the repositories.)
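As a rough illustration of restricting the copy to one repository and one revision, here is a minimal Python sketch that only assembles the command line (it does not run anything). The snapshot id `my-repo` and the storage names `b2` and `local` are placeholders, not values from this thread; substitute your own.

```python
import shlex


def build_copy_command(snapshot_id, revision, source_storage, target_storage):
    """Build a `duplicacy copy` command limited to one snapshot id and revision.

    All four arguments are placeholders supplied by the caller; nothing is
    executed here, the function only returns the argument list.
    """
    return [
        "duplicacy", "copy",
        "-id", snapshot_id,       # copy only this repository's snapshots
        "-r", str(revision),      # copy only this revision
        "-from", source_storage,  # storage name to copy from
        "-to", target_storage,    # storage name to copy to
    ]


command = build_copy_command("my-repo", 566, "b2", "local")
print(shlex.join(command))
# prints: duplicacy copy -id my-repo -r 566 -from b2 -to local
```

Printing the command first makes it easy to check that both -id and -r are present before running it for real.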

Yes, they are skipped, but network traffic is very high. Are you sure it’s not skipped after trying to copy and then realizing it exists and choosing not to overwrite? Even if it doesn’t download completely before deciding it already exists, how would a get API call be calculated? The copy log shows x/total. That total matches the total number of chunks for my entire repository. I don’t know why there would be so much network traffic if it’s skipping all these files by looking at the latest snapshot from both locations in memory or even just the latest remote snapshot against the files on disk. I might just have to crawl the code and tweak it to my needs if it’s simple enough.

The total is normal, it’s just showing the total number of chunks used for that revision. Skipped chunks should not use bandwidth or any API calls. I can only imagine the network usage is where it’s actually copying needed chunks, like metadata, in separate threads.

You’ll notice, from your screenshot, that the one at the bottom was “copied to the destination”, but that its chunk number (452274) is far earlier than all the others (i.e. 452943). That’s because a thread finished copying that chunk after the main loop had already moved on past it. It also suggests to me that the vast majority of chunks are being skipped, and Duplicacy will sail through those 1.3m chunks pretty sharpish.
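To make that behaviour concrete, here is a small Python model of it. This is not Duplicacy’s code, only a sketch under stated assumptions: `chunks` is the list of chunk hashes used by the revision (assumed unique), `destination` is the set of chunks already known to exist at the destination, and `download` is a hypothetical stand-in for the only step that costs bandwidth.

```python
import threading
from concurrent.futures import ThreadPoolExecutor


def copy_revision(chunks, destination, download, threads=4):
    """Model a copy: skip chunks already at the destination, copy the rest.

    Skipped chunks never reach `download`, so they cost no network traffic.
    Copied chunks are logged when their worker thread finishes, which is why
    a low chunk number can appear after much higher ones in the log.
    """
    total = len(chunks)  # the x/total counter covers every chunk in the revision
    log = []
    lock = threading.Lock()

    def copy_one(index, chunk):
        download(chunk)  # the only step that touches the network
        with lock:
            destination.add(chunk)
            log.append(f"Chunk {chunk} ({index}/{total}) copied to the destination")

    futures = []
    with ThreadPoolExecutor(max_workers=threads) as pool:
        for index, chunk in enumerate(chunks, start=1):
            with lock:
                present = chunk in destination
            if present:
                with lock:
                    log.append(f"Chunk {chunk} ({index}/{total}) skipped at the destination")
            else:
                futures.append(pool.submit(copy_one, index, chunk))
    for future in futures:
        future.result()  # re-raise any error from a worker thread
    return log
```

With five chunks of which three already exist, `download` is called exactly twice, while the log still counts up to 5/5.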

Hmm… IF you ran a recent backup to both the local and remote storages from the same repository, those chunks - including the metadata chunks - should be mostly the same.

HOWEVER, I’ve just now realised that the way your data was chunked when the local and remote backups were made may explain the discrepancy. So, try running a fresh backup to both local and remote with the -hash option. The backup will take longer as it has to hash everything, and you may end up uploading a bit more to B2, but when it comes to copying back revision 567 (as it will be then), it should have less to download and you’ll have less (or hopefully no) download costs to incur.

You can rename a snapshot file if the storage is not encrypted. So if an unencrypted local storage is acceptable to you, you can simply run a local backup at revision 1, and then rename it to whatever revision you want.
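A minimal sketch of that rename, assuming the storage keeps each snapshot file at `snapshots/<snapshot id>/<revision>` under the storage root. That layout, and the helper name `rename_revision`, are assumptions for illustration; check your own storage directory before relying on it.

```python
from pathlib import Path


def rename_revision(storage_root, snapshot_id, old_revision, new_revision):
    """Rename one snapshot file inside an unencrypted local storage.

    Assumes the layout <storage_root>/snapshots/<snapshot_id>/<revision>.
    Refuses to overwrite an existing revision file.
    """
    snapshot_dir = Path(storage_root) / "snapshots" / snapshot_id
    source = snapshot_dir / str(old_revision)
    target = snapshot_dir / str(new_revision)
    if not source.is_file():
        raise FileNotFoundError(f"no snapshot file at {source}")
    if target.exists():
        raise FileExistsError(f"revision {new_revision} already exists at {target}")
    source.rename(target)
    return target
```

For example, `rename_revision("/path/to/local/storage", "my-repo", 1, 567)` would move revision 1 to 567, where the path and `my-repo` are placeholders. Trying it on a copy of the storage first is a cheap precaution.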

Otherwise, another option is to use a new repository id for the local backup, so when you copy from local to B2 there won’t be a revision number conflict.

My sync script runs a backup locally, then one remotely (to make sure the latest revision copied down has the latest data, though the two should mostly be the same). It then runs a copy down. While it seemed unlikely that an API call would be made to copy down skipped chunks, I wanted to be sure. I suppose it is like you said, @Droolio: enough threads are running at once that the chunks that do need to be copied are what is spiking the network. For reassurance, I found the code where this is done, and there is in fact NOT an API call if the chunk exists at the destination according to the latest snapshot. The log message containing “skipped at the destination” implies that no API call was made, based on the following function.

Sorry for being so paranoid and thank you for your reassurances and suggestions.