With Storj you have two options: use the native integration, or use their (or your own) S3 gateway.

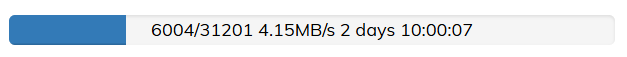

The former option can achieve absolutely ridiculous speeds, however:

- network must be rock solid

- most consumer routers won’t do

- upstream channel needs to be beefy enough to support upload amplification

- upstream latency should be low and/or segment sizes should be as close to 64 MB as possible.
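To get a feel for what "upload amplification" means for your connection, here is a rough back-of-the-envelope sketch. The expansion factor of 2.7 is my assumption for illustration (check the Storj documentation for the actual figure for your setup); the function names are made up for this example.

```python
def required_upstream_mbps(payload_mbps: float, expansion_factor: float) -> float:
    """Upstream bandwidth consumed when every payload byte is expanded client-side."""
    if payload_mbps < 0 or expansion_factor < 1:
        raise ValueError("payload must be >= 0 and expansion factor must be >= 1")
    return payload_mbps * expansion_factor


def effective_payload_mbps(upstream_mbps: float, expansion_factor: float) -> float:
    """Backup throughput you can expect from a given upstream link."""
    if upstream_mbps < 0 or expansion_factor < 1:
        raise ValueError("upstream must be >= 0 and expansion factor must be >= 1")
    return upstream_mbps / expansion_factor


# ASSUMPTION: illustrative expansion factor, not a value taken from this post.
ASSUMED_EXPANSION = 2.7

for upstream in (10, 40, 100):
    native = effective_payload_mbps(upstream, ASSUMED_EXPANSION)
    # With the S3 gateway the expansion happens on the gateway, so factor is 1.
    gateway = effective_payload_mbps(upstream, 1.0)
    print(f"{upstream:>4} Mbps upstream: native ~{native:.1f} Mbps, gateway ~{gateway:.1f} Mbps")
```

This ignores latency, router limits, and protocol overhead, so treat it as an upper bound rather than a prediction.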

The latter option will work better on most residential connections: upstream bandwidth will match exactly what’s being uploaded, and all erasure encoding and distribution to storage nodes will happen on the S3 gateway and not on your machine. It is still worth trying to make the segment size as close to 64 MB as possible, from both performance and cost perspectives.
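To illustrate why chunk size matters, here is a rough sketch that estimates how many objects and segments a backup produces. It assumes 64 MB is the maximum segment size (as implied above) and that every chunk is exactly the average size; real duplicacy chunks vary around the average, so the numbers are only indicative. The function name is hypothetical.

```python
import math

SEGMENT_MB = 64  # assumed maximum segment size


def estimate_chunks_and_segments(total_gb: float, avg_chunk_mb: int) -> tuple[int, int]:
    """Return (chunk_count, segment_count), treating every chunk as average-sized."""
    if total_gb <= 0 or avg_chunk_mb <= 0:
        raise ValueError("sizes must be positive")
    total_mb = total_gb * 1024
    chunks = math.ceil(total_mb / avg_chunk_mb)
    # Each chunk is uploaded as one object; an object needs at least one segment.
    segments_per_chunk = math.ceil(avg_chunk_mb / SEGMENT_MB)
    return chunks, chunks * segments_per_chunk


for avg in (4, 16, 32, 64):
    chunks, segments = estimate_chunks_and_segments(1024, avg)
    print(f"avg chunk {avg:>2} MB: ~{chunks:>6} chunks, ~{segments:>6} segments per TB")
```

Fewer, larger segments mean fewer round trips per byte uploaded, and fewer segments to pay for if your plan bills per segment.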

I suggest initializing a test repository on your machine in an empty folder, pointing it to Storj in both configurations (native and S3), and running duplicacy benchmark with different chunk size parameters.

This should help you pick the best configuration for your circumstances (machine speed, network stability, etc.).
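A small sketch of how the benchmark matrix could be generated. The storage names `storj-native` and `storj-s3` are hypothetical (whatever you named the two storages in the test repository), and the `-storage`, `-chunk-size` (in MB) and `-chunk-count` options are as I recall them from the duplicacy CLI help, so verify with `duplicacy benchmark -help` before relying on them. The script only prints the commands; it does not run anything.

```python
import shlex


def benchmark_commands(storage_names, chunk_sizes_mb, chunk_count=16):
    """Build one 'duplicacy benchmark' argv per (storage, chunk size) pair."""
    commands = []
    for name in storage_names:
        for size in chunk_sizes_mb:
            commands.append([
                "duplicacy", "benchmark",
                "-storage", name,            # which configured storage to test
                "-chunk-size", str(size),    # chunk size in MB
                "-chunk-count", str(chunk_count),
            ])
    return commands


STORAGES = ["storj-native", "storj-s3"]  # hypothetical storage names
CHUNK_SIZES_MB = [4, 16, 32, 64]

for argv in benchmark_commands(STORAGES, CHUNK_SIZES_MB):
    print(shlex.join(argv))
```

Run the printed commands from inside the test repository and compare upload and download speeds per storage and chunk size.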

On the average chunk size: duplicacy uses a 4 MB average chunk size by default. That is arguably way too small, even for other storages that don’t incentivize large chunk sizes. Picking 32 MB could yield better results. The chunk size is set at the storage initialization stage.

A (very) long thread on the same topic: Completely new: where to start - #27 by saspus