Hey all - looking forward to seeing if Duplicacy is the right fit for me. I’ve been using restic, but I’m debating whether Duplicacy may be better for my use case. To start, I’ve been testing it on my NAS backup. Because my home connection is slow, I spun up a VPS, restored the TBs of data to it, then used Duplicacy to back up from the VPS -> B2. The plan was that, thanks to deduplication, I’d be able to back up from home afterward without uploading many (or any) chunks.

Once that completed, I started backing up from my home NAS and noticed that it was uploading almost all chunks as brand new. Unfortunate, but based on my reading, if even one file is slightly moved, it can cause all chunks to be re-uploaded (I’m guessing this happened because I backed up from /mnt/restore/ instead of just /). Because of this, I tried pruning out the old backups. I figured that setting it to keep “0 backups when older than 1 day” would be sufficient; however, when I go to delete the fossils, I’m told “Fossils from collection 1 can’t be deleted because deletion criteria aren’t met”.

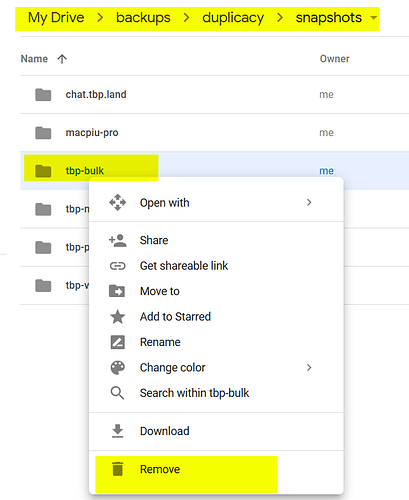
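As a rough reference, the documented meaning of `-keep n:m` is "keep one snapshot every n days for snapshots older than m days", with n = 0 meaning keep none, and multiple `-keep` options listed from the largest m down. The sketch below is a simplified Python model of that rule under those assumptions, not Duplicacy's actual code; `Snapshot` and `revisions_to_prune` are hypothetical names, and the ages in the example are made up so that the result mirrors the revisions deleted in the log further down.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Snapshot:
    revision: int
    age_days: float  # days since this revision was created (hypothetical input)


def revisions_to_prune(snapshots, policies):
    """Simplified model of `-keep n:m` options.

    Each (n, m) pair reads "keep one snapshot every n days for snapshots
    older than m days"; n == 0 means keep none of them. Snapshots younger
    than every m are always kept.
    """
    # Check the policy with the largest m first, so each snapshot is governed
    # by the oldest age bracket it falls into.
    ordered = sorted(policies, key=lambda p: p[1], reverse=True)
    last_kept = {}  # (n, m) -> age of the most recently kept snapshot in that bracket
    doomed = []
    # Walk from oldest to newest.
    for snap in sorted(snapshots, key=lambda s: s.age_days, reverse=True):
        for n, m in ordered:
            if snap.age_days < m:
                continue
            if n == 0:
                doomed.append(snap.revision)
            elif (n, m) in last_kept and last_kept[(n, m)] - snap.age_days < n:
                doomed.append(snap.revision)  # too close to the last kept one
            else:
                last_kept[(n, m)] = snap.age_days
            break  # only the first matching policy applies
    return sorted(doomed)


snaps = [Snapshot(1, 3.0), Snapshot(6, 1.5), Snapshot(7, 0.2)]
print(revisions_to_prune(snaps, [(0, 1)]))  # [1, 6]
```

In this model, `-keep 0:1` removes every revision older than a day and leaves the newest one alone; the chunks those revisions referenced only become fossils, which is where the deletion criteria come in.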

Further reading suggested I needed to do another backup from the original machine before I could prune this out. Since I’d already deleted the VPS, I backed up a blank folder with a config file identifying it by the same snapshot ID (i.e. duplicacy-vps). I was then able to run the prune command, but not many chunks were removed. Looking into it a bit more, I found out you can remove snapshots/revisions by ID, so I told Duplicacy to remove these manually, but again, I cannot remove the fossils. How am I supposed to handle the case where a machine is no longer available but I want to remove it from the backup entirely? Surely I am missing something.
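The "deletion criteria" in that message refer to Duplicacy's two-step fossil collection. Per its lock-free deduplication design notes, as best I understand them, fossils can only be deleted once every snapshot ID in the storage has a new backup that the collection step did not see, and that backup must have started after the collection finished; snapshot IDs with no backups in roughly the last 7 days are reportedly treated as inactive and skipped. The Python sketch below models that check under those assumptions. `FossilCollection`, `can_delete`, the 7-day `INACTIVE_AFTER` window, and the IDs `host-a` / `retired-host` are hypothetical illustrations, not Duplicacy's API.

```python
from __future__ import annotations

from dataclasses import dataclass

# Assumed inactivity window: IDs whose latest backup started longer ago than
# this are skipped when checking the criterion (hedged, see lead-in).
INACTIVE_AFTER = 7 * 24 * 3600


@dataclass
class FossilCollection:
    number: int
    end_time: float                  # when the collection step finished (epoch seconds)
    last_revisions: dict[str, int]   # snapshot ID -> latest revision seen at that time


def can_delete(
    collection: FossilCollection,
    latest: dict[str, tuple[int, float]],
    now: float,
    inactive_after: float = INACTIVE_AFTER,
) -> tuple[bool, list[str]]:
    """Return (deletable, blocking_ids) for one fossil collection.

    `latest` maps each snapshot ID in the storage to
    (latest revision, start time of that backup).
    """
    blocking = []
    for snap_id, (revision, start_time) in latest.items():
        if now - start_time > inactive_after:
            continue  # assumed: long-idle IDs are treated as inactive
        seen = collection.last_revisions.get(snap_id, 0)
        # Needs a revision the collection step never saw, started after it finished.
        if revision <= seen or start_time < collection.end_time:
            blocking.append(snap_id)
    return (not blocking, blocking)


c = FossilCollection(5, end_time=1000.0, last_revisions={"host-a": 7, "retired-host": 3})
latest = {"host-a": (8, 2000.0), "retired-host": (3, 500.0)}
print(can_delete(c, latest, now=3000.0))  # (False, ['retired-host'])
```

In this model, a retired machine that has neither a fresh backup nor a long enough idle period keeps blocking fossil deletion for every ID sharing the storage, even when the remaining hosts have backed up since.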

Edit: Actually, it seems I can’t even do a single-host prune properly:

```
[root@mail ~]# ./duplicacy_linux_x64_2.2.1 prune -keep 0:1
Repository set to /
Storage set to b2://mail/
Keep no snapshots older than 1 days
Fossil collection 1 found
Fossils from collection 1 can't be deleted because deletion criteria aren't met
Fossil collection 2 found
Fossils from collection 2 can't be deleted because deletion criteria aren't met
Fossil collection 3 found
Fossils from collection 3 can't be deleted because deletion criteria aren't met
Fossil collection 4 found
Fossils from collection 4 can't be deleted because deletion criteria aren't met
Deleting snapshot mail at revision 1
Deleting snapshot mail at revision 6
Fossil collection 5 saved
The snapshot mail at revision 1 has been removed
The snapshot mail at revision 6 has been removed

[root@mail ~]# ./duplicacy_linux_x64_2.2.1 backup
Repository set to /
Storage set to b2://mail/
Last backup at revision 7 found
Indexing /
Parsing filter file /root/.duplicacy/filters
Loaded 6 include/exclude pattern(s)
Backup for / at revision 8 completed

[root@mail ~]# ./duplicacy_linux_x64_2.2.1 prune -delete-only
Repository set to /
Storage set to b2://mail/
Fossil collection 1 found
Fossils from collection 1 can't be deleted because deletion criteria aren't met
Fossil collection 2 found
Fossils from collection 2 can't be deleted because deletion criteria aren't met
Fossil collection 3 found
Fossils from collection 3 can't be deleted because deletion criteria aren't met
Fossil collection 4 found
Fossils from collection 4 can't be deleted because deletion criteria aren't met
Fossil collection 5 found
Fossils from collection 5 can't be deleted because deletion criteria aren't met
```
