Because of the way Synology handles file permissions, combined with some Docker containers constantly chowning the files in their bound volumes and resetting whatever permissions I set on them, the duplicacy user created when the package is installed is unable to read many of the files I need to back up. Is there any way to make the backups run as root using the package, or do I need to roll my own solution? I’m not very interested in running the web UI as root, just the backup engine. I understand the implications of running a process as root, but I think this is the only feasible way to get backups working properly on a multi-user system where Docker containers constantly chown files (it appears HyperBackup also runs as root on Synology to back up everything). I’m running the latest 1.8.3 Web Edition.

- Get rid of Docker and the abhorrent Synology packaging system (see “Duplicacy Web on Synology Diskstation without Docker | Trinkets, Odds, and Ends”) and run the web UI as some other user (let’s call it duplicacy).

- Replace the Duplicacy CLI with a shim script that runs the original Duplicacy CLI under sudo.

- In the sudoers file, allow the duplicacy user to run the Duplicacy CLI.

- Turn off env_reset (the web UI communicates with the CLI via environment variables).

- Finally, set permissions on newly created stat files so the web UI can display them.

But it is much easier to run the web UI as root.

Do you have any example for the sudoers file entries and a shim script?

Something like:

```
username ALL=(ALL:ALL) NOPASSWD: /path/to/duplicacy_cli
```
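A slightly fuller sketch of that entry, written as a drop-in file. The account name, the binary path, and the assumption that your sudoers configuration includes `/etc/sudoers.d` (look for an `#includedir` line) are placeholders to adapt. The rule here is narrowed to running one binary as root, and the generated file is checked with `visudo -cf` before it is installed, since a malformed sudoers file can break sudo entirely.

```shell
#!/usr/bin/env bash
# Sketch: render a sudoers rule that lets one unprivileged account run one
# specific binary as root without a password prompt. The user name and
# binary path are placeholders, not values from any particular install.

render_sudoers_rule() {
  local user="$1" cli="$2"
  # (root) restricts the target user; NOPASSWD skips the password prompt,
  # which the web UI could not answer anyway.
  printf '%s ALL=(root) NOPASSWD: %s\n' "$user" "$cli"
}

# Example usage, run as root (kept as comments so loading this file is harmless):
#   tmp="$(mktemp)"
#   render_sudoers_rule duplicacy /path/to/duplicacy > "$tmp"
#   visudo -cf "$tmp" && install -m 0440 -o root -g root "$tmp" /etc/sudoers.d/duplicacy
#   rm -f "$tmp"
```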

Thanks for the info! If possible, can you elaborate a little more on steps 4 and 5, please?

- Turn off env_reset (the web UI communicates with the CLI via environment variables)

Would we want to disable env_reset for the duplicacy user, or only for the duplicacy_cli command?

- In the end, set permissions on newly created stat files for the web UI to display

Not entirely sure what this means; mind giving an example (even if it’s contrived)?

Thanks!

I set it globally in the sudoers file:

```
Defaults !env_reset
```

This is needed so that the environment is passed to the process launched with sudo. The web UI passes credentials to the CLI via the environment.

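I think you can also narrow it down to a specific user or a specific command (a single Defaults line can be scoped to one or the other, not both), so for the duplicacy user:

```
Defaults:username !env_reset
```

or for just the CLI binary:

```
Defaults!/usr/local/bin/duplicacy !env_reset
```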
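To see why the environment matters, a small illustration: exported variables are inherited by child processes, while `env -i` starts a command with an empty environment, which is roughly what `env_reset` does to any variable not on sudo’s keep list (sudo still provides a small set such as `PATH`, `HOME` and `USER`). `DUPLICACY_PASSWORD` is used only as a stand-in for whatever the web UI actually exports.

```shell
#!/usr/bin/env bash
# Illustration: exported variables reach child processes unless the
# environment is cleared. DUPLICACY_PASSWORD is a stand-in name here; the
# variables the web UI actually sets may differ.

# Child-side check: prints "yes" if the variable is visible, "no" otherwise.
probe='if [ -n "${DUPLICACY_PASSWORD:-}" ]; then echo yes; else echo no; fi'

export DUPLICACY_PASSWORD="example-secret"

# Ordinary child process: inherits the exported variable.
inherited="$("$BASH" -c "$probe")"

# Empty environment: roughly what env_reset does to variables that are not
# on sudo's keep list.
reset="$(env -i "$BASH" -c "$probe")"

echo "inherited: $inherited"
echo "after env -i: $reset"
```

It prints `inherited: yes` followed by `after env -i: no`, which mirrors why the CLI lost its credentials until env_reset was turned off.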

I thought the stat files were created by the duplicacy CLI, so for the web UI to be able to read them, the owner or permissions would need to be adjusted now that the two run under different user accounts.

However, thinking more about it, I believe those files are created by duplicacy_web, so you can completely disregard item 5.

I just tried it and it worked perfectly!

I have the shim script as such:

```bash
#!/bin/bash

# Get the directory of the current script
bin_dir="$(dirname "$(readlink -f "$0")")"

args=()

# Skip the first argument (the script name)
shift

# Add all remaining arguments to the args array
while [[ $# -gt 0 ]]; do
  args+=("$1")
  shift
done

# Pass the collected arguments to duplicacy
sudo "$bin_dir/duplicacy" "${args[@]}"
```

```
❯ ls -al
drwxrwxrwx duplicacy users 1.6 KB Fri Mar 21 17:42:22 2025 .
drwxrwxrwx duplicacy users 2.0 KB Fri Mar 21 17:46:56 2025 ..
.rwxr-xr-x duplicacy users  35 MB Tue Nov  5 09:26:53 2024 duplicacy
.rwxr-xr-- duplicacy users 347 B  Fri Mar 21 17:42:21 2025 duplicacy_linux_x64_3.2.4
```

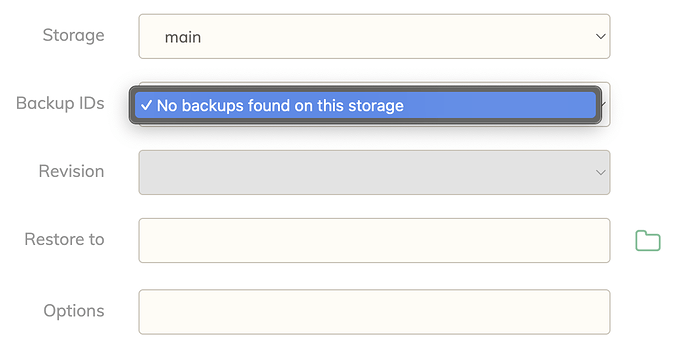

Not sure if it’s related, but after this change, restore in the Duplicacy Web UI is no longer able to list the backup IDs.

However, the odd thing is that I can see the backups (snapshot IDs) being listed in the logs! All my backup and check jobs also executed successfully.

`~duplicacy/.duplicacy-web/logs/duplicacy_web.log`:

```
2025/03/21 19:42:31 192.168.x.x:16315 POST /list_repositories
2025/03/21 19:42:31 Running /var/services/homes/duplicacy/.duplicacy-web/bin/duplicacy_linux_x64_3.2.4 [-log -d info -repository /var/services/homes/duplicacy/.duplicacy-web/repositories/localhost/all /volume1/xxxx/yyyyy]
2025/03/21 19:42:31 Set current working directory to /var/services/homes/duplicacy/.duplicacy-web/repositories/localhost/all
2025/03/21 19:42:31 CLI: The storage is encrypted with a password
2025/03/21 19:42:32 CLI: steam_1
2025/03/21 19:42:32 CLI: steam_2
2025/03/21 19:42:32 CLI: steam_3
```

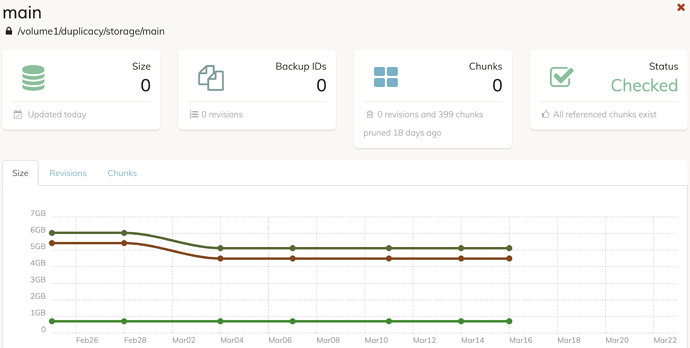

It also no longer correctly reports the storage details in the Storage tab, even though the Check job completed successfully. Everything reports as 0.

I’m wondering if maybe sudo breaks communication by reopening the descriptors. Perhaps some shenanigans with manually connecting inputs and outputs could be needed (e.g. with mkfifo).

Is the issue with using sudo per se, or due to simply calling duplicacy from within the bash script? I.e. is there any difference between:

- `sudo duplicacy …`
- `duplicacy …`
- `exec duplicacy …`
- `exec sudo duplicacy …`
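One concrete difference between these variants: without `exec`, the bash script stays alive and the CLI runs as its child, whereas `exec` replaces the bash process, so the caller ends up talking directly to the command under the same PID. This snippet (plain bash, nothing Duplicacy-specific) makes that visible by comparing PIDs:

```shell
#!/usr/bin/env bash
# Show how `exec` changes process structure: print the PID of a bash
# process and then the PID of the command it starts.

# Returns success if the two PIDs (one per line) are identical.
same_pid() {
  local first second
  { read -r first; read -r second; } <<< "$1"
  [ "$first" = "$second" ]
}

# Without exec: the inner bash is a separate child process. The trailing
# `true` keeps bash from optimizing the last command into an implicit exec.
without_exec="$(bash -c 'echo $$; bash -c "echo \$\$"; true')"

# With exec: the outer bash is replaced, so the command keeps its PID.
with_exec="$(bash -c 'echo $$; exec bash -c "echo \$\$"')"

if same_pid "$without_exec"; then echo "without exec: same PID"; else echo "without exec: new PID"; fi
if same_pid "$with_exec"; then echo "with exec: same PID"; else echo "with exec: new PID"; fi
```

It prints `without exec: new PID` and `with exec: same PID`.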

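Another nuclear approach would be to make the duplicacy binary owned by root and setuid (chown root duplicacy, then chmod u+s duplicacy, in that order, since chown clears the setuid bit) so it always runs as root, remove sudo (to avoid an extra process and the associated potential piping issues), and run duplicacy with exec in the bash script to reuse the same process.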
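Two details matter for the setuid route: the bit makes a binary run as its owner, so it only yields root if the file is owned by root, and Linux ignores setuid on interpreted scripts, so it has to be set on the real binary rather than on a bash shim. A small check for the first point, assuming GNU `stat` (its `-c` format option); `is_setuid_root` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Check whether a file would actually run as root via the setuid bit.
# Assumes GNU coreutils `stat` (the -c format option).

is_setuid_root() {
  local file="$1"
  # -u: the setuid bit is set; %u: numeric owner UID (0 means root).
  [ -u "$file" ] && [ "$(stat -c %u "$file")" -eq 0 ]
}

# Example (path is an assumption):
#   is_setuid_root /path/to/duplicacy && echo "runs as root" || echo "does not run as root"
```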

If nothing works, we may ask @gchen for advice, in case there is something peculiar in how the web UI handles communication with the CLI in the restore workflow: if the logs make it back, why would the list of files for restore be any different? Alternatively, use another instance of duplicacy for restores, which ideally you should never need to do.

Thanks for the ideas.

Is the issue with using sudo per se, or due to simply calling duplicacy from within the bash script?

I believe it’s the latter. There is no difference between any of these:

- `sudo duplicacy …`
- `duplicacy …`
- `exec duplicacy …`
- `exec sudo duplicacy …`
- `chmod +s duplicacy` + `exec` within the bash script also doesn’t work

The only thing that works is to remove the shim script and change it back to the real executable.

Any ideas @gchen? This has been bothering me for a while, and I feel like we are getting close to a solution.

Previously, whenever I had to do a restore, I had to change my systemd service’s user (which is a bit of a hassle).

```
2025/03/21 19:42:31 CLI: The storage is encrypted with a password
2025/03/21 19:42:32 CLI: steam_1
2025/03/21 19:42:32 CLI: steam_2
2025/03/21 19:42:32 CLI: steam_3
```

Where does this ‘CLI:’ prefix come from? I think it is the reason the web GUI can’t parse the output of the CLI.

Hmm, I never noticed that CLI: prefix until you mentioned it!

I followed @saspus’s suggestion of replacing the original duplicacy_linux_x64_3.2.4 binary with a shim script, which internally invokes the renamed binary (now just named duplicacy) using sudo.

Details in this thread: Synology Web Edition package - run backups as root? - #7 by taichi

I see that normally, where the CLI: prefix now appears, the log shows STORAGE_SNAPSHOT instead. Not really sure where that prefix comes from!

```
2025/03/24 11:19:39 Set current working directory to /var/services/homes/duplicacy/.duplicacy-web/repositories/localhost/all
2025/03/24 11:19:39 INFO STORAGE_ENCRYPTED The storage is encrypted with a password
2025/03/24 11:19:40 INFO STORAGE_SNAPSHOT steam_1
2025/03/24 11:19:40 INFO STORAGE_SNAPSHOT steam_2
2025/03/24 11:19:40 INFO STORAGE_SNAPSHOT steam_3
```

Investigation continues…

Hi @gchen! Would you be able to take a look at the source code of the web gui please? Specifically:

- Can you identify where the CLI: output is coming from?

- Is there a way to modify it to work with @saspus’s suggestion (parsing the output of the CLI)?

Thank you!

Sorry, I didn’t look carefully and thought “CLI:” was printed by your bash script. It is actually the web GUI that adds it when its regular expression fails to match a meaningful log line. It looks like the -log option didn’t get passed to the CLI executable, so the log ID wasn’t printed out?
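To illustrate the mechanism described above: with `-log`, each CLI line carries a level and a log ID (as in `INFO STORAGE_SNAPSHOT steam_1`), and lines that lack them cannot be matched. The pattern below is a hypothetical approximation written for this example, not the web UI’s actual regular expression; `classify_line` is likewise a made-up name.

```shell
#!/usr/bin/env bash
# Hypothetical approximation of how a log consumer might sort CLI output.
# This is NOT the web UI's actual pattern; it only shows why lines without
# a level and log ID (what -log adds) end up in a generic "CLI:" bucket.

# Optional prefix (e.g. a timestamp), a level, an upper-case log ID, then text.
log_line_re='^(.*[[:space:]])?(TRACE|DEBUG|INFO|WARN|ERROR|FATAL)[[:space:]]+([[:upper:]][[:upper:][:digit:]_]*)[[:space:]]+(.*)$'

classify_line() {
  local line="$1"
  if [[ $line =~ $log_line_re ]]; then
    # Structured line: report the log ID and the message.
    printf '%s %s\n' "${BASH_REMATCH[3]}" "${BASH_REMATCH[4]}"
  else
    # Unrecognized line: fall back to a generic prefix.
    printf 'CLI: %s\n' "$line"
  fi
}

# The same snapshot line with and without the -log decoration:
classify_line 'INFO STORAGE_SNAPSHOT steam_1'
classify_line 'steam_1'
```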

OMG, you are right @gchen!

There was a bug in the shim script: an unnecessary shift at the beginning inadvertently removed the -log argument.
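A cheap way to catch this kind of wrapper bug is to log the exact argument list the shim receives before handing off to the real binary. A minimal sketch: `log_args` and the log path are hypothetical, `%q` shell-quotes each argument so spaces and empty strings stay visible, and the `%(...)T` timestamp needs bash 4.2 or newer.

```shell
#!/usr/bin/env bash
# Debugging aid (hypothetical): append the exact argument list a wrapper
# received to a log file, one invocation per line.

log_args() {
  local logfile="$1"
  shift
  {
    # Timestamp of this invocation (-1 means "now").
    printf '%(%Y-%m-%d %H:%M:%S)T' -1
    # %q shell-quotes each argument, so spaces and empty strings stay visible.
    printf ' %q' "$@"
    printf '\n'
  } >> "$logfile"
}

# In a shim, call it before handing off to the real binary, e.g.:
#   log_args /tmp/duplicacy-shim.log "$@"
```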

It all seems to work now (check, backup, restore…), thank you @saspus and @gchen!
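With the stray `shift` gone, the shim reduces to forwarding `"$@"` unchanged. A minimal sketch of installing such a shim, assuming the layout used in this thread (the versioned CLI the web UI launches, e.g. `duplicacy_linux_x64_3.2.4`, renamed to `duplicacy` in the same directory); `install_shim` is a hypothetical helper. The shim embeds the real binary’s absolute path, which is also the path the sudoers rule has to name.

```shell
#!/usr/bin/env bash
# Sketch: swap the CLI binary that the web UI launches for a shim that runs
# the real binary through sudo. Directory and file names are assumptions
# based on the layout described in this thread.

install_shim() {
  local bin_dir="$1" cli_name="$2"
  local real="$bin_dir/duplicacy"

  # Keep the real binary under a new name (skipped if already renamed).
  if [ ! -e "$real" ]; then
    mv "$bin_dir/$cli_name" "$real" || return 1
  fi

  # Write the shim: every argument is forwarded unchanged ("$@", no shift),
  # and exec replaces the shell with sudo so no extra process lingers.
  {
    printf '#!/bin/bash\n'
    printf 'exec sudo %q "$@"\n' "$real"
  } > "$bin_dir/$cli_name" || return 1
  chmod 0755 "$bin_dir/$cli_name"
}

# Example (as the web UI's user; adjust the version suffix to yours):
#   install_shim ~/.duplicacy-web/bin duplicacy_linux_x64_3.2.4
```

`exec` is not what fixed the problem; it only avoids leaving an extra bash process between the web UI and the CLI.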

Ngl, the web UI becomes so much more useful with this.

@saspus Now that the CLI operations run as root, the Web UI no longer has enough permission to abort any operations that were started.

It is something I am willing to live with since it’s not very often that I need to cancel, and I figure I could always do a kill -SIGTERM on the sudo’ed process. Does that sound like the right way to abort?

Lol, this is pretty cool confirmation that everything works correctly. Also, perhaps it’s a good thing: nobody can accidentally abort a backup.

Also, perhaps it’s a good thing – nobody can accidentally abort a backup

Yes. I don’t usually bother with pleasantries and just use -KILL: backup tools, above all else, are expected to handle sudden interruptions. -TERM would indeed be more polite.
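A common pattern that combines both: send SIGTERM, wait a grace period, and only then escalate to SIGKILL. A sketch; `stop_gracefully` is a hypothetical helper, the 30-second default is arbitrary, and it must run as root (for example from `sudo bash`) to signal the root-owned CLI process.

```shell
#!/usr/bin/env bash
# Sketch: ask a process to stop with SIGTERM, then fall back to SIGKILL if it
# is still running after a grace period. Signalling a root-owned duplicacy
# process requires running this as root (e.g. from `sudo bash`).

stop_gracefully() {
  local pid="$1" grace="${2:-30}" i
  # Polite request first; if the PID is already gone there is nothing to do.
  kill -TERM "$pid" 2>/dev/null || return 0
  for ((i = 0; i < grace; i++)); do
    # kill -0 sends no signal; it only checks whether the PID still exists.
    kill -0 "$pid" 2>/dev/null || return 0
    sleep 1
  done
  # Still alive after the grace period: force it.
  kill -KILL "$pid" 2>/dev/null || true
}

# Example (the PID is a placeholder; look up the actual CLI process first):
#   stop_gracefully 12345 30
```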