I’ve installed Duplicacy on Unraid using the Community Applications Docker container.

Everything is set up as follows.

The Unraid server mounts an NFS share from a remote server over Tailscale, which I use for remote backups.

From Unraid itself I can read from and write to the share without any problems.

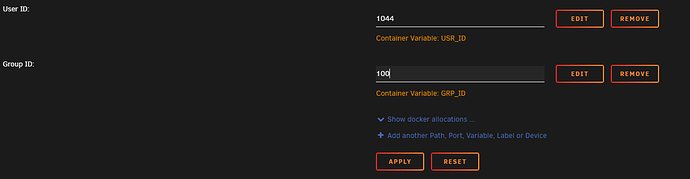

In the Docker container settings I’ve configured this path mapping:

User Data: `/mnt/remotes/ip_backups/`

Container Path: `/backuproot`

When I open Duplicacy to set up the storage and select `/backuproot`, I get the following error:

`Failed to check the storage at /backuproot: stat /backuproot/config: permission denied`

If I `docker exec` into the Duplicacy container, I can read and write to `/backuproot`: I can see the files on the shared drive and write new files to it.

What gives?