Hey folks,

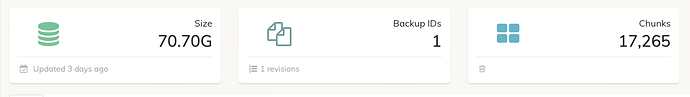

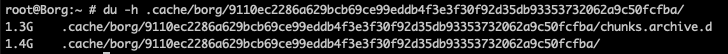

I’m just wondering about the size of the cache relative to the size of the backup. I have a device which is backed up. If I add the same storage to another device and restore a single file of about 150MB, the cache on that second device ends up at 1.4GB, for a 70GB repository with only 1 revision:

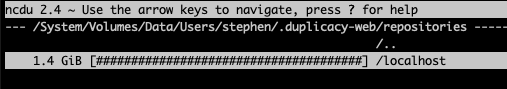

I have another backup which is ~2TB with 47 revisions, and the cache on that one is only just hitting 1.4GB.

Any reason why the cache for the Duplicacy backup might be so much larger, proportionally? I would like to switch to using Duplicacy for everything, but if the cache keeps growing at this rate, it will unfortunately be too large for me to use.
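To put rough numbers on "proportionally", here is a quick back-of-the-envelope sketch using only the figures above. It assumes 2TB is about 2000GB, and the projection assumes the cache scales linearly with repository size, which is just my worry and not something I know to be true. The function name is my own.

```python
def cache_ratio(cache_gb: float, repo_gb: float) -> float:
    """Return cache size as a fraction of repository size."""
    return cache_gb / repo_gb


# Figures from this post; 2TB is approximated as 2000GB (assumption).
small_repo_ratio = cache_ratio(1.4, 70)    # 70GB repository, 1 revision
large_repo_ratio = cache_ratio(1.4, 2000)  # ~2TB backup, 47 revisions

# How many times larger the cache is, relative to repository size.
relative_factor = small_repo_ratio / large_repo_ratio

# Hypothetical projection: cache size for a 2TB repository IF the cache
# scaled linearly with repository size (an assumption, not a known fact).
projected_cache_gb = small_repo_ratio * 2000

print(f"70GB repository: cache is {small_repo_ratio:.2%} of repository size")
print(f"~2TB backup:     cache is {large_repo_ratio:.2%} of repository size")
print(f"Relative difference: about {relative_factor:.0f}x")
print(f"Projected cache at 2TB (linear assumption): {projected_cache_gb:.0f}GB")
```

So the concern is that a cache of roughly 2% of the repository would be tens of GB at my full backup size, if it really does scale that way.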