Ah, that’s what I was missing. Then yes, it’s correct behaviour, nothing to fix.

Still, none of the duplicacy flags causes the list of processed files to be logged, not even -d. That’s a separate issue, then.

Demo:

% touch meow{20..30}{10..50}.txt

% duplicacy -d backup -stats

Storage set to /tmp/2

Chunk read levels: [1], write level: 1

Compression level: 100

Average chunk size: 4194304

Maximum chunk size: 16777216

Minimum chunk size: 1048576

Chunk seed: 6475706c6963616379

Hash key: 6475706c6963616379

ID key: 6475706c6963616379

top: /tmp/1, quick: true, tag:

Downloading latest revision for snapshot ddd

Listing revisions for snapshot ddd

Downloaded file snapshots/ddd/6

Last backup at revision 6 found

Chunk ba1e216d4b7fd6d034019fd6ac7cea35c63227b10ce71a516e1359d0576386e2 has been loaded from the snapshot cache

Chunk 3bd1332085ddf729fee230ee55b3772a034aceae063b274c97b0f32d5f35178d has been loaded from the snapshot cache

Chunk ba1e216d4b7fd6d034019fd6ac7cea35c63227b10ce71a516e1359d0576386e2 has been loaded from the snapshot cache

Indexing /tmp/1

Parsing filter file /tmp/1/.duplicacy/filters

There are 0 compiled regular expressions stored

Loaded 0 include/exclude pattern(s)

Listing

Chunk 15f383e593b8bf660e7844fd94d3a9b184099cb3ffc46b36690454a0dc826bf1 has been downloaded

Skipped metadata chunk ba1e216d4b7fd6d034019fd6ac7cea35c63227b10ce71a516e1359d0576386e2 in cache

Skipped metadata chunk 3bd1332085ddf729fee230ee55b3772a034aceae063b274c97b0f32d5f35178d in cache

Chunk 0aae60bebc8f838b9b509e08bf750902e2f5b8fb9340d74a6e2d897ef9a4dc00 has been uploaded

Uploaded file snapshots/ddd/7

Chunk ba1e216d4b7fd6d034019fd6ac7cea35c63227b10ce71a516e1359d0576386e2 has been loaded from the snapshot cache

Listing snapshots/

Listing snapshots/ddd/

Delete cached snapshot file ddd/6 not found in the storage

Listing chunks/

Listing chunks/ba/

Listing chunks/8d/

Listing chunks/7e/

Listing chunks/78/

Listing chunks/45/

Listing chunks/3b/

Listing chunks/15/

Delete chunk 15f383e593b8bf660e7844fd94d3a9b184099cb3ffc46b36690454a0dc826bf1 from the snapshot cache

Backup for /tmp/1 at revision 7 completed

Files: 1414 total, 0 bytes; 0 new, 0 bytes

File chunks: 0 total, 0 bytes; 0 new, 0 bytes, 0 bytes uploaded

Metadata chunks: 3 total, 38K bytes; 1 new, 38K bytes, 7K bytes uploaded

All chunks: 3 total, 38K bytes; 1 new, 38K bytes, 7K bytes uploaded

Total running time: 00:00:01

How do I see files that should have been picked up?
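Until the log itself shows this, a rough approximation can be done outside of duplicacy. The sketch below walks a directory and lists files whose modification time is later than a given cutoff (for example, the time of the previous backup). It assumes change detection by timestamp only, which merely approximates whatever duplicacy does internally; `files_modified_since` and its parameters are hypothetical names, not part of duplicacy.

```python
import os


def files_modified_since(root, cutoff_epoch):
    """Return sorted paths (relative to root) whose mtime is later than cutoff_epoch.

    Assumption: a newer modification time is a good-enough stand-in for
    "would be picked up by the next backup". This is not duplicacy's own logic.
    """
    changed = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            full = os.path.join(dirpath, name)
            try:
                mtime = os.lstat(full).st_mtime
            except OSError:
                # File vanished or is unreadable; skip it.
                continue
            if mtime > cutoff_epoch:
                changed.append(os.path.relpath(full, root))
    return sorted(changed)
```

Calling `files_modified_since("/tmp/1", cutoff)` with the previous backup's timestamp as `cutoff` would print the candidates; filters in `.duplicacy/filters` are not taken into account here.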

Good point. Agreed. But it can be mitigated by correct wording: “Processed” as opposed to “Uploaded”.

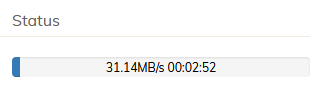

Well, I kill it if I notice it (duplicacy can’t throttle, so it always runs at full speed and is therefore very noticeable) and I can’t easily see that it isn’t stuck.

Agreed in general, but these are different circumstances. The web UI provides a UI for showing logs from the duplicacy CLI, so the expectation is that those logs reflect the current status. If the logs are empty for three hours, the app is either stuck or has a logging bug.
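The "quiet log means stuck" reasoning is essentially a staleness check on the log file. A minimal sketch, assuming the log is a regular file whose modification time advances whenever a line is written; the function names and the threshold are hypothetical, and a quiet log is only a hint, not proof, that the process is stuck:

```python
import os
import time


def seconds_since_last_write(log_path, now=None):
    """Return how many seconds have passed since the log file was last modified."""
    if now is None:
        now = time.time()
    return now - os.path.getmtime(log_path)


def looks_stuck(log_path, max_idle_seconds, now=None):
    """Heuristic: True if nothing has been written for longer than max_idle_seconds."""
    return seconds_since_last_write(log_path, now) > max_idle_seconds
```

With a three-hour threshold this would be `looks_stuck(path, 3 * 60 * 60)`. It cannot tell "stuck" apart from "busy but silent", which is exactly the problem with a log that shows no progress.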

I don’t share the faith in the infallibility of duplicacy. If it sits there chewing through CPU with no indication of progress (well, the progress bar you posted is indeed helpful), it gets murdered.

To go deeper into the woods – I would send a SIGINFO to the process (Control+T on FreeBSD and macOS) and expect current status. Duplicacy ignores SIGINFO. So how am I supposed to know it’s not stuck?
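For illustration, this is roughly what I would expect a long-running tool to do when it receives SIGINFO. It is a generic Python sketch, not duplicacy's code (duplicacy is not written in Python and, as noted, ignores the signal). `progress`, `format_status`, `report_status` and `install_status_handler` are made-up names. SIGINFO only exists on BSD and macOS, so the sketch assumes a fallback to SIGUSR1 on other Unix-like systems.

```python
import signal
import sys

# SIGINFO (Control+T) exists on BSD and macOS only; fall back to SIGUSR1
# elsewhere. Assumption: a Unix-like system where SIGUSR1 is available.
STATUS_SIGNAL = getattr(signal, "SIGINFO", signal.SIGUSR1)

# Hypothetical shared state that the main work loop would keep up to date.
progress = {"files_done": 0, "files_total": 0, "current": ""}


def format_status(state):
    """Build a one-line status message from the progress state."""
    return "{files_done}/{files_total} files, current: {current}".format(**state)


def report_status(signum, frame):
    """Signal handler: print the current status to stderr."""
    sys.stderr.write(format_status(progress) + "\n")
    sys.stderr.flush()


def install_status_handler():
    """Register the handler; must be called from the main thread."""
    signal.signal(STATUS_SIGNAL, report_status)
```

The work loop would call `install_status_handler()` once at startup and update `progress` as it goes; pressing Control+T (or sending the fallback signal with `kill`) then prints a single status line without interrupting the work.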

If I’m very persistent, I would run spindump (or whatever the analogue is on Linux) on the process to see what’s going on, out of curiosity, but I would not expect most users to bother.

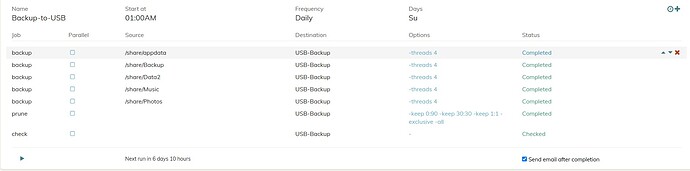

Either way, the progress part seems to be addressed in the web UI via a progress bar. I don’t know how well it tracks actual progress, but it’s there.