Yes, I was running that but that’s because I was told earlier in the thread that it might fix the problem. When I first created this thread, I did not have any options in my backups - they were all default. I can run it again without the -threads 8 option and run the -d option so you can see the full log without it.

I think that recommendation was based on how ridiculously long it took to list revisions the first time. The second time (in the full log posted) most of it was cached and it took just a minute or two, so this rules out the suspected issue of, say, a token expiring during transfer.

How do you have things set up? One storage and multiple repositories targeting that storage? Or multiple storages? Are they all set up with the same token file?

Can you list the contents of that repository using a different tool, like rclone?

I have eight external hard drives (in two four-slot enclosures) set to back up to one folder on my Google Drive. Five of the drives work with no problem. Three of them have the same issue: the backup fails in exactly the same way. I have no idea what is different about those three drives to make them fail while the other five work with no problem. I am able to access those three drives through other means so they don’t seem to be corrupt or broken.

I am on a Mac and I don’t really know much about this stuff at all. I found Duplicacy after having trouble with Arq Backup and set it up with basic default options. I just connected my Google Drive and added my hard drives. I don’t have any options set up and I haven’t tried to use anything else.

The question to me seems to be why does Google connect with those five drives, but refuses to connect with the other three? What is going on with those three drives to make Google fail on them? (I am currently backing up one of the five drives that works and I’m getting no error from Google)

If you add -d as a global option to enable debug level logging and post the log here we might be able to figure out what went wrong.

There is one up above but it was created with the -threads 8 option added. Here is a new one without any options added: http://nathansmart.com/show_log2.txt

It looks like Google drops the connection after 30 seconds of listing files:

2021-04-17 06:41:26.330 INFO BACKUP_LIST Listing all chunks

2021-04-17 06:41:26.331 TRACE LIST_FILES Listing chunks/

2021-04-17 06:41:56.906 DEBUG GCD_RETRY [0] Get....
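For anyone who wants to check the "30 seconds" figure, here is a minimal Python sketch that computes the gap between the `LIST_FILES` line and the first `GCD_RETRY` line quoted above. The helper name `gap_seconds` is made up for illustration; the timestamps are copied from the log lines.

```python
from datetime import datetime

# Timestamp layout used in the log lines quoted above.
FMT = "%Y-%m-%d %H:%M:%S.%f"


def gap_seconds(start: str, end: str) -> float:
    """Return the number of seconds between two log timestamps."""
    return (datetime.strptime(end, FMT) - datetime.strptime(start, FMT)).total_seconds()


listing_started = "2021-04-17 06:41:26.331"  # TRACE LIST_FILES Listing chunks/
first_retry = "2021-04-17 06:41:56.906"      # DEBUG GCD_RETRY [0]

print(round(gap_seconds(listing_started, first_retry), 3))  # 30.575
```

So the first retry shows up about 30.6 seconds after the listing starts.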

Based on the size of the repo there would be a massive number of chunks. Perhaps there should be (or maybe already is) a way to retrieve the list in pieces?

Does the number of chunks correspond with the number of files on a hard drive? Or the total size of the hard drive? How does Duplicacy decide how many chunks are in a repo?

EDIT: I read the article above and I see there is an algorithm but it still doesn’t make sense to me why bigger drives with more files are being allowed by Google, but not these drives.

Your screenshot showed one backup failed while the other one was running. Is the other one still running? If so then it could be that Google is rate limiting your API calls when there is one running backup. I would wait until that backup finishes before starting a new one.

Like I said in that same reply, no. I am only running one at a time. In the past, I have run more than one at a time and Google connects just fine - but only on the drives that work. I have three specific drives that fail no matter how I run them. I’m just trying to figure out why those three drives fail every time and the other five that I have never fail.

The logs that I have uploaded here are all run alone by themselves with nothing else running.

The error occurs during the chunk listing phase. Only an initial backup needs to list existing chunks on the storage. There could be two reasons why other backups are working: either they don’t have as many chunks in the storage, or they are not initial backups (that is, backups with a revision number greater than 1).

I don’t know why Google’s servers keep dropping the connection if you’re not running other backups at the same time. Can you check the memory usage? High memory usage could significantly slow down the API calls, which may cause Google to give up too quickly.


Does that mean that there’s nothing I can do since I can’t change the number of chunks it’s trying to list? (The other drives that work are much bigger. Does that make a difference in the number of chunks it tries to list? Can I move stuff around to different drives to fix that?)


How do I check memory usage?

Do I understand correctly that any initial backup of a new repository into existing storage will need to fetch a list of all chunks? This can be a ridiculous number of files, and unless listing can be done in batches (can it?) it would be prone to failure, effectively meaning you cannot add another repo to a huge datastore.
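To illustrate what "listing in batches" could look like in general, here is a minimal Python sketch of a paginated listing where each page is retried on its own, so one dropped connection does not throw away the pages already received. This is not Duplicacy's code or Google's API: `list_in_pages`, `fetch_page`, and `make_fake_backend` are hypothetical names, and the backend is an in-memory stand-in.

```python
import time
from typing import Callable, Iterator, List, Optional, Tuple

# A page is (names on this page, token for the next page or None when done).
Page = Tuple[List[str], Optional[str]]


def list_in_pages(
    fetch_page: Callable[[Optional[str]], Page],
    max_retries: int = 3,
    backoff: float = 0.0,  # seconds; 0 keeps the demo instant
) -> Iterator[str]:
    """Yield every name, fetching one page at a time and retrying per page."""
    token: Optional[str] = None
    while True:
        for attempt in range(max_retries + 1):
            try:
                names, next_token = fetch_page(token)
                break
            except ConnectionError:
                if attempt == max_retries:
                    raise  # give up only after this one page keeps failing
                time.sleep(backoff * (2 ** attempt))
        yield from names
        if next_token is None:
            return
        token = next_token


def make_fake_backend(all_names: List[str], page_size: int, fail_first_n: int = 0):
    """Hypothetical in-memory stand-in for a storage listing endpoint."""
    state = {"failures_left": fail_first_n}

    def fetch_page(token: Optional[str]) -> Page:
        if state["failures_left"] > 0:
            state["failures_left"] -= 1
            raise ConnectionError("simulated dropped connection")
        start = int(token) if token else 0
        end = start + page_size
        next_token = str(end) if end < len(all_names) else None
        return all_names[start:end], next_token

    return fetch_page


chunks = [f"chunk{i:03d}" for i in range(10)]
listed = list(list_in_pages(make_fake_backend(chunks, page_size=4, fail_first_n=2)))
print(len(listed))  # 10
```

In the demo the first two requests fail and are retried, and all ten names still come back in order.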

Why is listing chunks needed in the first place? Why is an initial backup treated any differently from subsequent ones? It seems that if it proceeded just like a new backup into an empty datastore it would just work: duplicate chunks won’t get reuploaded.

Am I missing something?

On macOS you can run top in Terminal to find out the memory usage. The thing to look for is that the amount of memory used by the Duplicacy CLI executable should be less than the amount of physical memory.
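If reading `top` by eye is awkward, a small script can pull out just the relevant process. Below is a rough Python sketch that parses the output of `ps -axo rss,comm` and reports resident memory in MB. The assumption that the executable's path contains the word "duplicacy" is mine, and the sample text is made-up data that only shows the format, not a real measurement.

```python
import subprocess
from typing import List, Tuple


def parse_ps(output: str, needle: str = "duplicacy") -> List[Tuple[str, float]]:
    """Return (command, resident memory in MB) for commands containing `needle`."""
    matches = []
    for line in output.splitlines():
        parts = line.strip().split(None, 1)
        # Skip the header and anything that is not "<rss> <command>".
        if len(parts) != 2 or not parts[0].isdigit():
            continue
        rss_kb, command = int(parts[0]), parts[1]  # ps reports rss in 1024-byte units
        if needle.lower() in command.lower():
            matches.append((command, rss_kb / 1024))
    return matches


def duplicacy_memory() -> List[Tuple[str, float]]:
    """Ask ps for every process and keep the ones that look like Duplicacy."""
    out = subprocess.run(
        ["ps", "-axo", "rss,comm"], capture_output=True, text=True, check=True
    ).stdout
    return parse_ps(out)


# Made-up sample in the same shape as the ps output, for illustration only.
sample = """  RSS COMM
 1024 /usr/sbin/syslogd
524288 /path/to/duplicacy_cli
"""
print(parse_ps(sample))  # [('/path/to/duplicacy_cli', 512.0)]
```

To use it for real, start the backup and call `duplicacy_memory()` every few seconds while the log shows "Listing all chunks", then compare the number against the machine's physical memory.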

Listing chunks is needed so an initial backup doesn’t need to check the existence of a chunk before uploading it. A subsequent one assumes that all chunks referenced by the previous backup always exist.

We could have made Duplicacy continue to back up even after a listing error, but in this case it won’t help much – it took more than 8 hours to list the chunks directory and fail, which means there must be something wrong.

Wouldn’t it be better to check each chunk for existence before uploading it? This would spread out the workload and hide this initial latency entirely.

In the second log, the failure happened after 30 seconds, not 8 hours.
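To make the trade-off between the two approaches discussed above concrete, here is a toy Python sketch that counts storage requests for each. It is only a model under my own assumptions (a Python set stands in for the storage, and the function names are invented); it says nothing about how Duplicacy is actually implemented.

```python
from typing import Dict, List, Set


def upload_with_listing(storage: Set[str], new_chunks: List[str]) -> Dict[str, int]:
    """One full listing up front, then no per-chunk lookups."""
    known = set(storage)  # stands in for a single listing of chunks/
    counts = {"list_calls": 1, "exists_calls": 0, "uploads": 0}
    for chunk in new_chunks:
        if chunk not in known:
            storage.add(chunk)
            known.add(chunk)
            counts["uploads"] += 1
    return counts


def upload_with_exists_check(storage: Set[str], new_chunks: List[str]) -> Dict[str, int]:
    """No listing; ask the storage about each chunk right before uploading it."""
    counts = {"list_calls": 0, "exists_calls": 0, "uploads": 0}
    for chunk in new_chunks:
        counts["exists_calls"] += 1  # one extra request per chunk
        if chunk not in storage:
            storage.add(chunk)
            counts["uploads"] += 1
    return counts


existing = {"a", "b", "c"}
to_back_up = ["b", "c", "d", "e"]

by_listing = upload_with_listing(set(existing), to_back_up)
by_checking = upload_with_exists_check(set(existing), to_back_up)
print(by_listing)   # {'list_calls': 1, 'exists_calls': 0, 'uploads': 2}
print(by_checking)  # {'list_calls': 0, 'exists_calls': 4, 'uploads': 2}
```

Both variants upload the same two chunks. The difference is where the cost goes: one large listing request before anything is uploaded, or one small extra request per chunk spread over the whole backup.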

So, I guess I just can’t back up these specific drives?

Did you figure out what the memory usage was during the chunk listing phase?

How do I do that? Can you give me a little tutorial about it? I see that I can run “top” in Terminal but I don’t know how to do that. I know how to open Terminal and run commands that I copy and paste from online but what do I type to find out memory usage for Duplicacy? Do I run a backup for that drive and then open Terminal and type “top”? and if it’s happening in 8 hours (or in 30 seconds as saspus points out) when would I run it and find the exact moment that memory usage is too much? Or does it just list out all of the memory usage while it’s running?

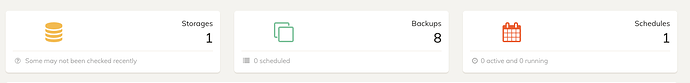

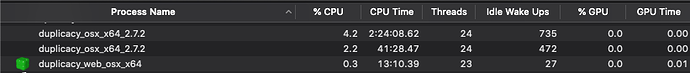

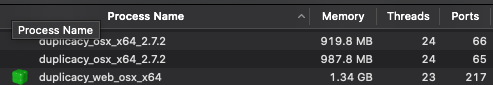

Okay, I ran it and I see a bunch of programs listed. It looks like the information in Activity Monitor on the Mac. Is that the same thing? I can’t really read what I’m seeing because lots of stuff is happening. Here is what it looks like in Activity Monitor under CPU:

And here is what it looks like under Memory:

I currently have it backing up two of the drives that work.

For reference, I have 32 GB of memory.

I got a new hard drive, so I wanted to test whether reformatting one of the drives that isn’t working would fix the problem. I moved everything over, put a couple of files on the old drive, and ran it. It failed again and here is the log. Looks like all the same stuff. I just can’t figure out what is happening with these specific drives. Why is Google letting me back up stuff from some hard drives but not others?

log.txt (98.7 KB)

EDIT: I just tried to back up the newest drive I purchased and added today and it is also failing with the same results. Honestly, it seems like drives that I have already completed backups on are working and drives that haven’t been backed up before are failing. Seems like I just can’t add any more drives to Duplicacy.

I am testing out Arq Backup right now on the non-working drives to see if they will upload using another software. I tried Duplicati but I’m going with Arq because I know that’s worked in the past.