I’m wondering if we are seeing some side effects of

Today we are also enabling the new upload code in gateway-mt

In that thread they’ve also found and fixed a memory leak in uplink.

Ok, I’ve reproduced modem death:

Duplicacy log:

truenas% ../duplicacy_main -d check -chunks -threads 40 -storage duplicacy-storj | tee check-chunks-40-native-large.log

Storage set to storj://12EayRS2V1kEsWESU9QMRseFhdxYxKicsiFmxrsLZHeLUtdps3S@us1.storj.io:7777/duplicacy/duplicacy

...

Total chunk size is 67,721M in 13955 chunks

...

Verified chunk ea416b05b7655ce3b4abcca65a7d052466ff015068d9336e8f86be9ecf028dc1 (9249/13954), 43.45MB/s 00:08:48 66.3%

Chunk 1f6b38cb25dd008c36df92076a6dff758bb470fc258c572d145a9585220ae6a7 has been downloaded

Verified chunk 1f6b38cb25dd008c36df92076a6dff758bb470fc258c572d145a9585220ae6a7 (9250/13954), 43.46MB/s 00:08:48 66.3%

Chunk 2ac724f49e7a469351dcc1739f92342b68c2af6caf811acd6e25087a33cfa329 has been downloaded

Verified chunk 2ac724f49e7a469351dcc1739f92342b68c2af6caf811acd6e25087a33cfa329 (9251/13954), 43.45MB/s 00:08:48 66.3%

Chunk 3990e5490c6602755c680899494066a7ba86298c65ed3ff4030295582cbcda69 has been downloaded

Verified chunk 3990e5490c6602755c680899494066a7ba86298c65ed3ff4030295582cbcda69 (9252/13954), 43.46MB/s 00:08:48 66.3%

Failed to download the chunk 1dbb028c9ca6ba668e7c02c55bef3e895bd2bab9ac992006a958d3de37c538db: uplink: metaclient: rpc: tcp connector failed: rpc: context deadline exceeded

Added 9252 chunks to the list of verified chunks

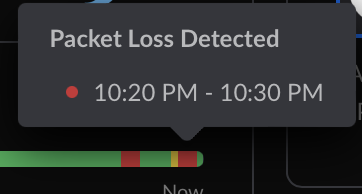

Ubiquiti log:

Today at 9:06 PM

Your primary internet Comcast Cable was disconnected and has been restored.

Internet health:

It just murders my connection completely. I got notifications from my monitoring services that the services I host at home were down:

At the same time, internal services such as UniFi Protect kept working (my cameras, on a different virtual network, continued recording), so the UDMP is not the culprit (not that I doubted it, but it’s another confirmation).

This leaves the POS modem as the culprit.

Now there are a few avenues for further investigation:

Running it on Oracle Cloud succeeded. I had to increase ulimit -n to 10000; however, the number of concurrent established connections, checked every second with `while true; do netstat -an | grep ESTABLISHED | wc -l; sleep 1; done`, never exceeded 1800:

[opc@podman test-duplicacy]$ ulimit -n 10000

[opc@podman test-duplicacy]$ ./duplicacy_linux_arm64_3.1.0 -d check -chunks -threads 40 -storage duplicacy-storj | tee check-chunks-40-native-large-oracle.log

...

Verified chunk 4b074c82202db17907e038e83d1478dfdcfb30f91be92a7e7cc9aa5f3d87154c (13954/13954), 32.27MB/s 00:00:00 100.0%

All 13954 chunks have been successfully verified

Added 13954 chunks to the list of verified chunks

[opc@podman test-duplicacy]$

At the same time, I ran another check session on my local server with the same command as before. This time it ran faster, at 47.42MB/s, and the number of concurrent connections did not exceed 1650. The check also completed successfully:

../duplicacy_main -d check -chunks -threads 40 -storage duplicacy-storj

...

Verified chunk ac4720cc33359301c85269a8915d808d0b50a7f1a764052f75120a07287d4355 (10611/10611), 47.55MB/s 00:00:00 100.0%

All 13954 chunks have been successfully verified

Added 13954 chunks to the list of verified chunks

It’s now 10:30 PM, and I’m wondering whether network utilization somewhere along the path is lower at this hour, so requests complete faster and connections don’t accumulate as much. Ubiquiti still reported packet loss during that time, and nobody was able to connect to my services from outside, but perhaps not enough to cause failures in Duplicacy.

I’ll try the local test again tomorrow at 9 PM while monitoring the number of connections.
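For repeating that measurement, here is a minimal Python sketch of the same loop as the `netstat -an | grep ESTABLISHED | wc -l` one-liner, which also prints the open-file soft limit inherited from the launching shell (every socket consumes a file descriptor, and that limit is what `ulimit -n` raises). It assumes `netstat -an` prints the TCP state as its own whitespace-separated column, as on Linux and FreeBSD; `count_established` and `sample` are hypothetical helper names.

```python
import resource
import subprocess
import time


def count_established(netstat_output: str) -> int:
    """Count lines whose state column reads ESTABLISHED.

    Like `netstat -an | grep ESTABLISHED | wc -l`, but matches the whole
    column instead of any substring of the line.
    """
    return sum(1 for line in netstat_output.splitlines()
               if "ESTABLISHED" in line.split())


def sample(seconds: int = 10) -> None:
    """Print the established-connection count once per second."""
    # Soft limit on open file descriptors (the value `ulimit -n` reports).
    soft, _hard = resource.getrlimit(resource.RLIMIT_NOFILE)
    for _ in range(seconds):
        out = subprocess.run(["netstat", "-an"], capture_output=True,
                             text=True, check=True).stdout
        print(f"established={count_established(out)} nofile_limit={soft}")
        time.sleep(1)
```

Calling `sample(60)` in a second terminal while `duplicacy check` runs gives one line per second. Like the original one-liner, it counts every established connection on the machine, not only Duplicacy's.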

Another interesting question is why TCP connections are used at all. The vast majority of nodes support QUIC.

I’ll investigate, and will also try the same from macOS.

Looking at the number of UDP and TCP packets sent and received during a multithreaded Duplicacy download, I see very little UDP activity, but at peak over 60k TCP packets received and 30k sent per second.

This can’t end well, especially if some peers are not responding quickly.

Why is it using TCP connections in the first place? Storj is supposed to work over QUIC, i.e. over UDP.

```
#!/usr/sbin/dtrace -qs
/*
 * Count UDP and TCP packets and payload bytes sent and received,
 * printing and resetting the totals once per second.
 */

BEGIN
{
	printf("Printing packets and bytes sent and received per second\n");
	udp_count_sent = 0;
	udp_count_rcvd = 0;
	udp_bytes_sent = 0;
	udp_bytes_rcvd = 0;
	tcp_count_sent = 0;
	tcp_count_rcvd = 0;
	tcp_bytes_sent = 0;
	tcp_bytes_rcvd = 0;
}

/* args[4] is udpinfo_t; udp_length is the UDP length field (header + data). */
udp:::send
{
	udp_count_sent += 1;
	udp_bytes_sent += args[4]->udp_length;
}

udp:::receive
{
	udp_count_rcvd += 1;
	udp_bytes_rcvd += args[4]->udp_length;
}

/* args[2] is ipinfo_t; ip_plength is the IP payload (TCP header + data). */
tcp:::send
{
	tcp_count_sent += 1;
	tcp_bytes_sent += args[2]->ip_plength;
}

tcp:::receive
{
	tcp_count_rcvd += 1;
	tcp_bytes_rcvd += args[2]->ip_plength;
}

/* Print the per-second totals, then reset them for the next interval. */
tick-1sec
{
	printf("udp: >> %6d (%10d bytes), << %6d (%10d bytes)\n",
	    udp_count_sent, udp_bytes_sent, udp_count_rcvd, udp_bytes_rcvd);
	printf("tcp: >> %6d (%10d bytes), << %6d (%10d bytes)\n",
	    tcp_count_sent, tcp_bytes_sent, tcp_count_rcvd, tcp_bytes_rcvd);
	udp_count_sent = 0;
	udp_count_rcvd = 0;
	udp_bytes_sent = 0;
	udp_bytes_rcvd = 0;
	tcp_count_sent = 0;
	tcp_count_rcvd = 0;
	tcp_bytes_sent = 0;
	tcp_bytes_rcvd = 0;
}
```

truenas% sudo ./udp.d

Printing packets and bytes sent and received per second

udp: >> 186 ( 95845 bytes), << 319 ( 328253 bytes)

tcp: >> 1054 ( 368336 bytes), << 1703 ( 1902650 bytes)

udp: >> 43 ( 47467 bytes), << 39 ( 10956 bytes)

tcp: >> 930 ( 534539 bytes), << 1308 ( 1271382 bytes)

udp: >> 79 ( 9598 bytes), << 143 ( 180265 bytes)

tcp: >> 1331 ( 1105769 bytes), << 1268 ( 1222994 bytes)

udp: >> 98 ( 27579 bytes), << 185 ( 220446 bytes)

tcp: >> 968 ( 560523 bytes), << 1335 ( 1302560 bytes)

udp: >> 93 ( 8726 bytes), << 171 ( 168801 bytes)

tcp: >> 1557 ( 855320 bytes), << 2089 ( 2339988 bytes)

udp: >> 160 ( 26198 bytes), << 277 ( 320532 bytes)

tcp: >> 1442 ( 1009272 bytes), << 1852 ( 1830740 bytes)

udp: >> 201 ( 70721 bytes), << 346 ( 416453 bytes)

tcp: >> 1286 ( 738573 bytes), << 1785 ( 1795193 bytes)

udp: >> 240 ( 63831 bytes), << 408 ( 500132 bytes)

tcp: >> 1187 ( 561221 bytes), << 1743 ( 1805061 bytes)

udp: >> 429 ( 255725 bytes), << 638 ( 741725 bytes)

tcp: >> 1621 ( 676433 bytes), << 2493 ( 2925412 bytes)

udp: >> 122 ( 42532 bytes), << 147 ( 154914 bytes)

tcp: >> 2770 ( 2387978 bytes), << 2351 ( 1600099 bytes)

udp: >> 84 ( 16293 bytes), << 145 ( 142717 bytes)

tcp: >> 5841 ( 6429401 bytes), << 3545 ( 1469891 bytes)

udp: >> 34 ( 7863 bytes), << 39 ( 21356 bytes)

tcp: >> 8844 ( 10234680 bytes), << 4961 ( 1628375 bytes)

udp: >> 52 ( 7046 bytes), << 97 ( 93041 bytes)

tcp: >> 10322 ( 11902005 bytes), << 6124 ( 2354127 bytes)

udp: >> 172 ( 81979 bytes), << 257 ( 272074 bytes)

tcp: >> 10940 ( 12669911 bytes), << 6359 ( 2250275 bytes)

udp: >> 507 ( 518177 bytes), << 451 ( 375965 bytes)

tcp: >> 7146 ( 7800666 bytes), << 4461 ( 2118071 bytes)

udp: >> 543 ( 484423 bytes), << 609 ( 590903 bytes)

tcp: >> 8869 ( 9957246 bytes), << 5477 ( 2353450 bytes)

udp: >> 818 ( 934425 bytes), << 753 ( 508856 bytes)

tcp: >> 10178 ( 11311536 bytes), << 6401 ( 2976690 bytes)

udp: >> 694 ( 741531 bytes), << 643 ( 535116 bytes)

tcp: >> 8513 ( 9428805 bytes), << 5151 ( 2379337 bytes)

udp: >> 505 ( 432781 bytes), << 556 ( 621025 bytes)

tcp: >> 10079 ( 11470817 bytes), << 5775 ( 2307926 bytes)

udp: >> 512 ( 642465 bytes), << 262 ( 95972 bytes)

tcp: >> 10425 ( 12069564 bytes), << 5619 ( 1792686 bytes)

udp: >> 603 ( 690062 bytes), << 522 ( 279910 bytes)

tcp: >> 7897 ( 8523969 bytes), << 4857 ( 2532763 bytes)

udp: >> 537 ( 742045 bytes), << 458 ( 84616 bytes)

tcp: >> 7908 ( 9353648 bytes), << 3982 ( 1945933 bytes)

udp: >> 711 ( 968198 bytes), << 364 ( 113005 bytes)

tcp: >> 2607 ( 2756724 bytes), << 2110 ( 1499416 bytes)

udp: >> 628 ( 859359 bytes), << 203 ( 73458 bytes)

tcp: >> 2431 ( 2339372 bytes), << 2121 ( 1433577 bytes)

udp: >> 689 ( 937198 bytes), << 276 ( 43322 bytes)

tcp: >> 4141 ( 2518638 bytes), << 5169 ( 5802361 bytes)

udp: >> 497 ( 639767 bytes), << 295 ( 98524 bytes)

tcp: >> 15227 ( 2379728 bytes), << 27796 ( 40316431 bytes)

udp: >> 511 ( 580885 bytes), << 328 ( 301105 bytes)

tcp: >> 8838 ( 6005310 bytes), << 9474 ( 11287877 bytes)

udp: >> 440 ( 514409 bytes), << 254 ( 225199 bytes)

tcp: >> 8354 ( 9755266 bytes), << 3519 ( 1936462 bytes)

udp: >> 434 ( 437549 bytes), << 324 ( 357289 bytes)

tcp: >> 5684 ( 1255525 bytes), << 8917 ( 10452962 bytes)

udp: >> 402 ( 452937 bytes), << 150 ( 90154 bytes)

tcp: >> 2872 ( 743187 bytes), << 4196 ( 4600774 bytes)

udp: >> 145 ( 86237 bytes), << 193 ( 217529 bytes)

tcp: >> 3648 ( 601781 bytes), << 5704 ( 6195227 bytes)

udp: >> 115 ( 75547 bytes), << 130 ( 145554 bytes)

tcp: >> 3614 ( 414351 bytes), << 5981 ( 6947809 bytes)

udp: >> 318 ( 343830 bytes), << 211 ( 216817 bytes)

tcp: >> 1198 ( 381424 bytes), << 1883 ( 2415870 bytes)

udp: >> 279 ( 229227 bytes), << 364 ( 326928 bytes)

tcp: >> 1082 ( 241788 bytes), << 1918 ( 2353281 bytes)

udp: >> 230 ( 30937 bytes), << 398 ( 515733 bytes)

tcp: >> 908 ( 37167 bytes), << 1745 ( 2245863 bytes)

udp: >> 352 ( 228898 bytes), << 512 ( 536851 bytes)

tcp: >> 1103 ( 247432 bytes), << 1944 ( 2362320 bytes)

udp: >> 156 ( 15584 bytes), << 267 ( 316137 bytes)

tcp: >> 985 ( 43730 bytes), << 1910 ( 2547067 bytes)

udp: >> 94 ( 10603 bytes), << 168 ( 214086 bytes)

tcp: >> 2060 ( 1572691 bytes), << 1919 ( 2018127 bytes)

udp: >> 88 ( 14589 bytes), << 152 ( 183689 bytes)

tcp: >> 552 ( 26623 bytes), << 1043 ( 1271345 bytes)

udp: >> 168 ( 24301 bytes), << 280 ( 342927 bytes)

tcp: >> 967 ( 324955 bytes), << 1486 ( 1763710 bytes)

udp: >> 131 ( 15609 bytes), << 233 ( 289993 bytes)

tcp: >> 1925 ( 1016946 bytes), << 2496 ( 2834887 bytes)

udp: >> 84 ( 9835 bytes), << 140 ( 169668 bytes)

tcp: >> 3297 ( 1051919 bytes), << 4823 ( 5522150 bytes)

udp: >> 171 ( 20002 bytes), << 315 ( 400877 bytes)

tcp: >> 1634 ( 854692 bytes), << 2188 ( 2297036 bytes)

udp: >> 299 ( 178502 bytes), << 385 ( 430308 bytes)

tcp: >> 1856 ( 945903 bytes), << 2574 ( 2698362 bytes)

udp: >> 261 ( 62269 bytes), << 455 ( 534830 bytes)

tcp: >> 2717 ( 1898582 bytes), << 3014 ( 3297335 bytes)

udp: >> 209 ( 44710 bytes), << 373 ( 494698 bytes)

tcp: >> 5760 ( 1242553 bytes), << 8782 ( 10845527 bytes)

udp: >> 192 ( 62353 bytes), << 326 ( 403860 bytes)

tcp: >> 1939 ( 652662 bytes), << 2793 ( 2793581 bytes)

udp: >> 179 ( 53714 bytes), << 301 ( 372618 bytes)

tcp: >> 2251 ( 1825849 bytes), << 2106 ( 2164722 bytes)

udp: >> 180 ( 56658 bytes), << 315 ( 408733 bytes)

tcp: >> 3294 ( 518476 bytes), << 5977 ( 8026640 bytes)

udp: >> 207 ( 56892 bytes), << 305 ( 374526 bytes)

tcp: >> 1681 ( 910321 bytes), << 2215 ( 2348771 bytes)

udp: >> 170 ( 54467 bytes), << 289 ( 367526 bytes)

tcp: >> 1446 ( 541875 bytes), << 2163 ( 2379618 bytes)

udp: >> 302 ( 80968 bytes), << 500 ( 608741 bytes)

tcp: >> 4922 ( 1740629 bytes), << 7416 ( 9843383 bytes)

udp: >> 216 ( 23712 bytes), << 406 ( 553958 bytes)

tcp: >> 2564 ( 631254 bytes), << 3831 ( 4149128 bytes)

udp: >> 214 ( 22952 bytes), << 385 ( 522218 bytes)

tcp: >> 1351 ( 405349 bytes), << 2145 ( 2443302 bytes)

udp: >> 315 ( 38169 bytes), << 564 ( 758881 bytes)

tcp: >> 1358 ( 435451 bytes), << 2094 ( 2296493 bytes)

udp: >> 225 ( 23245 bytes), << 423 ( 563985 bytes)

tcp: >> 1286 ( 401811 bytes), << 2048 ( 2283411 bytes)

udp: >> 321 ( 150279 bytes), << 346 ( 463445 bytes)

tcp: >> 1512 ( 396082 bytes), << 2476 ( 2983933 bytes)

udp: >> 262 ( 29213 bytes), << 464 ( 627233 bytes)

tcp: >> 1360 ( 440149 bytes), << 2096 ( 2348326 bytes)

udp: >> 211 ( 30031 bytes), << 395 ( 515066 bytes)

tcp: >> 1226 ( 584084 bytes), << 1726 ( 1782447 bytes)

udp: >> 340 ( 48374 bytes), << 621 ( 827943 bytes)

tcp: >> 3086 ( 486896 bytes), << 4870 ( 5390337 bytes)

udp: >> 370 ( 217623 bytes), << 522 ( 620790 bytes)

tcp: >> 2978 ( 485546 bytes), << 4655 ( 5581036 bytes)

udp: >> 526 ( 503049 bytes), << 408 ( 501541 bytes)

tcp: >> 2176 ( 699966 bytes), << 3068 ( 3350240 bytes)

udp: >> 353 ( 208584 bytes), << 451 ( 580298 bytes)

tcp: >> 2508 ( 547900 bytes), << 3798 ( 4054317 bytes)

udp: >> 446 ( 432488 bytes), << 419 ( 428059 bytes)

tcp: >> 1268 ( 732916 bytes), << 1687 ( 1775660 bytes)

udp: >> 305 ( 34452 bytes), << 566 ( 717829 bytes)

tcp: >> 1680 ( 445890 bytes), << 2650 ( 3091938 bytes)

udp: >> 331 ( 176833 bytes), << 459 ( 561966 bytes)

tcp: >> 1832 ( 528260 bytes), << 2668 ( 2964564 bytes)

udp: >> 202 ( 153302 bytes), << 178 ( 148942 bytes)

tcp: >> 2861 ( 658874 bytes), << 3985 ( 4321116 bytes)

udp: >> 175 ( 63369 bytes), << 286 ( 361665 bytes)

tcp: >> 2492 ( 844119 bytes), << 3383 ( 3564076 bytes)

udp: >> 381 ( 340426 bytes), << 403 ( 345007 bytes)

tcp: >> 12806 ( 3065233 bytes), << 9926 ( 7804356 bytes)

udp: >> 178 ( 36861 bytes), << 268 ( 337007 bytes)

tcp: >> 12818 ( 2571924 bytes), << 20322 ( 22871703 bytes)

udp: >> 117 ( 15139 bytes), << 197 ( 249602 bytes)

tcp: >> 36976 ( 1961680 bytes), << 67286 ( 89038183 bytes)

udp: >> 137 ( 31902 bytes), << 212 ( 263266 bytes)

tcp: >> 45720 ( 2070686 bytes), << 75817 ( 98513374 bytes)

udp: >> 222 ( 127373 bytes), << 299 ( 311413 bytes)

tcp: >> 26926 ( 1399583 bytes), << 47025 ( 60956097 bytes)

udp: >> 393 ( 406216 bytes), << 379 ( 304688 bytes)

tcp: >> 13215 ( 1455906 bytes), << 21092 ( 25215085 bytes)

udp: >> 291 ( 161856 bytes), << 399 ( 405177 bytes)

tcp: >> 20226 ( 2949652 bytes), << 26280 ( 28186188 bytes)

udp: >> 261 ( 36952 bytes), << 508 ( 676881 bytes)

tcp: >> 21972 ( 2289520 bytes), << 37515 ( 46879533 bytes)

udp: >> 233 ( 45490 bytes), << 359 ( 439539 bytes)

tcp: >> 47026 ( 2220402 bytes), << 77938 ( 103728885 bytes)

udp: >> 264 ( 38597 bytes), << 436 ( 586783 bytes)

tcp: >> 43103 ( 2345958 bytes), << 72261 ( 94108371 bytes)

udp: >> 232 ( 31225 bytes), << 428 ( 584656 bytes)

tcp: >> 29224 ( 2107379 bytes), << 49649 ( 63331560 bytes)

udp: >> 236 ( 46963 bytes), << 358 ( 437479 bytes)

tcp: >> 26204 ( 2005099 bytes), << 41700 ( 51544150 bytes)

udp: >> 106 ( 15611 bytes), << 177 ( 214505 bytes)

tcp: >> 40286 ( 2626891 bytes), << 70267 ( 89442335 bytes)

udp: >> 163 ( 26158 bytes), << 257 ( 265932 bytes)

tcp: >> 30656 ( 2564498 bytes), << 51835 ( 65137104 bytes)

udp: >> 215 ( 24568 bytes), << 407 ( 527265 bytes)

tcp: >> 26913 ( 2558011 bytes), << 43358 ( 52944767 bytes)

udp: >> 115 ( 20014 bytes), << 206 ( 248483 bytes)

tcp: >> 30298 ( 1985384 bytes), << 51591 ( 65712173 bytes)

udp: >> 155 ( 21571 bytes), << 240 ( 289473 bytes)

tcp: >> 31825 ( 2282204 bytes), << 53464 ( 66927502 bytes)

udp: >> 192 ( 24138 bytes), << 323 ( 424434 bytes)

tcp: >> 32706 ( 2269805 bytes), << 56947 ( 72701040 bytes)

udp: >> 206 ( 25215 bytes), << 315 ( 400032 bytes)

tcp: >> 33664 ( 2233141 bytes), << 58725 ( 74493932 bytes)

udp: >> 167 ( 34161 bytes), << 275 ( 289945 bytes)

tcp: >> 31354 ( 2157204 bytes), << 51433 ( 64576712 bytes)

udp: >> 113 ( 19268 bytes), << 222 ( 214848 bytes)

tcp: >> 24434 ( 2454805 bytes), << 37659 ( 43297812 bytes)

udp: >> 168 ( 30984 bytes), << 279 ( 329568 bytes)

tcp: >> 32551 ( 2150123 bytes), << 57867 ( 74287093 bytes)

udp: >> 86 ( 19610 bytes), << 121 ( 121342 bytes)

tcp: >> 38549 ( 2342238 bytes), << 66052 ( 159953962 bytes)

udp: >> 91 ( 22319 bytes), << 156 ( 125318 bytes)

tcp: >> 32140 ( 2211151 bytes), << 52303 ( 64253040 bytes)

udp: >> 256 ( 149300 bytes), << 228 ( 173674 bytes)

tcp: >> 10892 ( 1732260 bytes), << 21640 ( 20673862 bytes)

udp: >> 484 ( 80252 bytes), << 934 ( 1195038 bytes)

tcp: >> 3869 ( 546170 bytes), << 6846 ( 7225280 bytes)

udp: >> 502 ( 62931 bytes), << 764 ( 1027185 bytes)

tcp: >> 1534 ( 375392 bytes), << 2326 ( 2735540 bytes)

^C

udp: >> 202 ( 21140 bytes), << 393 ( 539844 bytes)

tcp: >> 1396 ( 574827 bytes), << 2098 ( 1989773 bytes)

truenas%
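To put numbers on the TCP vs UDP split, here is a small Python sketch that parses the udp.d output above and reports the busiest TCP second, with the average bytes per packet in each direction. The regex assumes exactly the printf format used in the script; `parse` and `summarize` are hypothetical helpers.

```python
import re
from typing import NamedTuple

# Matches lines printed by udp.d, e.g.
#   "tcp: >> 47026 ( 2220402 bytes), << 77938 ( 103728885 bytes)"
LINE = re.compile(
    r"^(udp|tcp): >>\s*(\d+) \(\s*(\d+) bytes\), <<\s*(\d+) \(\s*(\d+) bytes\)$")


class Sample(NamedTuple):
    proto: str
    pkts_sent: int
    bytes_sent: int
    pkts_rcvd: int
    bytes_rcvd: int


def parse(text: str) -> list[Sample]:
    """Turn udp.d output into samples, skipping non-matching lines."""
    samples = []
    for line in text.splitlines():
        m = LINE.match(line.strip())
        if m:
            proto, *nums = m.groups()
            samples.append(Sample(proto, *map(int, nums)))
    return samples


def summarize(samples: list[Sample], proto: str = "tcp") -> dict:
    """Stats for the second with the most received packets of `proto`."""
    rows = [s for s in samples if s.proto == proto]
    peak = max(rows, key=lambda s: s.pkts_rcvd)
    return {
        "peak_pkts_rcvd": peak.pkts_rcvd,
        "peak_pkts_sent": peak.pkts_sent,
        # ip_plength includes the TCP header, so tiny averages mean the
        # packets carry little or no data.
        "avg_bytes_rcvd": peak.bytes_rcvd / max(peak.pkts_rcvd, 1),
        "avg_bytes_sent": peak.bytes_sent / max(peak.pkts_sent, 1),
    }
```

On the capture above, the busiest second has 77938 TCP packets received averaging about 1331 bytes each, against 47026 sent averaging about 47 bytes. Outbound packets that small are probably mostly acknowledgements for the inbound data rather than requests.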

I’ll check a few more things and then follow up on this topic: Question about UDP - #2 by thepaul - FAQ - Storj Community Forum (official). If they are opening TCP connections no matter what, that would explain it. Perhaps there are some knobs to disable TCP altogether.

Are you and I the only people who are using Storj with Duplicacy?? Seems like this would have come up before now.

At any rate, you are well beyond my comfort and competence at this point, so I’m just going to watch from here unless you need me to test something.

I’ve done some tests before, both with the standard connection and using the S3 gateway. I had several “fail to upload/download” problems, mainly with the standard connection. I didn’t have time to look into the problem, so I stayed with my provider (Wasabi) and set up copies (with Duplicacy) to Storj.

Yes, I know that Wasabi is a little more expensive than other options (B2, for example, or even Glacier), but the difference is small in my case (around 2TB) and doesn’t justify the time I would spend fine-tuning things to avoid download fees, etc.

They’ve provided two suggestions in Question about UDP - #7 by elek - FAQ - Storj Community Forum (official):

You can try to set environment variable STORJ_QUIC_ROLLOUT_PERCENT=100, and test it. (but use it your own risk ;-), worst case it will be slower or failed download)

to force QUIC for everything, but this had no effect; I’m following up.

One option is increasing the connection pool with this function: uplink/transport.go at 72bcffbeac33146027b0f70fc6fcd703fdf8bfb0 · storj/uplink · GitHub

The default size is 100, which is very low. you can even use 5000-10000, which will increase the performance but also increase the memory usage…

This will take me some time to try, as it requires source modification and rebuilding.
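As a rough illustration of why the pool size matters, here is a toy LRU connection cache in Python. This is not uplink's pool implementation; the LRU eviction policy, the 2000-node population and the uniform random access pattern are all assumptions chosen for illustration. Each cache miss stands for a fresh TCP (and TLS) dial, so when the threads touch many more distinct storage nodes than the pool can hold, almost every request opens a new connection.

```python
import random
from collections import OrderedDict


class LRUPool:
    """Toy connection pool keyed by node ID; evicts the least recently used entry."""

    def __init__(self, capacity: int):
        self.capacity = capacity
        self.conns = OrderedDict()  # node ID -> placeholder connection
        self.dials = 0              # how many new connections had to be opened

    def get(self, node: int) -> object:
        if node in self.conns:
            self.conns.move_to_end(node)  # mark as most recently used
            return self.conns[node]
        self.dials += 1
        conn = object()  # stand-in for a real dialed connection
        self.conns[node] = conn
        if len(self.conns) > self.capacity:
            self.conns.popitem(last=False)  # evict the least recently used
        return conn


def simulate(capacity: int, nodes: int = 2000, requests: int = 50_000,
             seed: int = 1) -> int:
    """Return the number of dials for uniformly random node accesses."""
    rng = random.Random(seed)
    pool = LRUPool(capacity)
    for _ in range(requests):
        pool.get(rng.randrange(nodes))
    return pool.dials
```

With these made-up parameters, `simulate(100)` dials on roughly 95% of requests, while `simulate(5000)` dials each node only once. The price is that up to 5000 idle connections stay open, which is the memory trade-off mentioned in the suggestion.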

The first suggestion turned out to be a non-starter:

Trying the second option will involve rebuilding Duplicacy with the additional pool configuration calls, and it will have to wait until Monday; there is only so much I can do in Shelly on an iPhone.

As my month-long check continues, I’m getting some new errors after it’s been running for several days.

I realized I had some other schedules that still ran in the interim (backups and a prune), so that might explain part of it. But I suspect I may finally be running into these larger issues, even on the super slow 3MB/s attempt.

2023-06-09 10:00:23.505 WARN DOWNLOAD_CHUNK Chunk af7c61a0374bde473aeea1c8500647e2764ad2e2de638a80454b839c73a6a1e4 can't be found

2023-06-09 10:00:23.505 INFO VERIFY_PROGRESS Verified chunk af7c61a0374bde473aeea1c8500647e2764ad2e2de638a80454b839c73a6a1e4 (196169/1321690), 3.04MB/s 21 days 15:42:40 14.8%

2023-06-09 10:00:23.505 INFO CHUNK_BUFFER Discarding a free chunk due to a full pool

2023-06-09 10:00:23.667 WARN DOWNLOAD_CHUNK Chunk 492fb143502c13619e7c08ccd3b7b8cdd49438422122c70f825b366995f5db38 can't be found

2023-06-09 10:00:23.668 INFO VERIFY_PROGRESS Verified chunk 492fb143502c13619e7c08ccd3b7b8cdd49438422122c70f825b366995f5db38 (196170/1321690), 3.04MB/s 21 days 15:42:29 14.8%

2023-06-09 10:00:23.668 INFO CHUNK_BUFFER Discarding a free chunk due to a full pool

You can add the `-fossils` flag to check, so that if prune has fossilized a chunk, check will still find it. It was suggested that this be made the default, but I don’t think that was done.

If the next prune actually deletes the chunk, you may still see that error. I’m not sure whether there is protection against a check that runs for longer than 7 days.

Also, I’m not sure why this message (duplicacy/duplicacy_config.go at cdf8f5a8575fa50e22cad126a00140e38618b9fc · gilbertchen/duplicacy · GitHub) is logged at INFO level rather than DEBUG; it seems like an implementation detail.

So if this works, is it something we can add in a PR or will I have to build myself as a one-off exercise?

I have posted a small update here: Question about UDP - #10 by arrogantrabbit - FAQ - Storj Community Forum (official)

TL;DR: increasing the pool size from 100 to 5000 helped performance somewhat, but Duplicacy still failed with:

Verified chunk 6af6b75b06781044d9643426141c180bba455cad422f1a87baba163a424e386a (12673/13954), 52.58MB/s 00:01:59 90.8%

Failed to download the chunk feffffab4937b5654f82ba7ab6181f0d4de2a44746f3919e03f1f5521f9fcf6a: uplink: metaclient: context canceled

I did seem to see fewer lost packets, and the modem did not die, so I guess we’re moving in the right direction. I’ll also try 20000 connections (I anticipate quite a bill from Storj at the end of the month for all that egress), and it would be interesting to see whether Storj has any suggestions.

Unfortunately, it looks like that thread has stalled on the Storj forum. I still don’t have a full check of the chunks, but in the meantime I’ve successfully resumed backups to Storj without any issues, so maybe I’ll just proceed as if it’s okay…

It’s not just check; I’d expect restore to fail the same way.

Perhaps increasing the connection pool size is not the right thing to do here.

I would try enabling TCP fastopen, as they recommend in this thread (Please enable TCP fastopen on your storage nodes - STORJLINGS - Storj Community Forum (official)), to reduce connection latency. That in turn would improve throughput and allow reducing the number of threads, thereby reducing the load on the network equipment.

I can’t test it because it’s not yet implemented on uplink for FreeBSD: Please enable TCP fastopen on your storage nodes - #8 by jtolio - STORJLINGS - Storj Community Forum (official)

And there is always a workaround of using S3 gateway and moving the problem to the cloud.
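The reasoning behind the fastopen suggestion (lower latency gives the same throughput with fewer threads) is Little's law: concurrency N = throughput X × time per request R. Here is a worked example in Python using the numbers from the failing local check earlier in the thread (67,721M in 13,955 chunks at about 43.45MB/s with 40 threads). The 20% reduction in per-chunk time is purely hypothetical, not a measured fastopen gain.

```python
def seconds_per_chunk(threads: int, rate_mb_s: float, avg_chunk_mb: float) -> float:
    """Little's law solved for R: R = N / X, with X in chunks per second."""
    return threads / (rate_mb_s / avg_chunk_mb)


def threads_needed(rate_mb_s: float, avg_chunk_mb: float, secs_per_chunk: float) -> float:
    """Little's law solved for N: N = X * R."""
    return (rate_mb_s / avg_chunk_mb) * secs_per_chunk


# Figures from the failing local check: 67,721M in 13,955 chunks,
# about 43.45MB/s with -threads 40.
avg_chunk = 67_721 / 13_955                  # about 4.85 MB per chunk
r = seconds_per_chunk(40, 43.45, avg_chunk)  # about 4.47 s per chunk in flight

# Hypothetical: if lower connection latency cut per-chunk time by 20%,
# the same throughput would need proportionally fewer concurrent downloads.
n = threads_needed(43.45, avg_chunk, 0.8 * r)
print(f"R = {r:.2f} s per chunk, threads for same throughput = {n:.0f}")
# prints: R = 4.47 s per chunk, threads for same throughput = 32
```

The thread count scales linearly with per-chunk time at a fixed throughput, so any latency saving translates directly into fewer simultaneous connections hitting the modem.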

Unfortunately my tests with S3 were slow too; it stabilized around 4.5 MB/s

I still don’t have a fully completed check -chunks but I have been successfully completing backups for weeks now. Everything seems okay, but I’ll never really know for sure…

I’ve enabled TCP fastopen with --sysctl net.ipv4.tcp_fastopen=3 in the docker run command. I’ll try running a check again and see if anything is different /shrug

Update: After running for several hours the speeds are mostly the same; it doesn’t seem to help this situation.
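For reference, net.ipv4.tcp_fastopen is a bitmask on Linux: bit 0x1 enables fastopen for outgoing (client) connections and 0x2 enables it for listening (server) sockets, so 3 turns on both. Duplicacy's connections to storage nodes are outgoing, so the client bit is the relevant one. A minimal sketch for reading and decoding the setting inside the container (Linux-only; the function names are hypothetical):

```python
from pathlib import Path

FASTOPEN_CLIENT = 0x1  # send data in the SYN on outgoing connections
FASTOPEN_SERVER = 0x2  # accept data in the SYN on listening sockets


def decode_fastopen(value: int) -> dict:
    """Split the sysctl value into its client and server bits."""
    return {
        "client": bool(value & FASTOPEN_CLIENT),
        "server": bool(value & FASTOPEN_SERVER),
    }


def read_fastopen(path: str = "/proc/sys/net/ipv4/tcp_fastopen") -> dict:
    # procfs exposes the sysctl as a decimal integer.
    return decode_fastopen(int(Path(path).read_text().strip()))
```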

There were similar observations on the Storj forums that fastopen does not dramatically improve things.

If you get the same poor performance with both S3 and uplink, maybe the chunk size is too small, making the connection overhead huge.

Try running the Duplicacy benchmark with a larger chunk size, perhaps variable between 48 and 64MB; I think benchmark allows specifying the chunk size. I’ll try to run it too tonight.

I’ve tried benchmark on the Storj backend with the default chunk size (4M) and a large chunk size (64M), at 1, 4, and 64 threads.

(64 threads knocked down my modem)

testing chunk size = 4 MB, threads = 1

Uploaded 256.00M bytes in 486.45s: 539K/s

Downloaded 256.00M bytes in 117.05s: 2.19M/s

testing chunk size = 64 MB, threads = 1

Uploaded 4096.00M bytes in 5808.65s: 722K/s

Downloaded 4096.00M bytes in 525.05s: 7.80M/s

testing chunk size = 4 MB, threads = 4

Uploaded 256.00M bytes in 376.93s: 695K/s

Downloaded 256.00M bytes in 28.99s: 8.83M/s

testing chunk size = 64 MB, threads = 4

Uploaded 4096.00M bytes in 5506.00s: 762K/s

Downloaded 4096.00M bytes in 123.00s: 33.30M/s

testing chunk size = 4 MB, threads = 1

Uploaded 256.00M bytes in 239.21s: 1.07M/s

Downloaded 256.00M bytes in 65.80s: 3.89M/s

testing chunk size = 4 MB, threads = 4

Uploaded 256.00M bytes in 116.54s: 2.20M/s

Downloaded 256.00M bytes in 16.69s: 15.34M/s

testing chunk size = 64 MB, threads = 1

Uploaded 4096.00M bytes in 1951.04s: 2.10M/s

Downloaded 4096.00M bytes in 297.24s: 13.78M/s

testing chunk size = 64 MB, threads = 4

Uploaded 4096.00M bytes in 1778.49s: 2.30M/s

Downloaded 4096.00M bytes in 83.64s: 48.97M/s
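One way to read the first set of results above is a simple model in which each chunk costs a fixed overhead plus its size divided by a per-stream transfer rate. This model is an assumption, not something Duplicacy or Storj document. Fitting it to the two single-thread download results (64 chunks each) gives the overhead and rate, which can then be checked against the 4-thread runs:

```python
def fit_overhead(size_a: float, secs_a: float, size_b: float, secs_b: float):
    """Solve t = overhead + size / rate from two (chunk MB, seconds per chunk) points."""
    rate = (size_b - size_a) / (secs_b - secs_a)  # MB/s per stream
    overhead = secs_a - size_a / rate             # seconds per chunk
    return overhead, rate


def predicted_throughput(size: float, threads: int, overhead: float, rate: float) -> float:
    """Aggregate MB/s if `threads` chunks are always in flight (ignores contention)."""
    return threads * size / (overhead + size / rate)


# Single-thread downloads from the first run: 256M of 4 MB chunks in 117.05 s,
# 4096M of 64 MB chunks in 525.05 s, i.e. 64 chunks in each case.
overhead, rate = fit_overhead(4, 117.05 / 64, 64, 525.05 / 64)
print(f"overhead = {overhead:.2f} s/chunk, per-stream rate = {rate:.2f} MB/s")
for size in (4, 64):
    print(size, "MB x 4 threads ->",
          f"{predicted_throughput(size, 4, overhead, rate):.1f} MB/s")
```

This prints an overhead of about 1.40 s per chunk and a per-stream rate of about 9.41 MB/s, and predicts about 8.7 MB/s and 31.2 MB/s for 4 threads, against the measured 8.83M/s and 33.30M/s. If the model holds, a 4 MB chunk spends about three quarters of its time on fixed overhead, which supports trying larger chunks.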