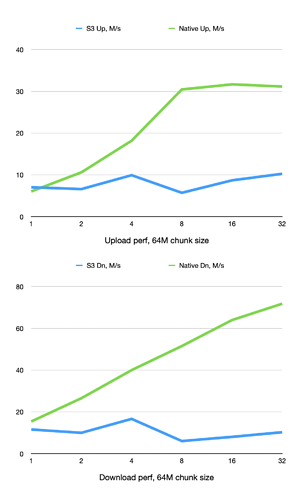

Benchmark on a symmetric gigabit fiber connection, transferring 4096 MB per run with chunk-size=64:

Conclusions:

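- S3 is much slower for both upload and download at every thread count except single-threaded upload (7.03 vs 6.03 MB/s), and its throughput does not scale with the thread count.

- Native integration upload plateaued at about 31 MB/s from 8 threads onward.

- Native download was still trending upward at 32 threads (71.77 MB/s), so increasing the thread count further would likely increase performance further.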

| Threads | S3 Up, MB/s | S3 Dn, MB/s | Native Up, MB/s | Native Dn, MB/s |
|---|---|---|---|---|
| 1 | 7.03 | 11.56 | 6.03 | 15.39 |
| 2 | 6.64 | 9.98 | 10.61 | 26.54 |
| 4 | 9.92 | 16.64 | 18.18 | 39.98 |
| 8 | 5.71 | 6.03 | 30.44 | 51.50 |
| 16 | 8.69 | 8.04 | 31.66 | 63.94 |
| 32 | 10.26 | 10.26 | 31.14 | 71.77 |
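To put the native numbers in context, here is a rough sketch that computes the speedup over the single-threaded run and the share of the link used at peak. The values are copied from the table; the helper names are made up for illustration, and the link figure assumes a nominal 1000 Mbit/s with 1 MB/s = 8 Mbit/s (if the tool reports MiB, the utilization is slightly higher).

```python
# Throughput figures copied from the table above (MB/s).
THREADS = [1, 2, 4, 8, 16, 32]
NATIVE_UP = [6.03, 10.61, 18.18, 30.44, 31.66, 31.14]
NATIVE_DN = [15.39, 26.54, 39.98, 51.50, 63.94, 71.77]

# Assumption: nominal gigabit link, decimal units (1 MB/s = 8 Mbit/s).
LINK_MBIT = 1000


def speedup(series):
    """Throughput of each run relative to the single-threaded run."""
    base = series[0]
    return [round(value / base, 2) for value in series]


def link_utilization(mb_per_s, link_mbit=LINK_MBIT):
    """Fraction of the nominal link rate used by a given throughput."""
    return mb_per_s * 8 / link_mbit


for threads, up, dn in zip(THREADS, speedup(NATIVE_UP), speedup(NATIVE_DN)):
    print(f"{threads:>2} threads: upload x{up}, download x{dn}")

print(f"peak native download uses {link_utilization(max(NATIVE_DN)):.0%} of the link")
```

Under these assumptions the best download run uses a bit over half of the nominal link rate, which is consistent with the download trend not having flattened yet.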

Raw log:

Tue Mar 5 13:02:58 PST 2024: storage: test-s3, chunk-size=64, threads=1

Uploaded 4096.00M bytes in 582.77s: 7.03M/s

Downloaded 4096.00M bytes in 354.33s: 11.56M/s

Tue Mar 5 13:20:14 PST 2024: storage: test-s3, chunk-size=64, threads=2

Uploaded 4096.00M bytes in 616.42s: 6.64M/s

Downloaded 4096.00M bytes in 410.42s: 9.98M/s

Tue Mar 5 13:38:50 PST 2024: storage: test-s3, chunk-size=64, threads=4

Uploaded 4096.00M bytes in 413.01s: 9.92M/s

Downloaded 4096.00M bytes in 246.21s: 16.64M/s

Tue Mar 5 13:51:15 PST 2024: storage: test-s3, chunk-size=64, threads=8

Uploaded 4096.00M bytes in 716.86s: 5.71M/s

Downloaded 4096.00M bytes in 679.03s: 6.03M/s

Tue Mar 5 14:15:57 PST 2024: storage: test-s3, chunk-size=64, threads=16

Uploaded 4096.00M bytes in 471.42s: 8.69M/s

Downloaded 4096.00M bytes in 509.50s: 8.04M/s

Tue Mar 5 14:33:43 PST 2024: storage: test-s3, chunk-size=64, threads=32

Uploaded 4096.00M bytes in 399.17s: 10.26M/s

Tue Mar 5 14:52:05 PST 2024: storage: test-native, chunk-size=64, threads=1

Uploaded 4096.00M bytes in 679.53s: 6.03M/s

Downloaded 4096.00M bytes in 266.13s: 15.39M/s

Tue Mar 5 15:09:26 PST 2024: storage: test-native, chunk-size=64, threads=2

Uploaded 4096.00M bytes in 385.91s: 10.61M/s

Downloaded 4096.00M bytes in 154.32s: 26.54M/s

Tue Mar 5 15:19:54 PST 2024: storage: test-native, chunk-size=64, threads=4

Uploaded 4096.00M bytes in 225.26s: 18.18M/s

Downloaded 4096.00M bytes in 102.45s: 39.98M/s

Tue Mar 5 15:26:49 PST 2024: storage: test-native, chunk-size=64, threads=8

Uploaded 4096.00M bytes in 134.57s: 30.44M/s

Downloaded 4096.00M bytes in 79.53s: 51.50M/s

Tue Mar 5 15:31:49 PST 2024: storage: test-native, chunk-size=64, threads=16

Uploaded 4096.00M bytes in 129.38s: 31.66M/s

Downloaded 4096.00M bytes in 64.06s: 63.94M/s

Tue Mar 5 15:36:28 PST 2024: storage: test-native, chunk-size=64, threads=32

Uploaded 4096.00M bytes in 131.56s: 31.14M/s

Downloaded 4096.00M bytes in 57.07s: 71.77M/s
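For anyone who wants to rebuild the table from a log like the one above, here is a minimal sketch of a parser. It assumes the log keeps exactly the line format shown here; the regular expressions and the `parse_log` name are illustrative and not part of any tool. Throughput is recomputed from size and elapsed time instead of being read from the printed rate.

```python
import re
from collections import defaultdict

# Patterns derived from the log lines above; they are assumptions about the format.
HEADER = re.compile(
    r"storage: (?P<storage>[\w-]+), chunk-size=(?P<chunk>\d+), threads=(?P<threads>\d+)"
)
RESULT = re.compile(
    r"(?P<op>Uploaded|Downloaded) (?P<size>[\d.]+)M bytes in (?P<secs>[\d.]+)s"
)


def parse_log(text):
    """Return {(storage, threads): {"Uploaded": rate, "Downloaded": rate}}."""
    results = defaultdict(dict)
    current = None
    for line in text.splitlines():
        header = HEADER.search(line)
        if header:
            # A header line starts a new run; later result lines belong to it.
            current = (header["storage"], int(header["threads"]))
            continue
        result = RESULT.search(line)
        if result and current is not None:
            rate = float(result["size"]) / float(result["secs"])
            results[current][result["op"]] = round(rate, 2)
    return dict(results)


sample = """\
Tue Mar 5 13:02:58 PST 2024: storage: test-s3, chunk-size=64, threads=1
Uploaded 4096.00M bytes in 582.77s: 7.03M/s
Downloaded 4096.00M bytes in 354.33s: 11.56M/s
"""

print(parse_log(sample))
```

A run that is missing an upload or download line simply has no entry for that operation, so gaps in the log show up as missing keys rather than as zeros.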