The total chunks of what?

Interesting question … I always thought it was the total number of chunks in the storage being copied from.

I looked into a local repository that is regularly copied to Wasabi.

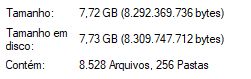

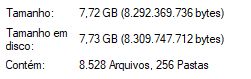

Windows reports 8528 files in the “chunks” folder.

The check command also reports 8528 chunks (although there should be a total in the “new” column too; after all, it is the total of this column):

pastebin version, better display of columns

Listing all chunks

All chunks referenced by snapshot [repository] at revision 1 exist

All chunks referenced by snapshot [repository] at revision 2 exist

All chunks referenced by snapshot [repository] at revision 3 exist

All chunks referenced by snapshot [repository] at revision 4 exist

All chunks referenced by snapshot [repository] at revision 5 exist

All chunks referenced by snapshot [repository] at revision 6 exist

All chunks referenced by snapshot [repository] at revision 7 exist

All chunks referenced by snapshot [repository] at revision 8 exist

All chunks referenced by snapshot [repository] at revision 9 exist

All chunks referenced by snapshot [repository] at revision 10 exist

All chunks referenced by snapshot [repository] at revision 11 exist

All chunks referenced by snapshot [repository] at revision 12 exist

All chunks referenced by snapshot [repository] at revision 13 exist

All chunks referenced by snapshot [repository] at revision 14 exist

All chunks referenced by snapshot [repository] at revision 15 exist

All chunks referenced by snapshot [repository] at revision 16 exist

All chunks referenced by snapshot [repository] at revision 17 exist

| snap | rev | | files | bytes | chunks | bytes | uniq | bytes | new | bytes |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| [repository] | 1 | @ 2018-05-31 00:23 -hash | 2456 | 9,185M | 7349 | 6,961M | 4 | 712K | 7349 | 6,961M |
| [repository] | 2 | @ 2018-05-31 00:41 | 2407 | 9,190M | 7359 | 6,961M | 4 | 714K | 14 | 1,048K |
| [repository] | 3 | @ 2018-05-31 00:48 | 2407 | 9,190M | 7369 | 6,967M | 13 | 6,045K | 15 | 6,796K |
| [repository] | 4 | @ 2018-06-01 12:21 | 2406 | 9,196M | 7395 | 6,983M | 0 | 0 | 41 | 23,408K |
| [repository] | 5 | @ 2018-06-03 20:44 | 2406 | 9,196M | 7395 | 6,983M | 0 | 0 | 0 | 0 |
| [repository] | 6 | @ 2018-06-04 20:01 | 2407 | 9,233M | 7412 | 7,007M | 25 | 11,209K | 53 | 44,188K |
| [repository] | 7 | @ 2018-06-05 20:02 | 2413 | 9,312M | 7466 | 7,065M | 23 | 8,487K | 81 | 70,258K |
| [repository] | 8 | @ 2018-06-06 20:20 | 2422 | 9,346M | 7490 | 7,092M | 26 | 16,851K | 51 | 37,699K |
| [repository] | 9 | @ 2018-06-07 20:12 | 2427 | 9,307M | 7482 | 7,085M | 17 | 5,797K | 366 | 379,181K |
| [repository] | 10 | @ 2018-06-08 12:26 | 2430 | 9,388M | 7527 | 7,127M | 34 | 25,652K | 64 | 51,327K |
| [repository] | 11 | @ 2018-06-09 22:13 | 2427 | 9,325M | 7531 | 7,113M | 23 | 8,695K | 146 | 116,753K |
| [repository] | 12 | @ 2018-06-10 20:03 | 2436 | 9,321M | 7547 | 7,124M | 33 | 24,552K | 63 | 41,660K |
| [repository] | 13 | @ 2018-06-10 22:46 | 2432 | 9,224M | 7507 | 7,079M | 15 | 5,313K | 47 | 36,935K |
| [repository] | 14 | @ 2018-06-11 20:12 | 2432 | 9,234M | 7521 | 7,089M | 33 | 16,252K | 42 | 22,764K |
| [repository] | 15 | @ 2018-06-12 20:05 | 2438 | 9,239M | 7516 | 7,086M | 28 | 8,671K | 73 | 51,075K |
| [repository] | 16 | @ 2018-06-13 20:02 | 2433 | 9,244M | 7515 | 7,098M | 31 | 20,682K | 34 | 24,953K |
| [repository] | 17 | @ 2018-06-17 20:32 | 2431 | 9,237M | 7500 | 7,089M | 89 | 61,525K | 89 | 61,525K |
| [repository] | all | | | | 8528 | 7,908M | 8528 | 7,908M | | |
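For what it's worth, the “new” column can be summed by hand, and it seems to come to the same 8528 shown in the “all” row. A quick Python check, with the values copied from the check output for revisions 1 to 17 (the variable names are only illustrative):

```python
# "new" chunk counts for revisions 1..17, copied from the check output
new_per_revision = [7349, 14, 15, 41, 0, 53, 81, 51, 366,
                    64, 146, 63, 47, 42, 73, 34, 89]

# Sum of the column, to compare with the "all" row (8528 chunks)
total_new = sum(new_per_revision)
print(total_new)  # 8528
```

If that reading of the column is right, "new" counts the chunks that did not exist in any earlier revision, which would explain why its total matches the number of files in the folder.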

But the copy command reports 7717:

2018-06-18 09:40:30.248 INFO SNAPSHOT_EXIST Snapshot [repository] at revision 1 already exists at the destination storage

2018-06-18 09:40:30.424 INFO SNAPSHOT_EXIST Snapshot [repository] at revision 2 already exists at the destination storage

2018-06-18 09:40:30.607 INFO SNAPSHOT_EXIST Snapshot [repository] at revision 3 already exists at the destination storage

2018-06-18 09:40:30.777 INFO SNAPSHOT_EXIST Snapshot [repository] at revision 4 already exists at the destination storage

2018-06-18 09:40:30.952 INFO SNAPSHOT_EXIST Snapshot [repository] at revision 5 already exists at the destination storage

2018-06-18 09:40:31.123 INFO SNAPSHOT_EXIST Snapshot [repository] at revision 6 already exists at the destination storage

2018-06-18 09:40:31.302 INFO SNAPSHOT_EXIST Snapshot [repository] at revision 7 already exists at the destination storage

2018-06-18 09:40:31.474 INFO SNAPSHOT_EXIST Snapshot [repository] at revision 8 already exists at the destination storage

2018-06-18 09:40:31.663 INFO SNAPSHOT_EXIST Snapshot [repository] at revision 9 already exists at the destination storage

2018-06-18 09:40:31.839 INFO SNAPSHOT_EXIST Snapshot [repository] at revision 10 already exists at the destination storage

2018-06-18 09:40:32.019 INFO SNAPSHOT_EXIST Snapshot [repository] at revision 11 already exists at the destination storage

2018-06-18 09:40:32.192 INFO SNAPSHOT_EXIST Snapshot [repository] at revision 12 already exists at the destination storage

2018-06-18 09:40:32.364 INFO SNAPSHOT_EXIST Snapshot [repository] at revision 13 already exists at the destination storage

2018-06-18 09:40:33.081 TRACE SNAPSHOT_COPY Copying snapshot [repository] at revision 14

2018-06-18 09:40:33.121 TRACE SNAPSHOT_COPY Copying snapshot [repository] at revision 15

2018-06-18 09:40:33.138 TRACE SNAPSHOT_COPY Copying snapshot [repository] at revision 16

2018-06-18 09:40:33.153 TRACE SNAPSHOT_COPY Copying snapshot [repository] at revision 17

...

2018-06-18 09:40:46.661 INFO SNAPSHOT_COPY Chunk fca6...84ef (118/7717) skipped at the destination

2018-06-18 09:40:50.837 INFO SNAPSHOT_COPY Chunk 9595...4386 (1/7717) copied to the destination

2018-06-18 09:40:50.837 INFO SNAPSHOT_COPY Chunk 642c...293f (120/7717) skipped at the destination

2018-06-18 09:40:50.837 INFO SNAPSHOT_COPY Chunk b113...0890 (121/7717) skipped at the destination
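One possible reading, which is only a guess from the numbers and not confirmed behaviour: 7717 might be the number of distinct chunks referenced by the revisions actually being copied (14 to 17), not the total in the storage. A small Python sketch of that arithmetic, using values from the check output and assuming that the chunks counted as “new” in revisions 15 to 17 are the only ones those revisions add on top of revision 14:

```python
# Chunk count of revision 14 and the "new" counts of revisions 15-17,
# copied from the check output
chunks_rev14 = 7521
new_after_14 = {15: 73, 16: 34, 17: 89}

# Assumption (not confirmed): no chunk from revisions 1-13 that is absent
# from revision 14 reappears in 15-17, so the union is a simple sum.
estimate = chunks_rev14 + sum(new_after_14.values())
print(estimate)  # 7717
```

The match with the copy log is exact, but it is only consistent with this explanation; it does not prove it.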

Now I’m in doubt too … @gchen, could you clarify?