Deleting snapshots from Google Drive fails silently:

Log:

2021-10-01 19:47:28.726 INFO SNAPSHOT_DELETE Deleting snapshot obsidian-users at revision 1132

2021-10-01 19:47:29.411 INFO SNAPSHOT_DELETE Deleting snapshot obsidian-users at revision 1133

2021-10-01 19:47:30.103 INFO SNAPSHOT_DELETE Deleting snapshot obsidian-users at revision 1134

2021-10-01 19:47:30.762 INFO SNAPSHOT_DELETE Deleting snapshot obsidian-users at revision 1135

2021-10-01 19:47:31.439 INFO SNAPSHOT_DELETE Deleting snapshot obsidian-users at revision 1137

2021-10-01 19:47:32.119 INFO SNAPSHOT_DELETE Deleting snapshot obsidian-users at revision 1138

2021-10-01 19:47:32.781 INFO SNAPSHOT_DELETE Deleting snapshot obsidian-users at revision 1139

2021-10-01 19:47:33.433 INFO SNAPSHOT_DELETE Deleting snapshot obsidian-users at revision 1140

2021-10-01 19:47:34.107 INFO SNAPSHOT_DELETE Deleting snapshot obsidian-users at revision 1141

2021-10-01 19:47:34.860 INFO SNAPSHOT_DELETE Deleting snapshot obsidian-users at revision 1142

2021-10-01 19:47:35.532 INFO SNAPSHOT_DELETE Deleting snapshot obsidian-users at revision 1145

2021-10-01 19:47:36.236 INFO SNAPSHOT_DELETE Deleting snapshot obsidian-users at revision 1147

2021-10-01 19:47:36.920 INFO SNAPSHOT_DELETE Deleting snapshot obsidian-users at revision 1149

2021-10-01 19:47:37.615 INFO SNAPSHOT_DELETE Deleting snapshot obsidian-users at revision 1152

2021-10-01 19:47:40.133 WARN DOWNLOAD_RESURRECT Fossil 228836a3c5e5310d155b48ae5915e5c6bb0771180d6d4c5ff10b0f95c7032ccd has been resurrected

2021-10-01 19:47:42.424 INFO SNAPSHOT_DELETE Deleting snapshot obsidian-users at revision 1153

2021-10-01 19:47:45.444 WARN DOWNLOAD_RESURRECT Fossil 7f45c29b6cf3d79737a92a6c2b21f6b11d67176b98d7c2cb120d851011020a84 has been resurrected

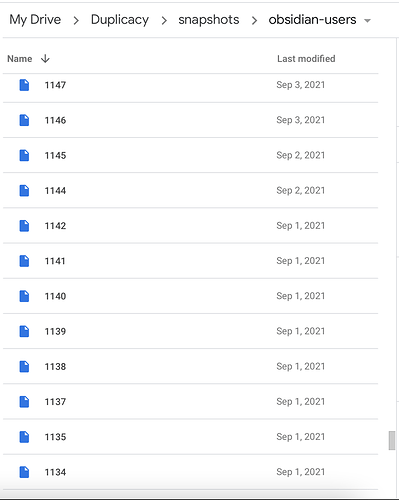

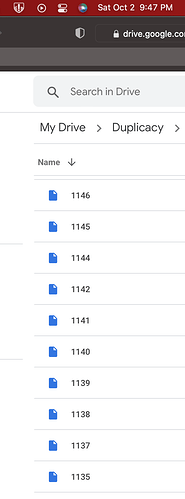

Google Drive view:

Google Drive is configured via gcd_start. The storage is located in My Drive in a Google Workspace account.

Earlier, deleting snapshots from a shared Google Drive would fail, but now it seems to fail even when connected directly to My Drive.

Impact: after a prune, the check command will fail because the ghost snapshot file is left in the datastore.
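To pin down which revisions are ghosts, one option is to compare the revisions the log reports as deleted with the revision files still visible in Google Drive. The following is a minimal sketch, assuming the log format shown above. The function names and the `still_in_drive` list are hypothetical and only for illustration; the list would need to be filled in by hand from the Drive folder view.

```python
import re

# Matches SNAPSHOT_DELETE lines in the format shown in the log above.
DELETE_RE = re.compile(
    r"INFO SNAPSHOT_DELETE Deleting snapshot (\S+) at revision (\d+)"
)


def deleted_revisions(log_text, snapshot_id):
    """Return the set of revisions the log reports as deleted for snapshot_id."""
    revisions = set()
    for line in log_text.splitlines():
        match = DELETE_RE.search(line)
        if match and match.group(1) == snapshot_id:
            revisions.add(int(match.group(2)))
    return revisions


def ghost_revisions(log_text, snapshot_id, remaining):
    """Return revisions reported as deleted that are still present in storage."""
    return sorted(deleted_revisions(log_text, snapshot_id) & set(remaining))


# Three lines copied from the log above.
sample_log = """\
2021-10-01 19:47:37.615 INFO SNAPSHOT_DELETE Deleting snapshot obsidian-users at revision 1152
2021-10-01 19:47:40.133 WARN DOWNLOAD_RESURRECT Fossil 228836a3c5e5310d155b48ae5915e5c6bb0771180d6d4c5ff10b0f95c7032ccd has been resurrected
2021-10-01 19:47:42.424 INFO SNAPSHOT_DELETE Deleting snapshot obsidian-users at revision 1153
"""

# Hypothetical listing of revision files still visible in Google Drive;
# replace with the revisions actually seen in the snapshot folder.
still_in_drive = [1152, 1153, 1154]

print(ghost_revisions(sample_log, "obsidian-users", still_in_drive))
```

Any revision this prints was logged as deleted but is still in the datastore, which is the condition that makes the subsequent check fail.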