Hey Sapsus,

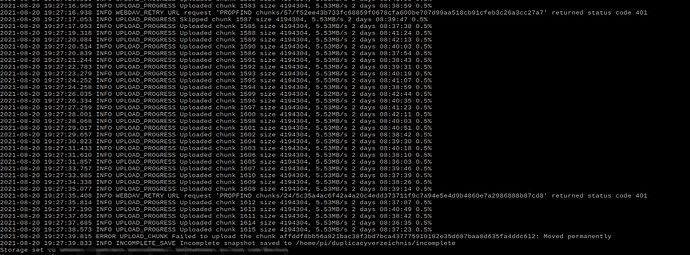

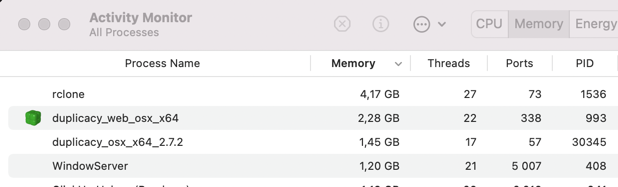

I’ve got the following issue: rclone is serving over SFTP, but because the upload is asynchronous, it seems to hold the chunks in its cache. Once the cache fills up to a level I currently don’t understand, it stops talking to Duplicacy (or to a manual SFTP connection). Since I’m running rclone in verbose mode, I can see that it is still uploading the chunks to pCloud, but no new connection is possible. I think it first wants to empty the cache down to a certain level before accepting new file transfers…

Do you have any idea how I can handle this? Can I enlarge the rclone cache or make it unlimited?

Thanks a lot again