Thank you @Droolio!

Combining -exclusive and -keep in the same command is NOT recommended my dude. See.

I’m sorry, I still don’t understand why, even after reading your link and the search you posted. To me it looks like they can be used together without any issue, as long as no other backups occur at the same time. (too much bold? maybe too much bold)

My statement is based on this:

- keep <n:m> - keep 1 snapshot every n days for snapshots older than m days
  - I use the same config in my day-to-day backup
- exhaustive - remove all unreferenced chunks
  - yes please, I want fossils deleted
- exclusive - assume exclusive access to the storage (disable two-step fossil collection)
  - yes: I don’t want fossil collection, but I hope fossil deletion still happens, as that’s part of the process, right? RIGHT?
- The last revision can only be deleted in -exclusive mode
  - that’s okay. I don’t care if I lose a revision at either end of the timeline (later edit) in this instance, as what I care about now is cleaning up my fossils
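To make the one-line -keep description above concrete, here is a minimal Python sketch of how that rule reads when several -keep options are combined, using the values from the automated prune mentioned later in this post. It only illustrates the documented sentence and is not Duplicacy's actual pruning code. The function name `apply_keep`, the age-in-days model, and the strict "older than m" comparison are all assumptions made for the example.

```python
def apply_keep(ages_days, policies):
    """Return the ages (in days) of the snapshots that would survive.

    ages_days: iterable of snapshot ages in days (hypothetical input model).
    policies:  list of (n, m) pairs, one per "-keep n:m" option.
    """
    # Check the rule with the largest m first, so each snapshot is
    # handled by the oldest tier it falls into.
    ordered = sorted(policies, key=lambda p: p[1], reverse=True)
    kept = []
    last_kept_age = None
    for age in sorted(ages_days, reverse=True):  # walk from oldest to newest
        # Assumption: "older than m days" is a strict comparison.
        rule = next(((n, m) for n, m in ordered if age > m), None)
        if rule is None:
            # Younger than every m: no rule applies, snapshot is untouched.
            kept.append(age)
            last_kept_age = age
            continue
        n, _ = rule
        if n == 0:
            # n = 0 is read here as "delete everything older than m days".
            continue
        # Keep one snapshot every n days, measured from the last kept one.
        if last_kept_age is None or last_kept_age - age >= n:
            kept.append(age)
            last_kept_age = age
    return kept


if __name__ == "__main__":
    # The -keep values from the automated prune in this post.
    policies = [(0, 700), (365, 365), (30, 180), (7, 30), (1, 7)]
    survivors = apply_keep(range(800), policies)  # one snapshot per day
    print(len(survivors), "snapshots survive; oldest age:", max(survivors))
```

Note that the sketch only models which revisions are selected for deletion. It says nothing about when the chunks those revisions referenced become fossils or when the fossils are finally removed, which is the part this thread is actually about.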

What backend are you using? Normal file storage?

Seems weird you have a fossils directory at all, because local file and ssh backends rename chunks to .fsl. Are they being accessed in some other way?

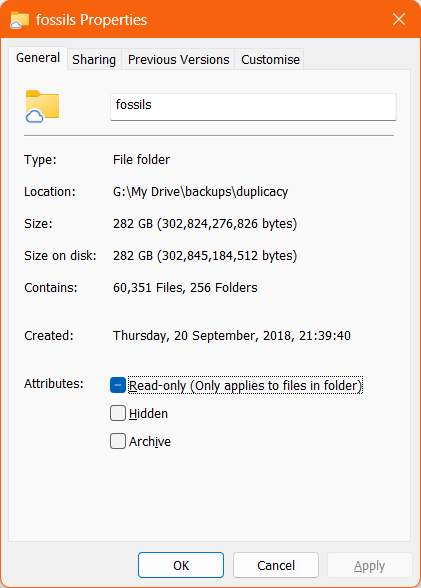

I’m using Google Drive, both via the API and via the Google Drive desktop app. All my automated backups and prunes go only through the API, and I tried running the prune both with the API and with the desktop app. Neither seems to touch the fossils folder.

So the folder is probably created as normal backups are done via the API.

… permissions …

I don’t think this applies to me as uploading works and pruning works. Everything seemed to work except for cleanup of the fossils folder.

perhaps this was an old storage pulled

I don’t think so? I started using Duplicacy with Google Drive a long, long time ago, and all my backups from all my devices have been stored in GD since the beginning.

Anyway, try -exhaustive -exclusive on its own and see what it does.

Trying….

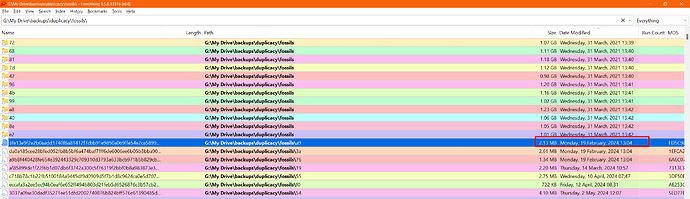

.\.duplicacy\z.exe -d -log prune -a -exhaustive -exclusive -threads 32

-

When using app, it doesn’t do anything.

When using app, it doesn’t do anything.

-

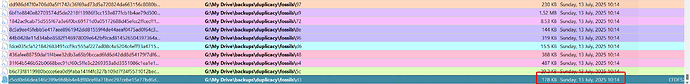

When using the API, it deleted all the fossils. So that’s nice.

When using the API, it deleted all the fossils. So that’s nice.

Thank you for the suggestion @Droolio

Now comes the next question: why does -keep interfere with -exhaustive (or with -exclusive)? I run prune automatically multiple times per month (-all -exhaustive -keep 0:700 -keep 365:365 -keep 30:180 -keep 7:30 -keep 1:7), so the fossil entries from < 1 month ago should have been deleted, but they were not.

There is nothing in the description of the flags which suggests this to me.

@gchen do you have an answer?
