After some more experimenting (full logs here, if interested: https://1drv.ms/u/s!ArUmnWK8FkGnkvhVY_zPz802KgFqOg?e=wV6tMw), it looks like the prune was failing at the SNAPSHOT_DELETE step.

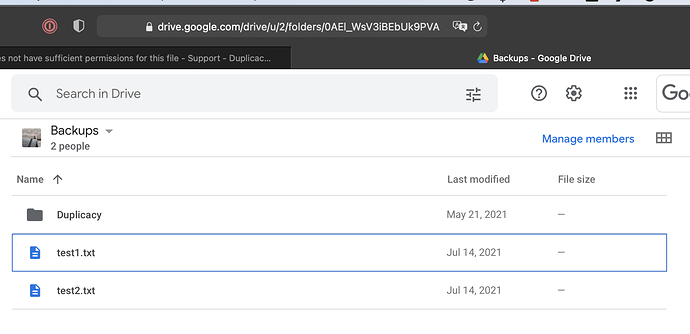

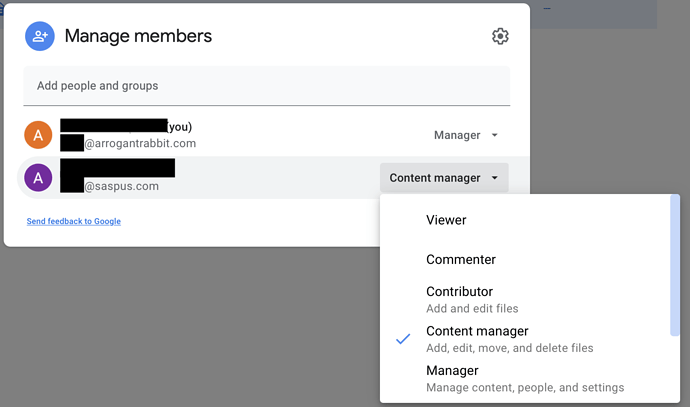

I created another storage that points directly to the Google account that created the shared folder, as opposed to the account the folder was shared with, and the prune completed successfully:

2021-07-09 19:04:58.585 WARN CHUNK_FOSSILIZE Chunk 6ca17f466d2fa26e4e3b53c938adac0a38a6b60fe981833fa673b2170e8c21dc is already a fossil

2021-07-09 19:04:59.273 WARN CHUNK_FOSSILIZE Chunk 7844ba28a9aa46a1642c030b95c58259c37c82641aa6bdd6565083d226107d7c is already a fossil

2021-07-09 19:04:59.944 WARN CHUNK_FOSSILIZE Chunk e23dae6f5358746cabf3fc93aa8e9245b0f0f060b1affe1c8c912c2dd0aff2db is already a fossil

2021-07-09 19:05:00.617 WARN CHUNK_FOSSILIZE Chunk 273efbfe18c7b1eee64735417405be70646ad96adea7413f3b8222546b6c559d is already a fossil

2021-07-09 19:05:00.734 INFO FOSSIL_COLLECT Fossil collection 1 saved

2021-07-09 19:05:01.934 INFO SNAPSHOT_DELETE The snapshot obsidian-users at revision 4 has been removed

2021-07-09 19:05:03.067 INFO SNAPSHOT_DELETE The snapshot obsidian-users at revision 5 has been removed

2021-07-09 19:05:04.323 INFO SNAPSHOT_DELETE The snapshot obsidian-users at revision 6 has been removed

2021-07-09 19:05:05.617 INFO SNAPSHOT_DELETE The snapshot obsidian-users at revision 7 has been removed

2021-07-09 19:05:06.869 INFO SNAPSHOT_DELETE The snapshot obsidian-users at revision 8 has been removed

2021-07-09 19:05:08.077 INFO SNAPSHOT_DELETE The snapshot obsidian-users at revision 9 has been removed

...

Any idea why that could be the case? I confirmed that the snapshot files can be deleted with Cyberduck using the other Google account, so maybe Duplicacy does something differently when deleting files through the shared account?

For now, as a workaround, I can just use the connection to the main account to run the prune, but it would be great to get to the bottom of this.
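In case it helps anyone compare a failing run against a working one, here is a rough Python sketch that tallies log lines by level and event (for example `INFO SNAPSHOT_DELETE`). It assumes every line follows the `timestamp LEVEL EVENT message` layout seen in the excerpt above. The names `LOG_LINE` and `summarize` are my own and not part of Duplicacy.

```python
import re
from collections import Counter

# Assumed layout, based only on the excerpt above:
# 2021-07-09 19:05:01.934 INFO SNAPSHOT_DELETE The snapshot ... has been removed
LOG_LINE = re.compile(
    r"^(?P<ts>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}\.\d{3}) "
    r"(?P<level>[A-Z]+) (?P<event>[A-Z_]+) (?P<msg>.*)$"
)


def summarize(lines):
    """Count (level, event) pairs; lines that do not match are skipped."""
    counts = Counter()
    for line in lines:
        match = LOG_LINE.match(line.strip())
        if match:
            counts[(match["level"], match["event"])] += 1
    return counts


# A few lines copied from the successful run above.
sample = [
    "2021-07-09 19:05:00.617 WARN CHUNK_FOSSILIZE Chunk 273efbfe18c7b1eee64735417405be70646ad96adea7413f3b8222546b6c559d is already a fossil",
    "2021-07-09 19:05:00.734 INFO FOSSIL_COLLECT Fossil collection 1 saved",
    "2021-07-09 19:05:01.934 INFO SNAPSHOT_DELETE The snapshot obsidian-users at revision 4 has been removed",
    "2021-07-09 19:05:03.067 INFO SNAPSHOT_DELETE The snapshot obsidian-users at revision 5 has been removed",
]

for (level, event), n in sorted(summarize(sample).items()):
    print(level, event, n)
```

Running it over both log files and comparing the counts should show whether the shared-account run stops before any `SNAPSHOT_DELETE` line appears, or logs that event at a different level.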