No, that’s the entire tail of the log. There is no mention of which file it failed on.

Attempt one: prune-20210704-160929.log

2021-07-04 16:29:29.398 WARN CHUNK_FOSSILIZE Chunk be5d223b4a0266f4b218edecf345657a1401e649c464522c2f94899b6e22841c is already a fossil

2021-07-04 16:29:29.970 WARN CHUNK_FOSSILIZE Chunk 6576d4f49125159558d4b691cd4b7682056d7a99f8634b83745dbef70bc6da63 is already a fossil

2021-07-04 16:29:30.540 WARN CHUNK_FOSSILIZE Chunk f16ab189465900475364a9c3f74ee5c432195aa974117bd63088a8cfd71c670c is already a fossil

2021-07-04 16:29:30.640 INFO FOSSIL_COLLECT Fossil collection 1 saved

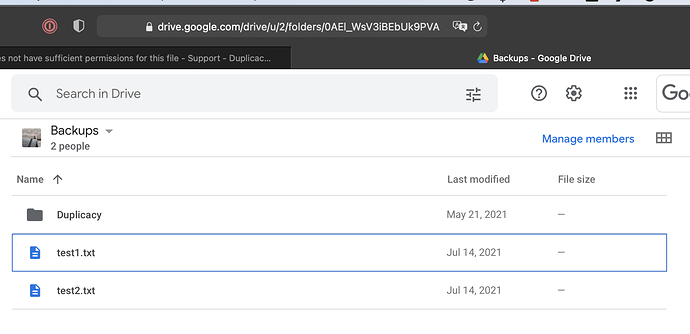

2021-07-04 16:29:31.400 DEBUG GCD_RETRY [0] The user does not have sufficient permissions for this file.; retrying after 0.32 seconds (backoff: 2, attempts: 1)

2021-07-04 16:29:32.190 DEBUG GCD_RETRY [0] The user does not have sufficient permissions for this file.; retrying after 7.64 seconds (backoff: 4, attempts: 2)

2021-07-04 16:29:40.290 DEBUG GCD_RETRY [0] The user does not have sufficient permissions for this file.; retrying after 13.07 seconds (backoff: 8, attempts: 3)

2021-07-04 16:29:53.861 DEBUG GCD_RETRY [0] The user does not have sufficient permissions for this file.; retrying after 23.69 seconds (backoff: 16, attempts: 4)

2021-07-04 16:30:18.054 DEBUG GCD_RETRY [0] The user does not have sufficient permissions for this file.; retrying after 54.28 seconds (backoff: 32, attempts: 5)

2021-07-04 16:31:12.793 DEBUG GCD_RETRY [0] The user does not have sufficient permissions for this file.; retrying after 110.84 seconds (backoff: 64, attempts: 6)

2021-07-04 16:33:04.483 DEBUG GCD_RETRY [0] The user does not have sufficient permissions for this file.; retrying after 122.48 seconds (backoff: 64, attempts: 7)

Attempt two: prune-20210704-163533.log

2021-07-04 16:49:24.660 WARN CHUNK_FOSSILIZE Chunk 420c301e7ec96021b8ab8f802664fabbdda943aace9875b296ece08d28d5b3d6 is already a fossil

2021-07-04 16:49:25.167 WARN CHUNK_FOSSILIZE Chunk 5c362b02f36e4d7d14ea26c0e0c2165a7f304eaf7ca4706cc921ade92fa6583c is already a fossil

2021-07-04 16:49:25.706 WARN CHUNK_FOSSILIZE Chunk fa9a5d7cb3fca8ef77a2612fb5a96e95652b2c3ce0d9ec84baf01386147f4886 is already a fossil

2021-07-04 16:49:25.978 INFO FOSSIL_COLLECT Fossil collection 2 saved

2021-07-04 16:49:26.766 DEBUG GCD_RETRY [0] The user does not have sufficient permissions for this file.; retrying after 1.37 seconds (backoff: 2, attempts: 1)

2021-07-04 16:49:28.565 DEBUG GCD_RETRY [0] The user does not have sufficient permissions for this file.; retrying after 4.72 seconds (backoff: 4, attempts: 2)

2021-07-04 16:49:33.848 DEBUG GCD_RETRY [0] The user does not have sufficient permissions for this file.; retrying after 0.26 seconds (backoff: 8, attempts: 3)

2021-07-04 16:49:34.688 DEBUG GCD_RETRY [0] The user does not have sufficient permissions for this file.; retrying after 16.79 seconds (backoff: 16, attempts: 4)

2021-07-04 16:49:51.915 DEBUG GCD_RETRY [0] The user does not have sufficient permissions for this file.; retrying after 48.22 seconds (backoff: 32, attempts: 5)

I’ve logged in to drive.google.com as the user Duplicacy is using and successfully created and deleted a new folder there, so the Google permissions seem OK.

How can I figure out which file it is complaining about? Is it the last “already a fossil” chunk logged? The first?
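In case it helps anyone looking at a similar log, here is a rough sketch of how I would pull out the last thing logged before the retries begin. It assumes every log line has the `date time LEVEL LOG_ID message` shape seen above; the function name and the regular expression are my own, not anything from Duplicacy. It will not name the file that was rejected, only the last step that was logged before the first GCD_RETRY.

```python
import re

# Assumed line shape: "<date> <time> <LEVEL> <LOG_ID> <message>"
LINE_RE = re.compile(r"^(\S+ \S+) (\w+) (\w+) (.*)$")


def last_event_before_retries(log_text):
    """Return (timestamp, level, log_id, message) for the last parsed line
    that precedes the first GCD_RETRY line, or None if there is none."""
    previous = None
    for raw in log_text.splitlines():
        match = LINE_RE.match(raw.strip())
        if not match:
            continue  # blank lines and anything that is not a log line
        if match.group(3) == "GCD_RETRY":
            return previous
        previous = match.groups()
    return None


sample = """\
2021-07-04 16:29:30.540 WARN CHUNK_FOSSILIZE Chunk f16ab189465900475364a9c3f74ee5c432195aa974117bd63088a8cfd71c670c is already a fossil

2021-07-04 16:29:30.640 INFO FOSSIL_COLLECT Fossil collection 1 saved

2021-07-04 16:29:31.400 DEBUG GCD_RETRY [0] The user does not have sufficient permissions for this file.; retrying after 0.32 seconds (backoff: 2, attempts: 1)
"""

print(last_event_before_retries(sample))
```

On both of my attempts the last line before the retries is the FOSSIL_COLLECT one, not a CHUNK_FOSSILIZE one.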

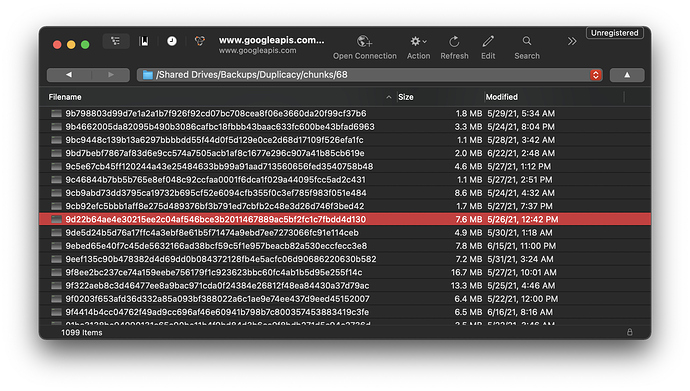

Not sure if this is relevant, but near the beginning of the log there is this message:

2021-07-04 16:09:32.689 DEBUG STORAGE_NESTING Chunk read levels: [1], write level: 1

while the directory structure is chunks/XX/XXXXX..XX, i.e. it is not single level.

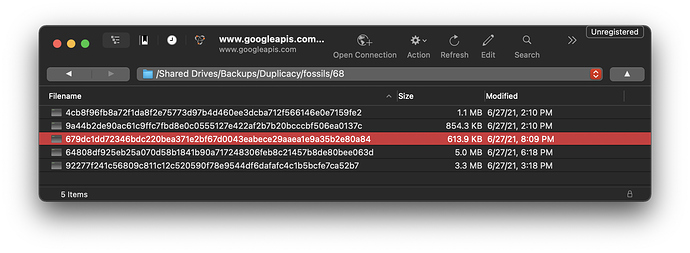

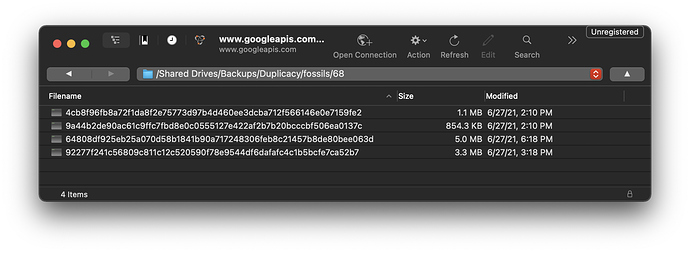

Edit.

Prune logs under /Library/Caches/Duplicacy/localhost/all/.duplicacy/logs/ contain only

Marked fossil 0ec236fb81269dadefc02144cf6f54326ed87ace42d7542762608a7ad06a5dce

Marked fossil d34577c35e5abdf31d8350b816cd9e05e527fa68f9e261b3e2f18e77ef1f1eef

Marked fossil 1880fb12e95efb3e63ecb8e22f1691a2412b8eba9ad5eb48c7c97b4d3a2f5829

Marked fossil be5d223b4a0266f4b218edecf345657a1401e649c464522c2f94899b6e22841c

Marked fossil 6576d4f49125159558d4b691cd4b7682056d7a99f8634b83745dbef70bc6da63

Marked fossil f16ab189465900475364a9c3f74ee5c432195aa974117bd63088a8cfd71c670c

Fossil collection 1 saved

and

Marked fossil 5b1be728795a1d1683e1f708bec73ceafe8d0dfec4d21d025260d35ef27fd298

Marked fossil 15476679e68311bf78ca5eea4ede94e0102ef3b1e54847347e743dc4064e325e

Marked fossil 420c301e7ec96021b8ab8f802664fabbdda943aace9875b296ece08d28d5b3d6

Marked fossil 5c362b02f36e4d7d14ea26c0e0c2165a7f304eaf7ca4706cc921ade92fa6583c

Marked fossil fa9a5d7cb3fca8ef77a2612fb5a96e95652b2c3ce0d9ec84baf01386147f4886

Fossil collection 2 saved

The two logs end with “Fossil collection 1 saved” and “Fossil collection 2 saved” respectively, with no other messages.

Maybe it fails at something it tries to do after dealing with the fossils?
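To see how the two sets of logs line up, here is a small sketch that splits the “Marked fossil” IDs from the prune log into those the main log also reports as “already a fossil” and those it does not. The function names are made up for illustration, and the only assumption is the message wording quoted above.

```python
import re

HEX64 = r"[0-9a-f]{64}"  # chunk IDs in these logs are 64 hex characters


def already_fossil_ids(main_log):
    """Chunk IDs from 'CHUNK_FOSSILIZE Chunk <id> is already a fossil' lines."""
    return re.findall(rf"CHUNK_FOSSILIZE Chunk ({HEX64}) is already a fossil", main_log)


def marked_fossil_ids(prune_log):
    """Chunk IDs from 'Marked fossil <id>' lines, in log order."""
    return re.findall(rf"Marked fossil ({HEX64})", prune_log)


def split_marked(main_log, prune_log):
    """Return (marked and already a fossil, marked only), keeping log order."""
    already = set(already_fossil_ids(main_log))
    marked = marked_fossil_ids(prune_log)
    both = [c for c in marked if c in already]
    only_marked = [c for c in marked if c not in already]
    return both, only_marked


main_log = """\
2021-07-04 16:29:29.398 WARN CHUNK_FOSSILIZE Chunk be5d223b4a0266f4b218edecf345657a1401e649c464522c2f94899b6e22841c is already a fossil
2021-07-04 16:29:30.640 INFO FOSSIL_COLLECT Fossil collection 1 saved
"""
prune_log = """\
Marked fossil 1880fb12e95efb3e63ecb8e22f1691a2412b8eba9ad5eb48c7c97b4d3a2f5829
Marked fossil be5d223b4a0266f4b218edecf345657a1401e649c464522c2f94899b6e22841c
Fossil collection 1 saved
"""

both, only_marked = split_marked(main_log, prune_log)
print(len(both), len(only_marked))
```

In the excerpts I pasted, the three “already a fossil” chunks in each attempt are the last three “Marked fossil” entries of the matching fossil collection.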