Hello all. I'm testing Duplicacy as an alternative to ARQ Backup for a couple of reasons. The main one is that ARQ can't actually enforce its budget setting, so every few months I have to start my backup from scratch, which is annoying. At first glance I don't see an equivalent budget or size-limit option in Duplicacy, but perhaps I'm missing it.

The other reason is that ARQ is extremely slow to scan a drive while backing up, meaning I can only effectively run one backup per day.

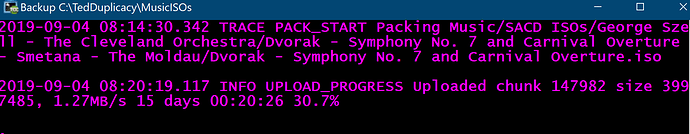

Anyhow, I'm running my initial backup over the LAN before I move the target machine back to a relative's house, but it's going quite slowly. At first I saw 8 MB/s for a single drive over SFTP. When I switched to a network share, the speed dropped slightly.

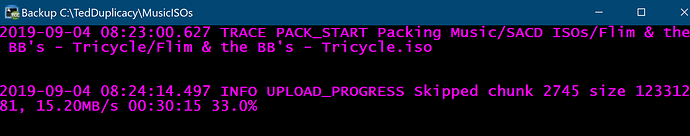

After I changed the Web UI's IP address, the speed increased significantly. For a while, each of my drives (all backing up at the same time) ran at around 16 MB/s. Since then it has gradually ramped down, and each drive is now at about 3 MB/s.

As far as I can tell, I'm not hitting a CPU or RAM bottleneck. I have 24 threads at 2.93 GHz available and CPU usage is quite low. Only about 30% of my 72 GB of RAM is in use, mostly by another application.

What else could I look at as a potential cause of this slowdown? Or is this pretty much expected, due to the overhead of deduplication or encryption?

My other note is that the Web UI seems oversimplified. I feel like I should be able to access a lot more. In my searching I see references to a setting for the number of threads used and to tweaks people make to chunk sizes, but these all appear to be available only in the command-line version. I might be missing something, but it definitely feels like there should be an advanced-mode toggle or something along those lines. Perhaps there's a way to access the command line from the Web UI, but I can't find it.

I was also curious whether the ability to search for a specific file or folder name will be added. That's one thing I really like about ARQ, even if it was dog slow to find a file and load the file lists from the SFTP server.