Thank you so much for confirming all of this for me! I think I will go with the second option. Every now and then I have as much as 100 GB written in one day; other times almost nothing at all. I can look at the statistics after my next remote backup and reseed by creating r2 locally if I’m worried about the amount of data to be uploaded.

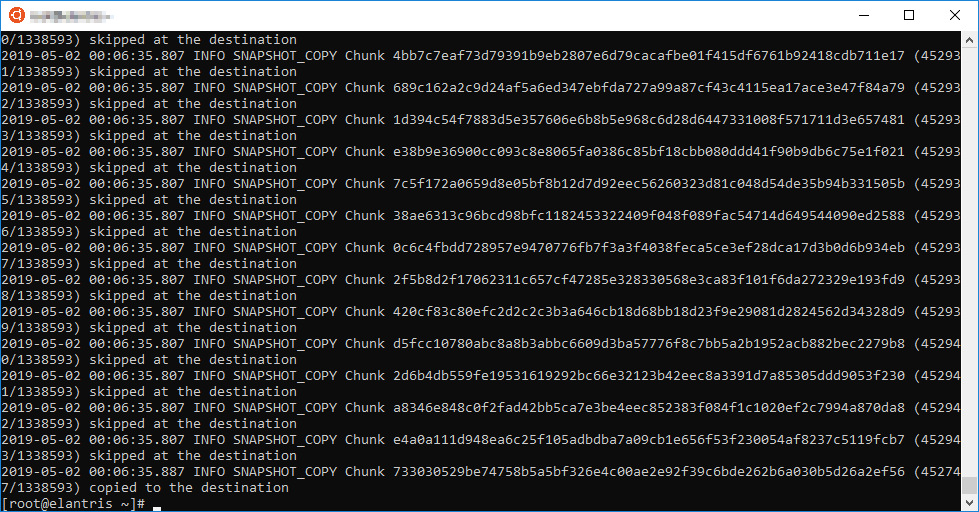

I was guessing that the software assumes all chunks from the most recent previous rev still exist, given how fast it runs after the initial run. Even with my internet speeds, more of the time goes to chunking than to uploading.

This makes me question things like “How does it know what’s new/changed from the previous rev?” “What are the chances it misses changes?” “How do file attributes affect the backup? If I do a setfacl to add a user to every file on my data drive, is it going to re-chunk the whole thing, or will it even notice a difference?” “Did I know the answers to all of this years ago and have forgotten? (lol) If not, how did I not question it?”

You’ve been a great help across all of my posts today. I will get out of your hair for now. This is like… my yearly check-in. lol

As a final check, I will run my check script on both storages when I’m done for good measure.

It would be really expensive if it downloads the content chunks, then just skips them if they already exist. I think it would really depend on how it determines what needs to be copied.
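To make the cheap case concrete, here is a minimal sketch of the behavior I would hope for: compare chunk IDs first and only transfer what the destination is missing. This is purely an assumption for illustration, not how the software is confirmed to work. The function name `plan_copy` and the chunk IDs are made up.

```python
def plan_copy(source_chunks, dest_chunks):
    """Return the chunk IDs that would still need to be transferred.

    Hypothetical sketch: assumes both storages can list their chunk IDs
    cheaply, so nothing has to be downloaded just to find out it exists.
    """
    dest = set(dest_chunks)
    seen = set()
    to_copy = []
    for chunk_id in source_chunks:
        # Skip chunks the destination already has, and repeats in the source list.
        if chunk_id not in dest and chunk_id not in seen:
            seen.add(chunk_id)
            to_copy.append(chunk_id)
    return to_copy


if __name__ == "__main__":
    source = ["a1", "b2", "c3", "d4"]   # made-up chunk IDs on the source storage
    dest = ["a1", "c3"]                 # made-up chunk IDs already on the destination
    print(plan_copy(source, dest))      # only the missing chunks are listed
```

If it works anything like this, the cost of a copy would scale with the number of missing chunks rather than with the total size of the storage.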

I’m not sure what to do

is all about deduplication, so all should be OK with how much new data is uploaded, right?
