So I started with an offsite backup, then later decided I wanted to add a local one. I did a copy so that all of the chunking, etc. would be the same. Then, because I didn’t want to incur charges from my offsite provider, I initialized the local backup by performing a regular backup command. This created a revision, 1.

From now on I want to copy from my local media to the offsite one (presumably saving some function calls to the offsite storage, saving CPU, and keeping the two in sync). However, the local revision is 1. The problem is that if I keep copying these, a local revision number may collide with a non-pruned, higher revision number that already exists on the offsite storage (if the program will even let me copy a lower revision up to begin with).
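To make the worry concrete, here is a toy sketch in Python. It only models revision numbers as plain integers; it is not how the backup program actually stores or copies revisions, and the function names and sample numbers are made up for illustration.

```python
def conflicting_revisions(local_revisions, offsite_revisions):
    """Return the revision numbers present in both storages, sorted."""
    return sorted(set(local_revisions) & set(offsite_revisions))


def next_safe_revision(local_revisions, offsite_revisions):
    """Return the smallest revision number above everything in either storage."""
    return max([0, *local_revisions, *offsite_revisions]) + 1


# Hypothetical state: the offsite storage already holds revisions 1..5,
# while the freshly initialized local storage only holds revision 1.
offsite = [1, 2, 3, 4, 5]
local = [1]

# Revision 1 exists on both sides, so copying it up would clash.
print(conflicting_revisions(local, offsite))

# If the local storage keeps counting (2, 3, ...), those clash as well.
print(conflicting_revisions(local + [2, 3], offsite))

# A local revision would have to start here to avoid any clash.
print(next_safe_revision(local, offsite))
```

In this model the local backup would need to jump straight to a number above the highest offsite revision, which is exactly what I don’t know how to do.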

Can I run the backup again and specify the revision number? Or perhaps spoof the “next” revision number somehow? Then I could prune the first revision. I considered the possibility of changing the current local version, but it’s encrypted, so whatever the solution, I feel like it has to somehow be done through the program.

RELATED POSTS:

(sort of not really this next one)

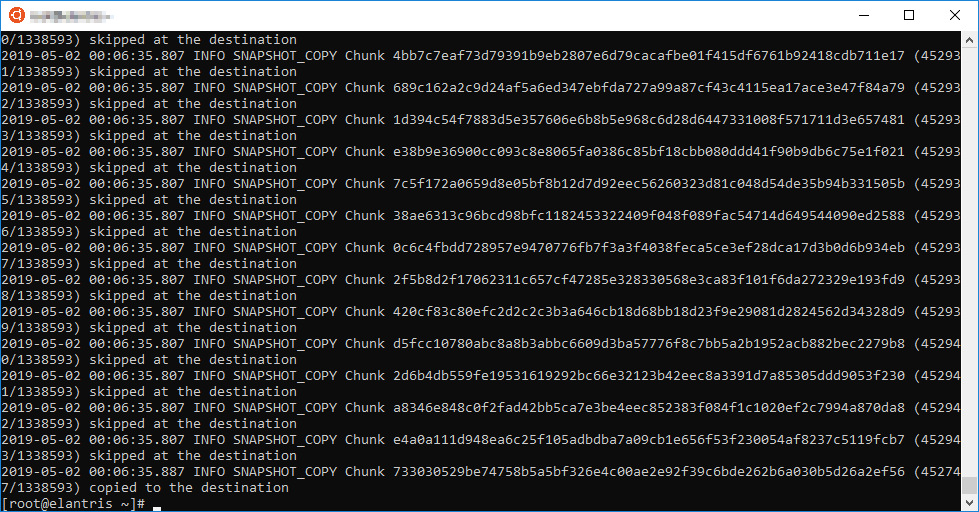

It would be really expensive if it downloads the content chunks, then just skips them if they already exist. I think it would really depend on how it determines what needs to be copied.

I’m not sure what to do

is all about deduplication, all should be ok with how much new data is uploaded, right?
