I think what you want to do is possible in the web gui. It’s still in beta as more and more things are added, but you may want to give that a try and see if it does the job.

Maybe I’m too new around here…where does one get the web GUI?

Silly me, expecting to find it on the main Duplicacy website or download page…

I think I’ll wait until the 1.0 release. I came to duplicacy from duplicati to try and get away from all the perpetual beta bugs.

You can start using the command line duplicacy, and then perhaps realize that you don’t actually need UI in the first place. All you need is to configure a bunch of backups and then schedule periodic runs with the task Scheduler on your OS. There is literally nothing else to it.
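As a rough illustration of the "configure once, then schedule" approach, here is a minimal wrapper sketch. The paths in `REPO_DIR` and `LOG_FILE`, the `DUPLICACY` override variable and the function name `run_backup` are all placeholders of mine, not anything from this thread. It assumes the repository has already been initialized with `duplicacy init`.

```shell
#!/bin/sh
# Hypothetical wrapper for a scheduled Duplicacy backup.
# REPO_DIR and LOG_FILE are placeholder paths; adjust them to your setup.
REPO_DIR="${REPO_DIR:-$HOME/my-repository}"
LOG_FILE="${LOG_FILE:-$HOME/duplicacy-backup.log}"
# Allows pointing at a specific binary (assumption: "duplicacy" is on PATH).
DUPLICACY="${DUPLICACY:-duplicacy}"

run_backup() {
    # Duplicacy is run from inside the initialized repository directory.
    cd "$REPO_DIR" || return 1
    # Append both normal output and errors to the log file.
    "$DUPLICACY" backup -stats >> "$LOG_FILE" 2>&1
}

# To use this as a script, call run_backup on the last line and schedule it,
# for example with a crontab entry that runs daily at 02:00:
#   0 2 * * * /path/to/run_backup.sh
```

On Windows the same command line could be registered as a Task Scheduler action instead of a cron entry.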

A few years back I failed to find a backup tool (to replace CrashPlan) that does not fail at actually doing backups. So while a GUI is nice to have, it is not a priority, and there turned out to be only one backup tool that works in the first place: this one.

I can badmouth every single backup tool in existence, backed up with specific horrific failure examples, including (and especially) the duplicati you’ve mentioned, but I don’t think that would be an ethical thing to do on this specific forum.

That said, Duplicacy CLI is straightforward and flexible, and I personally don’t feel the need for a GUI at all. Seriously, I can’t think of any reason for it other than to avoid spending 5 minutes reading the manual about two commands you need to use once.

Note, the GUIs (both the current basic one and the beta web GUI) execute the command line version to actually perform backups. The command line version has not changed; it’s stable and mature, so there’s no reason to wait.

You should then be able to import the configuration into the stable GUI when it is released, but I’m sure you will be just fine with the command line, since by then it will all just work.

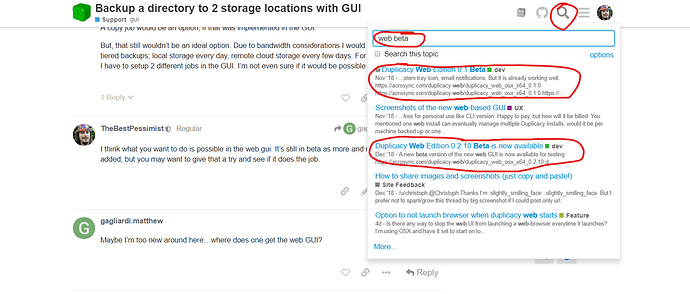

Backing up one directory to 2 storage locations isn’t supported by the old GUI, but is possible with the new web-based GUI, available from the thread “Duplicacy Web Edition 0.2.10 Beta is now available”.

While I could have written more or less exactly the same post (though you seem to have tried even more backup tools than I), I don’t think the above sentence is fair, or even correct. It’s not a matter of five minutes. And not 50 either. Perhaps five hours, if you resist temptations of experimenting and trying to optimise things (or to understand how duplicacy works). And if you’re either good at scripting or happy with the basic features of duplicacy (e.g. no logs, except pruning logs). I’d still say those five hours are probably worth it, though.

Can you confirm this, @gchen? I was under a different impression but would welcome this, of course.

Thank you, finally a useful answer.

Three questions:

- Is there an ETA on the release of the WebGUI?

- Will it support scheduling the local vs remote at different intervals?

- Right now I have two separate backup jobs working (1 local, 1 cloud) by using a proxy directory as noted above. Will the webGUI be able to adopt the cloud storage into a single backup job, or will I have to re-upload? It takes ~4 days for my initial backup to the cloud, so I don’t want to have to repeat that later.

The web-ui version is even more closely based on the CLI version than the GUI version was. Basically, the web-ui version is “only” a fancy front-end for telling the CLI version what to do. So the simple answer is: whatever you can do with the CLI version, you can do with the web-ui version, except, of course, that you may have to resort to the command line if the web-ui doesn’t yet support a particular setup. I don’t know what will or will not be supported by the first web-ui release, but you might also find some answers in previous discussions about #multi-storage setups and #web-ui.

@gchen, perhaps you could create a matrix with all CLI commands and options, indicating whether they will be available in the first release of the web-ui?

Yes, not only that, but if you set up duplicacy on a different computer, it will also be able to backup to the same storage. Or am I misunderstanding your question?

No, I don’t plan to implement this feature for the first release. Again, since this is of one-time use I would assign a low priority to it.

I think you are misunderstanding the question. Right now I have 2 backup jobs acting on the same source, one to local server one to cloud. To save the week of uploading time already invested, I wanted to know if the “copy” command (either in CLI or webGUI) would re-use all the chunks in the cloud to adopt without having to re-upload the entire job.

That’s exactly what copy does, so you’re safe here.

So if I have a full backup in the cloud, from a different job, copy will recognize that the files are the same and not re-upload everything from the local backup?

I think I’m going to have to test that out. I don’t understand the way chunks are broken up and created, but would two jobs actually create the exact same chunks?

Note that the second of the two Duplicacy storages (local or cloud) would need to have been initialised with the add command (using its -copy option) to make them copy-compatible. If you haven’t done this, you’d need to re-upload to (or download from) the cloud. If you did do this, copy recognises existing chunks and avoids uploading them again.
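To make the copy-compatibility point concrete, here is a minimal sketch of setting up two storages from scratch. The storage names `local` and `cloud`, the snapshot ID `my-docs`, both storage URLs and the function name are placeholders chosen for illustration. The `DUPLICACY` variable is only there so a different binary path can be substituted.

```shell
# Assumption: "duplicacy" is on PATH; override DUPLICACY to change that.
DUPLICACY="${DUPLICACY:-duplicacy}"

setup_copy_compatible() {
    # First storage: created with init, named "local" instead of "default".
    "$DUPLICACY" init -storage-name local my-docs /mnt/backup/duplicacy &&
    # Second storage: added with -copy so that it takes over the storage
    # parameters of "local", which is what makes the two copy-compatible.
    "$DUPLICACY" add -copy local cloud my-docs b2://my-bucket
}
```

Run from inside the repository directory, this would be a one-time setup step. An existing storage that was created with a plain `init` in a separate repository, as in the two-job setup discussed here, is not covered by this sketch.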

Overall, I’d say it’s better to go:

Repository > Local > Cloud

…than:

Repository > Local

Repository > Cloud

That’s what I was afraid of.

Maybe in the future I’ll spend the week re-uploading everything the “better” way, but for now I think I have to continue with two jobs even though it is less efficient.

I think this is an example of very basic and essential advice that new users should find very easily without looking for it. Well, we already have it in the Duplicacy quick-start (CLI), but I don’t think it’s included in the GUI guide. I guess the web-ui will solve this, as it can (I suppose) easily alert users to these things.

Well, unless I misunderstand you again, you won’t have to re-upload anything to the cloud but can instead download everything from the cloud if you create a new local storage using add and then copy everything from the cloud.

Once that is done, it’s probably better to switch things around as was mentioned earlier:

Repository > Local > Cloud

(where the first > is a backup and the second a copy job.)
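As a sketch of what that backup-then-copy layout (Repository > Local > Cloud) might look like on the command line, assuming two copy-compatible storages that were named `local` and `cloud` when they were set up (both names, and the function name, are placeholders):

```shell
# Assumption: "duplicacy" is on PATH; override DUPLICACY to change that.
DUPLICACY="${DUPLICACY:-duplicacy}"

backup_then_copy() {
    # First ">": back up the repository to the local storage.
    "$DUPLICACY" backup -storage local -stats &&
    # Second ">": copy snapshots from the local storage to the cloud storage.
    # Only runs if the backup succeeded.
    "$DUPLICACY" copy -from local -to cloud
}
```

The two commands do not have to run together. The copy could just as well be scheduled less often than the backup.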

Yes, the lack of documentation on the limitations and function of the GUI has definitely hindered me.

Can you re-initialize a storage location? If I download everything from the cloud backup to local (initialized with the add), how could I switch that around to make local primary and add the “add” to the cloud? When I tried to init something existing, it exited because the .duplicacy folder and config file already existed.

Or, would I have to init a new repository/storage pair and then manually copy/move the chunks into those locations?

It depends what you mean by that. What I meant was that you delete your local storage (duplicacy storage, that is, of course) and initialize a new storage using the add command. You can call that “re-initialize” if you wish.

I’m sorry, I can’t help you with the gui version as I have never used it.

Ignore the GUI; CLI is fine if it’s the only way.

I’m trying to understand how the suggestion to copy from the cloud to the local backup gets me closer to

Repository > Local > Cloud

You imply that once the copy from Cloud>local is done that I can switch things around to go the other way, but wouldn’t that require the cloud storage to be init with the “add” flag, which it isn’t currently?

How would I switch it around as you suggest?

This is what I would do…

1) Create a new local storage using the add -copy command (copying the parameters from your cloud storage).

This effectively copies the storage parameters such as chunk size and encryption keys, making it copy-compatible. (You can use a different master password when using -encrypt if you want.)

2) Adjust your backup schedules to backup only to the local storage.

3) Run all your backup jobs to this new storage, using the same repository IDs.

This quickly populates the local storage with chunks that should be mostly identical to the ones in the cloud (minus historic revisions and some minor differences due to chunk boundaries while rehashing).

4) Copy (with -all) the cloud storage to the local storage.

This fills in the rest of the missing chunks from previous revisions, and brings all those old snapshots back to your local storage. It should also overwrite snapshot revision 1 of all the backups made in step 3 with the original ones you made to the cloud some time ago, unless these were pruned*.

5) Make a new job to copy -all from local storage to cloud storage.

Perhaps add this after the backup jobs, or you can do it once a day or whenever.

6) [Optional] Run a prune -exclusive -exhaustive on the local storage to tidy things up.

There’ll probably be unreferenced chunks from step 3.

Step 3 is basically a time saving procedure, and should hopefully save a lot of downloading.

You can do this with the web GUI but you’d need to use CLI for the add command.

*If the revision 1s in the cloud were pruned, the temporary local ones you made in step 3 won’t be overwritten in step 4, so you might want to manually delete all the revision 1 files in the local storage under snapshots/ just to be consistent. I don’t know if there’ll be any adverse effects if you don’t, though.
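The steps above might translate into something like the following sketch. Everything configurable here is an assumption: the cloud storage is assumed to be registered under the name `default`, the new local storage is given the hypothetical name `local`, and `SNAPSHOT_ID`, `LOCAL_URL` and the function names are placeholders. The copy commands are written without -all, on the understanding that a copy with no -id covers all snapshot IDs. Check that against the copy documentation before relying on it.

```shell
# Assumption: "duplicacy" is on PATH; override DUPLICACY to change that.
DUPLICACY="${DUPLICACY:-duplicacy}"
CLOUD_NAME="${CLOUD_NAME:-default}"     # assumed name of the existing cloud storage
LOCAL_NAME="${LOCAL_NAME:-local}"       # hypothetical name for the new local storage
SNAPSHOT_ID="${SNAPSHOT_ID:-my-docs}"   # placeholder; reuse the ID already in the cloud
LOCAL_URL="${LOCAL_URL:-/mnt/backup/duplicacy}"   # placeholder path

migrate_to_local_first() {
    # Step 1: new local storage that is copy-compatible with the cloud one.
    "$DUPLICACY" add -copy "$CLOUD_NAME" "$LOCAL_NAME" "$SNAPSHOT_ID" "$LOCAL_URL" &&
    # Step 3: back up to the new local storage to pre-populate its chunks.
    "$DUPLICACY" backup -storage "$LOCAL_NAME" -stats &&
    # Step 4: bring the remaining chunks and old revisions down from the cloud.
    "$DUPLICACY" copy -from "$CLOUD_NAME" -to "$LOCAL_NAME"
}

routine_job() {
    # Step 5: from now on, back up locally, then copy local to cloud.
    "$DUPLICACY" backup -storage "$LOCAL_NAME" -stats &&
    "$DUPLICACY" copy -from "$LOCAL_NAME" -to "$CLOUD_NAME"
}

tidy_local() {
    # Step 6 (optional): -exclusive assumes nothing else is using the storage.
    "$DUPLICACY" prune -storage "$LOCAL_NAME" -exclusive -exhaustive
}
```

Step 2 (pointing the backup schedule at the local storage only) is a scheduling change rather than a command, so it is not shown. `migrate_to_local_first` is meant to run once from inside the repository, `routine_job` on a schedule afterwards, and `tidy_local` only when no other backup, copy or prune is running.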