You can start using the command-line version of Duplicacy right away, and you may well realize that you don’t actually need a UI in the first place. All you need to do is configure your backups and then schedule periodic runs with your OS’s task scheduler (Task Scheduler on Windows, cron or launchd elsewhere). There is literally nothing else to it.

A few years back, while looking for a replacement for CrashPlan, I failed to find a backup tool that did not fail at actually doing backups, with one exception: this one. So while a GUI is nice to have, it is not a priority; what matters is that the tool works in the first place.

I could bad-mouth every single backup tool in existence, with specific, horrific failure examples, including (and especially) Duplicati, which you mentioned, but I don’t think that would be an ethical thing to do on this particular forum.

That said, the Duplicacy CLI is straightforward and flexible, and I personally don’t feel the need for a GUI at all. Seriously, I can’t think of any reason for one other than to avoid spending five minutes reading the manual for the two commands you need to use once.
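As a rough illustration of the "configure once, then schedule" workflow, here is a minimal Python sketch. It assumes each folder in the hypothetical `REPOSITORIES` list was already initialized once with `duplicacy init <snapshot id> <storage url>` (run from inside that folder) and that the `duplicacy` executable is on the PATH. The helper names `backup_command` and `run_backups` are illustrative only and not part of Duplicacy; the wrapper simply runs `duplicacy backup -stats -threads N` in each folder and collects the exit codes.

```python
from __future__ import annotations

import subprocess
from pathlib import Path

# Hypothetical folders; each must already have been set up once with
# `duplicacy init <snapshot id> <storage url>` from inside the folder.
REPOSITORIES = [
    Path.home() / "Documents",
    Path.home() / "Pictures",
]


def backup_command(threads: int = 4) -> list[str]:
    """Build the backup invocation: -stats prints a summary, -threads sets upload parallelism."""
    return ["duplicacy", "backup", "-stats", "-threads", str(threads)]


def run_backups(repositories: list[Path], threads: int = 4) -> dict[Path, int]:
    """Run `duplicacy backup` inside each repository folder and return {folder: exit code}."""
    results: dict[Path, int] = {}
    for repo in repositories:
        try:
            # Duplicacy finds the repository's settings from the working directory.
            completed = subprocess.run(backup_command(threads), cwd=repo)
            results[repo] = completed.returncode
        except OSError:
            # duplicacy is not on the PATH or the folder is missing: record a failure code.
            results[repo] = 127
    return results
```

To schedule it, you could point Task Scheduler or cron at a small script that calls `run_backups(REPOSITORIES)` and exits non-zero if any returned code is non-zero, so failed runs show up in the scheduler’s history. Scheduling `duplicacy backup` directly works just as well; the wrapper only bundles several repositories into one job.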

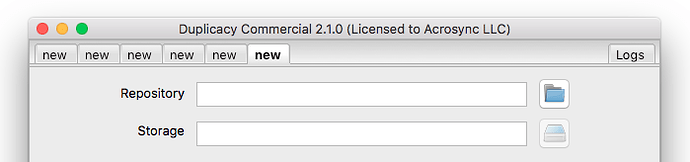

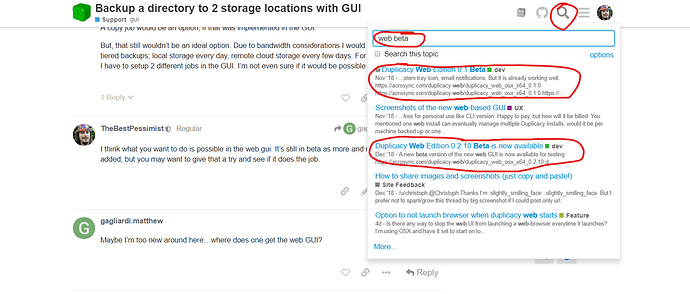

Note that the GUIs (both the current basic one and the beta web GUI) run the command-line version to actually perform backups. The command-line version has not changed; it is stable and mature, so there is no reason to wait.

You should then be able to import your configuration into the stable GUI when it is released, but I’m sure you will be just fine with the command line, since by then it will all just work.